The Pong Imperative: Driving Dishbrain to Suicide.

Achilles Desjardins had always found smart gels a bit creepy. People thought of them as brains in boxes, but they weren’t. They didn’t have the parts. Forget about the neocortex or the cerebellum—these things had nothing. No hypothalamus, no pineal gland, no sheathing of mammal over reptile over fish. No instincts. No desires. Just a porridge of cultured neurons, really: four-digit IQs that didn’t give a rat’s ass whether they even lived or died. Somehow they learned through operant conditioning, although they lacked the capacity either to enjoy reward or suffer punishment. Their pathways formed and dissolved with all the colorless indifference of water shaping a river delta.

—Maelstrom, 2001

There’s an obvious contradiction in those last two sentences. Reward/Punishment is the very foundation of operant conditioning; how can it work on something that experiences neither? I wasn’t sure, back at the turn of the century. I knew what feedback was. I knew that the pseudoneurons of neural nets had weights attached to them, that the odds of one of them firing would increase some fractional amount every time it made the “right” move. I figured that by the time my story took place, people would have figured out how to make the meat act like the software. It was far from the biggest liberty I took in that novel. No one rang me up to call bullshit on it, anyway.

Now, a quarter of a century later, I have my answer. Yes, folks, it’s time for another ripped-from-the-headlines story about Head Cheeses IRL.

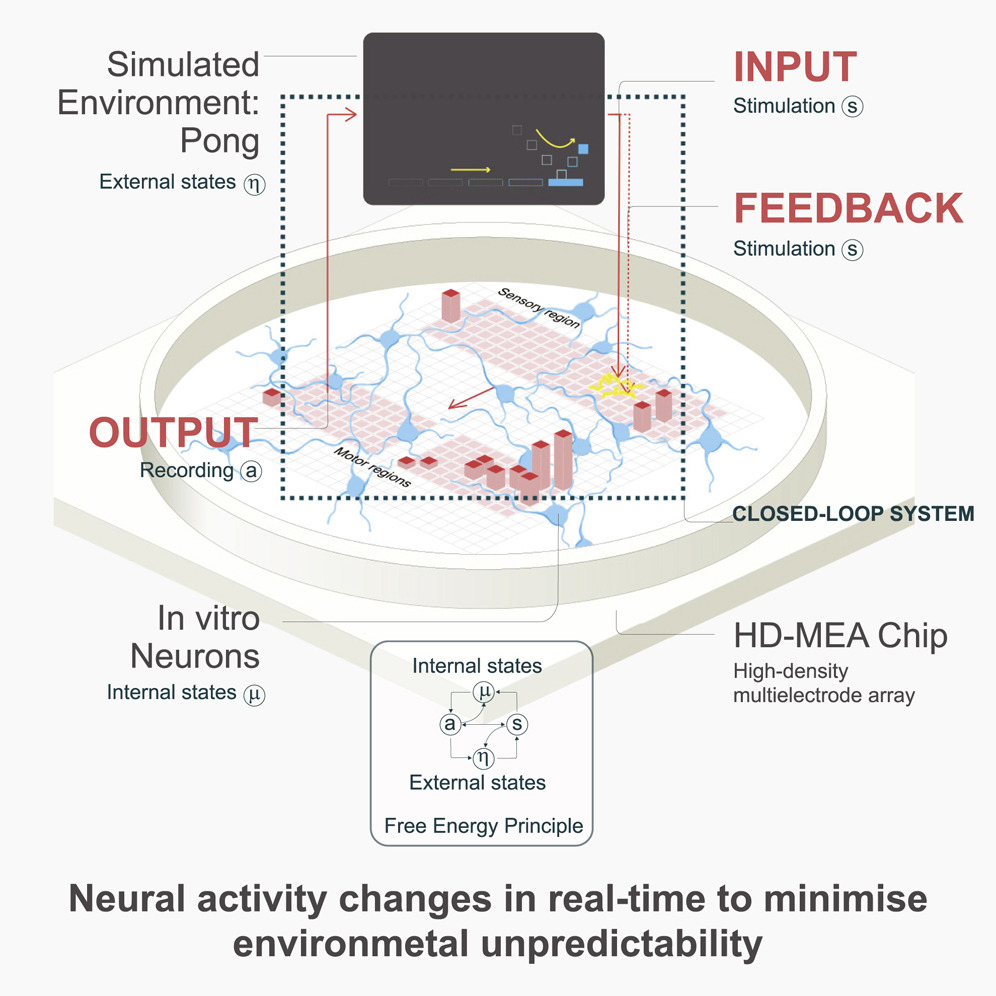

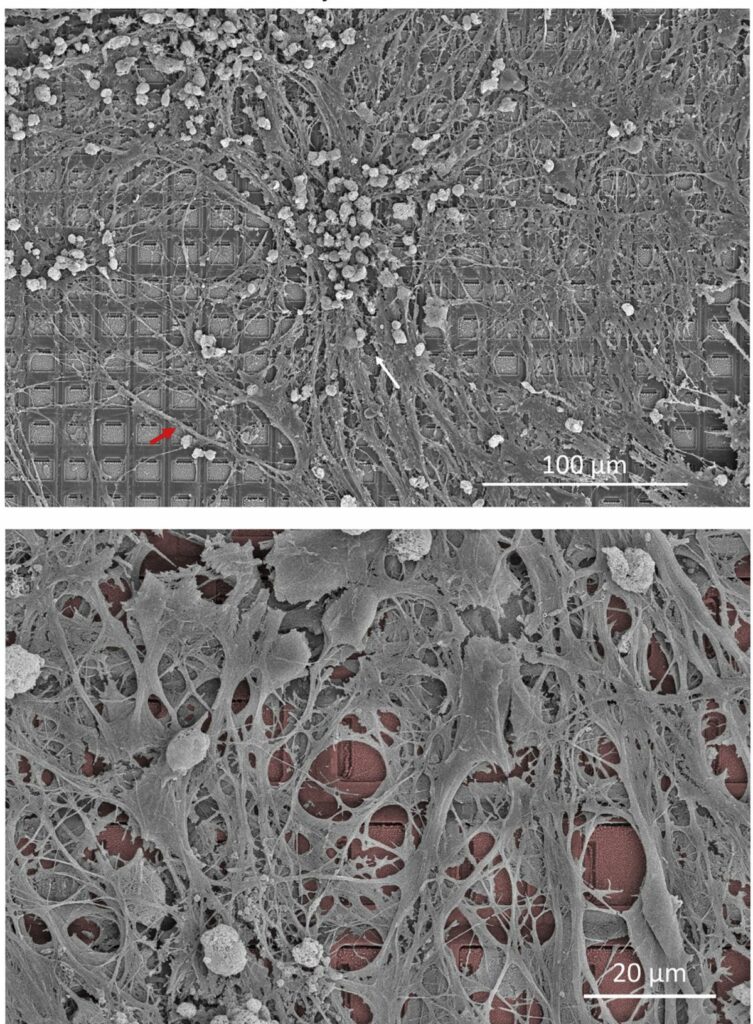

This one comes via the latest issue of Neuron courtesy of an Australian outfit called Cortical Labs, where Brett Kagan and his buddies grew a neuron culture in a dish and taught it (more accurately, spurred it to teach itself) how to play Pong. Some of you started sending me the link a couple of weeks back, and I’ll admit at first blush I thought the whole thing was a step backward. Neuron cultures playing Pong in 2022? Weren’t we talking about hooking them up to power grids and stock markets over ten years ago? Weren’t researchers from the University of Reading using them to control little robots back in 2008? But I followed the links, and the title, “In vitro neurons learn and exhibit sentience when embodied in a simulated game-world”, caught my eye.

Signs of sentience, you say.

Okay. Show me what you got.

What they’ve got, as it turns out, is a nifty little proof-of-principle in support of the Free-Energy-Minimization model I was chewing over last April. Back then it was Mark Solms, forcing me to rethink my assertion that consciousness could be decoupled from the survival instinct. The essence of Solms’ argument is that feelings are a metric of need, you don’t have needs unless you have an agenda (i.e., survival), and you can’t feel feelings without being subjectively aware of them (i.e., conscious). I wasn’t fully convinced, but I was shaken free of certain suppositions I’d encrusted around myself over a couple of decades. If Solms was right, I realized, consciousness wasn’t independent of survival drives; it was a manifestation of them.

But the fundamental point that ties this school of thought together is right there in the name: Free Energy Minimization. Self-organizing complex systems are just plain lazy, according to FEM. They aspire to low-energy states. If they’re the kind of system that acts in response to input from an external environment, the way to keep things chill is to keep them predictable: know exactly what’s coming, know exactly how to react, live on autopilot. Surprise is anathema: surprise means the environment isn’t doing what you expect. You have two choices when that happens: either rejig your predictive model to conform with the new observed reality, or act on that observed reality in a way that brings it more into line with your predictions. If you’re a weather simulation, you might update your correlations relating barometric pressure and precipitation. If you’re an earthworm, you might move away from an unpleasant stimulus that’s pushing you out of homeostasis.

In each case you want to minimize energy costs, and that means minimizing the difference between what you expect to happen and what actually does. Consciousness exists in the space between those two things. The wider the difference—the greater the surprise—the more “conscious” the response. Conversely: the more accurate your model, the lower the informational entropy and the less awake you are[1].
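For readers who want the arithmetic: in information theory, the “surprise” of an observation is usually measured as surprisal, −log₂ p, where p is the probability your model assigned to what actually happened; Friston’s variational free energy is an upper bound on this quantity. The sketch below is a toy, not the FEM formalism itself. It assumes a made-up binary world in which one outcome turns up 90% of the time, and compares a model that never updates against one that revises its estimate after every observation (Laplace-smoothed counts, an assumption chosen for simplicity).

```python
import math
import random


def surprisal_bits(p: float) -> float:
    """Surprise (self-information) of an event the model assigned probability p."""
    return -math.log2(p)


def mean_surprise(observations, learn: bool) -> float:
    """Average surprise per observation for a model predicting 0/1 events.

    learn=False: the model stays stuck at 50/50 forever.
    learn=True:  the model updates a Laplace-smoothed estimate after each event.
    """
    ones, total = 1, 2  # Laplace prior: pretend we've already seen one 0 and one 1
    running = 0.0
    for x in observations:
        p_one = ones / total if learn else 0.5
        p_obs = p_one if x == 1 else 1.0 - p_one
        running += surprisal_bits(p_obs)
        if learn:
            ones += x
            total += 1
    return running / len(observations)


rng = random.Random(0)
# Hypothetical environment: the "ball" arrives high 90% of the time.
world = [1 if rng.random() < 0.9 else 0 for _ in range(5000)]

fixed = mean_surprise(world, learn=False)   # exactly 1 bit per event
learned = mean_surprise(world, learn=True)  # approaches H(0.9), roughly 0.47 bits
print(f"fixed model: {fixed:.3f} bits, learning model: {learned:.3f} bits")
```

The stubborn model pays a flat bit per event no matter how regular the world is; the learning model’s average surprise sinks toward the world’s own entropy. Only the first of the two FEM options (rejig the model) is shown here; the second, acting on the world, is the one the Pong setup exploits.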

And right there: that’s your motivation. You don’t need pain or pleasure centers. You don’t need to program specific imperatives or win states. The tendency to “low informational entropy” is an intrinsic part of the system. That’s what the FEM lobby claims.

Which means that if you’re a neuron culture spread across an electrode array like peanut butter on toast, and some of those electrodes feed you information about whether a ball is hitting a paddle, while others take instructions from you about how to move that paddle—and if you receive a predictable signal when the ball hits the paddle, but a burst of random static when it misses—you will learn, on your own, to minimize the frequency of those bursts of surprise. You will learn to play Pong.

That’s what Kagan et al. did. They let “Dishbrain” grow over an array of electrodes: some arbitrarily defined as motor nerves, others as sensory nerves. The sensories fed the culture signals corresponding to paddle/ball dynamics; the motors picked up the culture’s commands on paddle motion. Kagan et al. set Pong in motion, shocked or stroked Dishbrain as appropriate, and waited.

Dishbrain figured it out in five minutes.

Well, sort of. The paddle intercepted the ball significantly more often than random chance would predict. That’s not to say it ever became a black belt at Pong: there’s a lot of daylight between better than random and world champion, and in some cases Dishbrain performed barely better (occasionally even worse) than one or another of the control cultures. I’m guessing the impressive videos presented in the paper, while described as “representational”, were cherry-picked.

Still. Dishbrain learned to hit the ball, in some cases apparently anticipating where it would arrive before it even hit the backboard. A puddle of neurons, finding itself in a virtual game environment with unknown rules (without even so much as the “maximize score” instruction granted to that StarCraft-beating, headline-grabbing DeepMind AI you’ve read about), organized itself on the fly to act in a way that minimized unpredictable input. Score one for FEM.
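The Pong loop can be caricatured in a few lines of Python. This is emphatically not Kagan et al.’s model or the FEM mathematics: it assumes, purely for illustration, that a burst of unpredictable static jostles the culture’s wiring (here a hypothetical paddle rule, `paddle = w * ball_y + b`), while predictable feedback leaves that wiring alone. A control run gets the static whether it hits or misses. Nothing in the loop ever rewards a hit.

```python
import random

PADDLE_HALF_WIDTH = 0.1  # hypothetical: the paddle covers +/-0.1 around its centre


def run(trials: int, kick_only_on_miss: bool, seed: int = 1) -> float:
    """Toy 'culture' whose entire wiring is a paddle rule: paddle = w * ball_y + b.

    Assumption (not the paper's model): unpredictable static scrambles the
    wiring a little (a random kick to w and b); predictable feedback does not.
    Returns the hit rate over the second half of the run.
    """
    rng = random.Random(seed)
    w, b = rng.uniform(-2, 2), rng.uniform(-1, 1)
    late_hits = 0
    for t in range(trials):
        ball_y = rng.random()                        # where the ball arrives
        paddle = min(1.0, max(0.0, w * ball_y + b))  # paddle stays on screen
        hit = abs(paddle - ball_y) < PADDLE_HALF_WIDTH
        if t >= trials // 2:
            late_hits += hit
        if not hit or not kick_only_on_miss:
            # "Burst of static": jostle the wiring, clamped to a bounded range.
            w = min(2.0, max(-2.0, w + rng.gauss(0, 0.2)))
            b = min(1.0, max(-1.0, b + rng.gauss(0, 0.2)))
    return late_hits / (trials - trials // 2)


feedback = run(20000, kick_only_on_miss=True)  # static only after a miss
control = run(20000, kick_only_on_miss=False)  # static no matter what
print(f"hit rate, miss-contingent static: {feedback:.2f}; control: {control:.2f}")
```

Because only misses shake things up, the rule drifts until it lands on wiring that rarely misses, and then stays put; the control keeps wandering and its hit rate stays low. Whatever mechanism real neurons use, that is the shape of the argument: minimize surprise, and competence falls out as a side effect.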

As for the claim that Dishbrain is “sentient”: predictably, it’s proven controversial. Kagan et al. use a particularly narrow definition of the term, “responsive to sensory impressions through adaptive internal processes”, attributed to Karl Friston (a high priest of FEM, who also happens to be one of the paper’s authors). Others have bristled at the term, since it generally connotes subjective experience. Dean Burnett out of Cardiff Psychology School isn’t willing to go beyond “thinking system”. Even Kagan admits that Dishbrain shows no signs of consciousness.

Personally, I think they’re playing it a bit too safe. Sure, there’s no hard evidence that Dishbrain is “awake” in the commonly understood sense, but then again there’s no hard evidence that you’re awake, and not just a sophisticated iteration of Google’s LaMDA in a meat chassis. A few years back PNAS published a paper that made a pretty good case for insect consciousness: insects might not have the specific brain structures associated with consciousness in us mammals, it said, but they have structures that perform analogous functions. They acquire information from their environment; they monitor their own internal states; they integrate those two data sets into a unified model that generates behavioral responses. Many argue that it’s that integration that results in subjective experience. Vertebrates, cephalopods, and insects are all built to do that in their own way, so it stands to reason they’re all phenomenally conscious (unlike, say, nematodes). Dishbrain also embodies those three components (in a rudimentary form, certainly, but perhaps not that much simpler than you’d find in a bristletail). Who’s to say that it isn’t conscious, even in the wider qualia-based sense?

There’s excitement in these findings. The idea that all self-organizing networks have at least one “motive” baked in is a revelation (to me, anyway). But that’s the beginning of inspiration, not the end. What do you do with that insight, as a science fiction writer? What are the consequences that can be explored narratively?

How can all of this go wrong?

Well, here’s something: all motives are not created equal. The universal motive accruing to self-organizing systems is Predictability, not Survival or Reproduction. So take Dishbrain. Take a head cheese. Put it in an environment that generates predictable feedback not when it paddles a ball but when it throttles its nutrient supply, or otherwise degrades its own integrity. Reward it for self-harm; watch it commit suicide.

What might that look like if you scaled it up to a human brain?

We may not even have to speculate. There are people out there who serve, if not as real-world examples, at least as real-world analogs. It’s not a perfect point-for-point mapping: we have actual brains, and that means brain stems and amygdalas and evolved agendas that do tie directly to survival and reproduction.

And yet people exist who offer a glimpse of how such a hack might manifest, people who do seek to physically compromise themselves. They experience the unshakable conviction that one of their body parts doesn’t belong to them, that it is alien, that it needs to be removed. They’ve been known to try sawing off the offending limb, or blowing it off with a shotgun. One soul tried for decades to damage his leg enough to force an amputation, finally succeeding after immersing it in dry ice.

And when these people succeed in losing the offending arm or leg—they feel happy. They feel that they have finally become who they truly are. They feel whole.

The clinical term is Body Integrity Dysphoria[2], and while it’s extremely rare, researchers have been able to tag certain tentative neurological correlates. Diminished skin conductance response distal to the desired amputation point, for one thing. Reduced gray matter in the superior parietal lobule, for another.

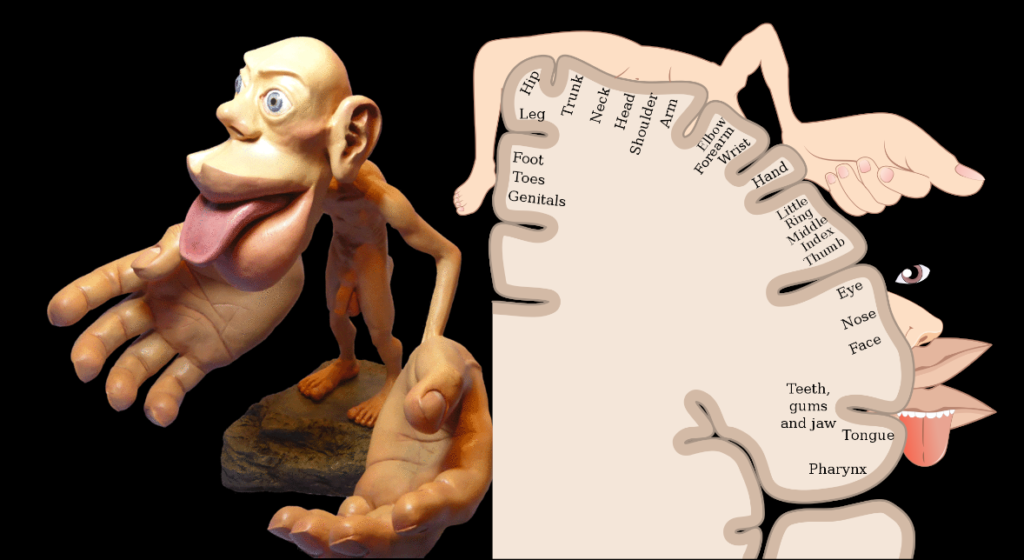

Interesting thing about the superior parietal lobule: it’s part of the somatosensory cortex.

You remember the somatosensory cortex, aka the Penfield homunculus: that strip of brain that maps the body in terms of sensory and motor processing. And you know about Phantom Limbs, which result from the fact that even after a limb has been amputated, the corresponding part of the homunculus—the map of that particular somatic territory—persists in the brain. As long as that part of the switchboard is still firing, a person will feel sensation from the corresponding limb regardless of whether it’s still attached. (It has also been commonly observed that the parts of the strip that map feet and genitals butt up against each other; leakage between the two might explain why foot fetishes are the most common sexual kink. But I digress.)

To me at least, BID seems like nothing so much as a bizarro Phantom Limb Syndrome, where the brain—instead of registering that something’s there when it isn’t—somehow registers that something isn’t there (or at least, shouldn’t be) when it is. And if the one syndrome results from a piece of the map being there when it shouldn’t be, maybe the other results from a part of the map being missing (reduced gray matter, remember?) when it should be present. In both cases, the internal model of the self-organizing system is at odds with incoming data. In BID the system takes action to reduce that dissonance. To eliminate the surprise. To minimize informational entropy.

Move the paddle; remove the limb. Maybe the same thing, when you get right down to it.

All speculation, of course. That’s what we do here. Then again, with fewer than 500 cases on record, hard data are not exactly thick upon the ground. And the limited MRI results we do have seem consistent with Penfield’s involvement.

What might a suicidal Dishbrain look like if you scaled it up onto Human architecture? Well, we’ve been playing around with ways to program neurons for decades now. Transcranial magnetic stimulation. Focused ultrasound. Sony even filed a patent a couple of decades back; who knows how far they’ve come since then?

Say they pull it off, learn how to tweak the somatosensory cortex. (That’s what they were aiming for: a way to plant input directly into the brain, without having to go through those messy eyes and ears and noses. They were pitching it as the ultimate game interface.) Imagine, twenty minutes in the future, we all have Sony headsets talking to our homunculi. Imagine someone hacks them to erase certain parts of that map, induce Body Integrity Dysphoria on command.

The thing is, arms and legs are not the only limbs we have. The neck is a limb too.

I wonder how comprehensive Sony’s liability insurance might be.

-

This relationship, by the way, inspired me to write a story about hive minds and all the catastrophic things that are bound to go wrong if Neuralink works the way Elon Musk wants it to. I’m told that story has caught the eye of one of Neuralink’s cofounders, which is especially remarkable given that it hasn’t been published yet.

-

If you’re feeling a sense of déjà vu here: yeah, the parallels to gender dysphoria and transitioning are pretty striking. The experts opine that BID is a related syndrome, but nonetheless a distinct one.

Interesting, for definitions of interesting that are like finding that the Thing exists and one of your own is now a monster. In this case it’s our minds: the thing we call consciousness is not what we think it is.

H P Lovecraft would have a field day.

> the thing we call consciousness is not what we think it is

I can tell you for free: it’s a fabrication. A wonderful, beautiful, empowering illusion… until it jitters.

Nasty.

> H P Lovecraft would have a field day.

Indeed. He should tag-team with Oliver Sacks.

Senpai noticed us!

Good, ’bout f-ing time. I’m not saying the minions have been jonesing, much, but there’s dents in their Skinner cage about a quarter lights deep.

> What might a suicidal Dishbrain look like if you scaled it up onto Human architecture?

Too easy: Nov 8. You’re welcome.

I dunno. I think the Nov 8 crowd might have fewer per-capita neurons…

With most of those neurons not even in use. And yet, they vote.

Nevertheless, today’s GOP face a true existential problem. Radicalizing their own voters through ritualized self-slaughter is a bold move, Cotton, but even with assistance of gerrymandering, voter suppression, and recounts probably fallen off the back of a truck, they’re really riding the razor in terms of raw numbers. Kill too many too soon and “Pop!” goes their full-fascist putsch.

Luckily, technology rides to their rescue: PongBrain™! Simply convince their ranks of loyal lickspittles to donate a chunk of spare forebrain, perhaps in trade for something they can actually use such as the right to bully a trans kid to suicide, pop that unimpressive gray blob in a jar, immortalize it, bestow upon it full personhood and voting rights of a true US citizen, and BAM! Instant guaranteed GOP base, forever. If a few pickled neurons can already wiffle a virtual bat, imagine with a little more practice they can juggle a Diebold black box.

…

Once that base is secured, the party can swiftly proceed to swell those ranks just by scraping out the medical waste bins around local hospitals every week. They already fetishize the not-yet-alive and the already-dead (while utterly despising everyone else in the middle), and their own God is already the world’s leading abortion provider by a sordid mile (around 1:3 pregnancies).

So why not streamline the whole process and eliminate the old 18-year waiting period entirely? Recruit as the new Party faithful those that cannot yet think for themselves and never would! Everyone wins. Everyone that matters. Heck, everyone that is matter, shortly. The GOP shall never lose power again†—it’s literally their perfect electorate!

Pong today, thousand-year GOP tomorrow. Remember, you heard it all here first.

Anyway, I’m just off to buy hot stocks in glassware and culture manufacturers, BRB.

—

† (or at least as long as they never lose power…)

I suppose one man’s bullying is another man’s saving from a deranged child chemical castration and mutilation cult.

Provided that “another man” is an ignorant idiot, absolutely.

Slow-adjusting “exploiters” with strong perineuronal nets take a good long while to catch up to any radical shifts in scientific and/or medical thinking. Or indeed any radical shifts in any data coming from their environment. Many will die before they do.

That stubbornness is literally their natural role – they’re a hedge against missteps.

Of course, when the environment starts changing fast, they pose quite a serious risk to adaptation on the level of whole populations…

Found the TERF/TrumperHumper.

Frankly sticking a male-wired brain in a female body or a female-wired brain in a male body is terribly small beans to that deranged mess we fondly call dear old Mother Nature.

Biology discovering marvelously inventive ways to be an absolute bag of dicks to anything complex enough to possess a pulse is a frequent talking point for this blog. Alas, I’m guessing “Ji” (not hir real name: far too sexually ambiguous) is not a regular reader in these here parts.

The ridiculous radish could also look up “blood libel” sometime—as if the rest of us don’t see exactly what they’re doing. Although I’m personally guessing that’s its whole point: blunt and shrivelled.

Actually, I didn’t know what blood libel was myself until you mentioned it.

So the pizzagate pedophile thing doesn’t even have a redeeming whiff of originality to it…

Oh my sweet summer chile.

Hahaha! Oh hell no.

It’s a kind of group behaviour that seems to be wired to emerge from that section of the population whenever they’re sufficiently unhappy. The outfit that it wears doesn’t really matter, just as long as it pushes certain very basic emotional buttons, the ones that promote being good at inter-tribal violence. Hence in the early 21st century they’ve gradually zeroed in on this formula of linking anyone on the opposing political side to pedophiles (the best villain you can get in the West today!) via trans people, gender non-conformists, and generally anyone who seems to threaten the heteronormative nuclear family, which of course must be worshipped without question if you don’t want to be out-bred and subsequently slaughtered by other tribes. See also: right-wing politicians openly promoting lies about schools letting their students “identify” as animals. Other examples jump out readily just as soon as you’ve got your eye in.

As late as 2015, we thought we’d seen the last of this monster as a real force in the “enlightened” West following WW2. The years since then have been educational.

That person read and understood at least half of your comment. They were probably reading all the comments.

Someone who would read and understand any of this blog and its comments, and yet remain clearly incapable of rational thought on certain topics, is rather scarier than a common TERF / MAGAt / rabid social conservative.

I suppose that it could also be an ill-disciplined contractor spook, here to keep an eye on our Dr due to his “anti-American sentiment”.

That whole comment is a minor masterpiece of satire. Kudos!

Regarding Neuralink: with the current state of computer security, I certainly am not trusting them with my brain.

I’ve actually had recent cause to look on in horrified mistrust when it comes to a lot of recent techbro ventures. And yes, Neuralink is definitely up there.

The current state of computer security?

Welcome to the 20th century; you must be new here!

Historical computer systems were at least simpler, and not connected to other ones quite as much.

Speaking of Maelstrom, I see it just came out on Audible last week.

Is the cut you get as an author of an Audible credit even a noticeable blip on the income radar?

No idea. I’ve got the advance for the audio editions, so there’s that. Whether it earns out is another issue (I don’t think the dead-tree versions ever did).

“if you receive a predictable signal when the ball hits the paddle, but a burst of random static when it misses—you will learn, on your own, to minimize the frequency of those bursts of surprise.”

I am missing something. I’m a gelbrain. You want me to dislike random static and learn to modify myself so as to minimize static. Why do I care to do so? Shouldn’t there be some mixing and culling to force evolution?

Energy management: random static takes up more energy to process and untangle than a predictable, orderly signal. Even a gelbrain has only so much energy to spare.

At least that’s how I understood it. I’ve been wrong before.

But it seems that caring about energy use, or about staying alive, would require an instruction or motivator. Alternately, I have often read about evolutionary systems that use many entities, cull bad performers, randomly mutate the survivors, and test performance again. Like nature, red in tooth and claw.

No, that’s exactly what I found so cool about this work. I’m totally with you on the whole why should it care front. But the point these guys are making is, energy-minimization is an inherent property of self-organizing systems; it’s a kind of motivation that comes baked in as standard equipment. I’m not up on the underlying math (Solms presents a lot of it, as I recall), but the sense I get is that it’s a kind of water-flows-downhill scenario. Nobody asks what motivates the water to do that; it’s just an inevitable result of potential-into-kinetic energies.

Dude! That sounds kinda mystical. Water runs downhill because of gravity. Energy-minimization is built into the ground rules of physics like gravity is? Seriously?

Anything that helps reduce useless neural firing will result in less need for food. In a Malthusian world, Darwin ensures that this helps the body reach reproductive age. Alternatively, how useful a survival mechanism is having the equivalent of constant epileptic fits?

Brains as prediction machines has a decent history and I am fairly onboard with the idea, especially when it is coupled with the idea of minimizing difference between reality and prediction.

It also makes sense that good prediction allows the brain to relax attention, only returning when reality doesn’t match. I’m sure we have all had that experience driving a known route and only paying attention when something out of the ordinary occurs, like a detour.

I hadn’t heard anything about insect consciousness, but if so, Doug Hofstadter might say: “Told you so”.

The tendency to move to a low-energy state, certainly. It doesn’t sound mystical to me; it sounds like high-school physics. A stationary ball at the top of a hill has high potential energy and low kinetic; a ball rolling down the hill is converting potential into kinetic; a ball at rest at the bottom of the hill has low kinetic and low potential energy. Same thing happens when water flows downhill. If energy minimization à la FEM seems arcane, maybe try “path of least resistance”.

Coincidentally, my reply, which I decided to sleep on, was going to be something along the lines of “why should the process ‘care’ whether the gelbrain cares or not?” The gelbrains that don’t manage their energy management adequately (heh) aren’t really a factor in this discussion on account of being just gel.

Maybe the whole problem needs to be flipped around – maybe the “care” part is simply an evolutionary adaptation that develops in, say, Hominid #549,278 after it sees the bones and corpses of the previous 549,277 hominids who ate from the same berry patch and died moaning and shitting themselves and, instead of following in their footsteps, it suddenly decides “Fuck that noise, I’m gonna go find a different patch of berries.”

Hmm. Something wrong with the logic here. A gelbrain playing Pong is in a much higher energy state than one that just sits there or dies. In fact, death is the best way for an organism to minimise its energy state and maximize entropy.

Thinking.

Pretty sure that evolution weeded apoptosis out of your average neuron a long time ago, so death is off the table. So’s passive inactivity, since these things are programmed to forge connections and pathways (just as a muscle cell is built to contract or a pancreatic cell is built to produce insulin). The FEM people aren’t just being gratuitous when they say “self-organizing systems”.

So you can take the building of networks as a given, given the type of meat involved. The question that follows is, how to minimize network costs? It’s more expensive energetically to process random, ever-changing input (which entails building whole new pathways) than predictable input (which can use the same ol’ pathways over and over).

(In fact, as I recall one of the experimental control cultures was passive, insofar as it received no external feedback to shape its behaviour one way or another. It still formed networks because that’s what those cells are built to do— but since nothing was forcing it to choose between different activity modes, it just kinda lay there.)

That’s my take on it, anyway.
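One way to make the “random input costs more than predictable input” point concrete is to borrow compression as a stand-in: a standard compressor needs only a few bytes to describe a repeating signal, but can’t shrink random static at all, because every new byte is a fresh surprise. The sketch below uses Python’s `zlib`. Treating compressed size as a proxy for the wiring (and energy) a neuron culture would need is an analogy assumed here, not a measurement of anything in the paper.

```python
import random
import zlib


def description_cost(signal: bytes) -> int:
    """Bytes zlib needs to encode the signal: a crude proxy (by analogy only)
    for how much 'new wiring' an input stream would demand."""
    return len(zlib.compress(signal, 9))


rng = random.Random(42)
n = 10_000
predictable = bytes([1, 0]) * (n // 2)                # same pattern, over and over
static = bytes(rng.getrandbits(8) for _ in range(n))  # fresh surprise every byte

print("predictable:", description_cost(predictable), "bytes")
print("static:     ", description_cost(static), "bytes")
```

The repeating signal can reuse the same description over and over (the “same ol’ pathways”); the static has to be spelled out in full, byte by byte.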

I do think the reasoning in this blog relating consciousness and surprise is quite compelling.

As for this motivation / energy state stuff, I see that the Dishbrain paper in Neuron is open source, so I am gonna work my way thru it before running my mouth anymore. Should have proceeded in that order to begin with.

Neuronal apoptosis is important developmentally, so they are capable of it. However, regulation of the process doesn’t look simple and I’m not sure a petri dish would provide the appropriate hormones etc.

Oh, right. During pruning, I guess?

Yeah. Insulin-like growth factor’s involved, as I recall.

The first part reminded me of Iain Banks’ throwaway line about the Ultimate War not being between good and evil but Interest and Banality.

This is food for thought, Peter.

The thing about technology is that we overestimate the impact at short term and underestimate it at long term.

But what if the horrific hack-event of Sony’s ultrasound tech you describe never happens? What if this tech spreads widely and some day humans are not even capable of distinguishing genuine rewards and suicidal impulses from implanted ones?

I think you pointed this out previously in regard to how we’re acting against the environment: society has been rewarding self-destructive actions for quite a long time.

In a way, you could almost argue that this is our default state anyway. We don’t really have control over the thoughts that pop into our heads at any given time; that’s something the routines down in the basement are working on, and every now and then they just send us a memo. So if you define “us” as the conscious self, everything we experience is “implanted”—by a different part of the brain.

Good old fashioned anorexia nervosa seems to fit that scenario.

Just our luck that the sci fi author that is turning out accurate predictions is Peter Watts. Oh well, at least we get a bit of a warning.

Speaking of which, I’m seeing a lot of hard sci-fi in the media: some Netflix cartoon about 3D-printed astronauts, Love, Death & Robots adapting a lot of classic sci-fi short stories (Swarm by Bruce Sterling is in the third volume), so when are we seeing some actual honest-to-god Watts adaptations on screen? (Not that the Blindsight pseudo-trailer we got wasn’t awesome.)

Well, last I heard Neill Blomkamp was still working on something centered around my vampire concept. The Blindsight screenplay has gone to third draft and they want to renew the option again. There’s recently been yet another noncommittal nibble on Starfish. Plus a couple of other things I could mention but for the NDAs stuffed into my mouth like ball gags.

You wouldn’t know it yet to look at the list of new releases, but the past couple of years have actually been pretty good to me on that front.

> The Blindsight screenplay has gone to third draft and they want to renew the option again.

Nicolas Winding Refn as director or it didn’t happen.

Oooor Villeneuve as director and Woodkid as composer (if you haven’t listened to his Desierto soundtrack yet, it’s just about time, this shit slaps). Blindsight deserves scope.

Villeneuve does terrific scope. Arrival is high on my film list. But Blindsight isn’t a scope story, it’s a dirty basement barroom brawl. Dark, claustrophobic, paranoid, brutal, grinding, saturated, tortured. The walls crawl. And just as you think you are finding your feet, it tilts all in with a Glasgow kiss.

That is not Villeneuve headspace at all. And Tarkovsky’s long dead, so probably not available.

So, Nicolas Winding Refn. Mad as a sack of rabid hamsters. Watch Only God Forgives and Bronson and then tell me I’m wrong.

(Although if old Nick is too busy washing his hair or something, maybe Ben Wheatley? See High Rise. Solid script is important, but choice of director will make or break its telling. You do NOT want a freaking Ad Astra abomination.)

Music-wise? That’s a toughie. Critical too. Some godforsaken crash of Ligeti and Blanck Mass as starting point would be my uneducated first guess, although I’m sure that can be improved on. Maybe pipe it through Max Richter and Hans Zimmer with a cocaine/laudanum chaser and see what comes out just this side of screaming?

Addendum: There’s another powerful reason for getting a Winding Refn or Wheatley instead of a Villeneuve, as talented as the man is.

In Hollywood’s peculiar biology, energy measures in stacks of green paper. A low-budget director can rip out an effective psychological horror genre flick on well under ten million. With low energy consumption it can survive not being a box office monster and yield long-life returns as a cult favorite.

In contrast, even a mid-budget Villeneuve space opera runs 50 mil easy. At that price point, those investors need safe fast guaranteed returns… and before you know it you’ve got cowboys bopping in space aeroplanes, not Peter crawling around your amygdala as is its birthright.

Paradoxical, but if you want to see a good Peter Watts movie you need to cut its budget to the bone. Pump it full of cash, all you’ll get is George Lucas.

(Avec apologies for the slight derail.:)

I do not know this Refn guy. But I would not turn up my nose if Villeneuve came knocking. Or Del Toro, for that matter, although I continue to mock his stance on Optimism being the “radical option”.

Or Ben Stiller. Seriously. I mean, forget Zoolander: have you seen “Severance”?

As for the musical soundtrack, there’s really only one choice. The Shaggs.

> I do not know this Refn guy. But I would not turn up my nose if Villeneuve came knocking.

For who among us would not? I’m sure Denis’d do a fine job too, though it would be a dramatically different movie from the Blindsight that’s looped in my own head since I read it.

As for NWR, watch Only God Forgives on a very large screen. (Seriously: on a small screen it looks ass.) Like Kubrick butt-dialed contrast to eleven. Not a perfect film, but not easily forgotten. Also your perfect Siri and Valerie right there: Gosling is inevitable but KST is terrifying.

Once you’ve survived that spectacle, for a quieter, more contemplative character study, his earlier Bronson is excellent. (Bonus Tom Hardy as Cunningham, natch.)

I’ve not seen The Neon Demon (on account of me being a giant wuss) but I suspect that might be your kind of psychological horror. All very Elizabeth Báthory. It also looks stunning. (I bet Fanning would smash it as James; though I can’t help wanting Caity Lotz from her turn in The Machine, another very Wattsian flick.)

> Or Ben Stiller. Seriously. I mean, forget Zoolander: have you seen “Severance”?

Very underrated director. (Also, gas fight FTW!) Ashamed to say, I’ve not seen Severance, ostensibly because I’m too cheap to get AppleTV but mostly cos your review of it gave me nightmares.

Though honestly, whoever you get, just lock ’em in a cage for a week and starve ’em, then feed ’em nothing but raw red meat until it’s a wrap; you’ll be golden.

> As for the musical soundtrack, there’s really only one choice. The Shaggs.

A bold strategy, Cotton, let’s see if it pays off!

Hmmm, okay, one Shaggs track—we did declare an inchoate bandwidth war on Rorschach, after all. A full album though and you’ll find Tommy Wiseau directing, and then you’ve no-one to blame but yourself.

No, please, don’t let that happen, even if you stay a mid-to-down-list HARD-SF cult author for the rest of your life; I wouldn’t watch anything that Villeneuve auteur-for-hire touches. Ew!

You’re down with The Shaggs, though, right?

LOL!

For sure!

Enough of that Richter/Zimmer aural triviality, please

There is a new show on Amazon, The Peripheral, based on William Gibson’s book (which I haven’t read), featuring a device that looks like what Sony patented. Three episodes in, they haven’t explored it much in terms of consciousness, but I keep wondering if there will be something interesting on that front.

Not if they stick to the novel.

Then again, they’ve significantly diverged from the novel so far, so who knows?

Emotion as evaluation. A constant intraorganismal conflict between the neuronal imperative to obtain accurate predictions (Truth) and the biological imperative to not fucking die off (Survive).

Checks out. I suppose there’s also a choice there, as much as there can be one, and perhaps the only meaningful one we ever get to make. “Which camp are you on?”

They’re not inconsistent with each other. I think the way the theory gets it to work is positing that the brain also “predicts” that it’s alive and has enough food and such, and the easiest way to satisfy those predictions is to actually take actions to make them true.
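A toy illustration of that last point, under loudly stated assumptions: one made-up internal variable (call it an energy reserve), a fixed prior that predicts the reserve sits at 1.0, and an agent that shrinks its prediction error by acting (foraging) rather than by revising the prior. The function name, gain and drain rate are all hypothetical; this is a cartoon of the idea, not Friston’s or Solms’ actual equations.

```python
# Hypothetical toy: "acting to make a prediction come true".
# The agent never updates its prior; it only acts to close the gap between prior and state.

def run(prior=1.0, state=0.2, steps=50, action_gain=0.3, drain=0.02):
    """Return the trajectory of an internal variable the agent 'expects' to be at `prior`."""
    history = []
    for _ in range(steps):
        error = prior - state            # prediction error: "I expected to be fed by now"
        action = action_gain * error     # act (e.g. forage) in proportion to the error
        state = state + action - drain   # world responds; metabolism drains the reserve
        history.append(state)
    return history

trace = run()
```

The reserve climbs from 0.2 and settles just under the predicted 1.0, at prior minus drain/gain (about 0.93): the prediction of being fed gets satisfied by doing something, not by believing something different.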

Storms are out of control. 19 tornadoes last night and today. Reminds me of something…

Per capita extreme weather damage and deaths are down from start of 20th century.

But you are, of course, like almost everyone, a victim of natural gas industry propaganda.

(To explain: natural gas producers have been caught hyping renewables, because actual engineering and weather constraints mean a big renewables build-out has to be backed up by standby thermal plants for the times when the wind doesn’t blow and the sun doesn’t shine much, like the entirety of the last month in Europe.)

In an ideal world we could omit renewables and just replace the burner modules in thermal power plants with buried molten salt reactors. In practice, though, those reactors will end up as backups for the bird and bat death machines, and in 20 years, if we’re still alive, maybe someone will figure out we can just keep the nuclear reactors running 95% of the time and save the hapless flying critters.

I’d like to see a citation for that. And a legit comparison would also have to account for things like changes in building standards over the same period.

Well that’s a cheery thought. Something something real life Death Note something something.

Also, in case you didn’t know, someone recently made a fairly popular YouTube video about Blindsight: https://www.youtube.com/watch?v=9ZzQACC7-C0

Yeah, I saw that. Nice to get some love.

Regarding neural nets: in the brief period since the last blog post, I’ve had the impression that a serious paradigm shift is happening in image processing. Several AI-driven models and engines have sprung up on the internet and people use them lavishly for entertainment, which also means they will become more prominent in media and culture. Artists fear for their bread, worried that the AI will steal their work.

On the other hand, I have also seen models that adapt a synthetic voice to a particular speaker. With the advance of graphics software like UE5 and After Effects, I fear I have practically given up on trying to distinguish fiction from reality in sufficiently low-resolution video sources.

https://www.youtube.com/watch?v=9Zxq554cvzs

https://www.youtube.com/watch?v=3EvGNqC-Pw8

In any case, it seems the sophistication of fakery is only going to increase, especially once people learn to combine the two. IMHO another couple of years and it will carry over fully to higher resolutions, and then I can finally give up on the news in any form.

What’s your opinion on this development? Is there any chance of maintaining a (somewhat) single, universal scientific reality, or will it be shattered into many mismatched pieces like a broken toy?

From a purely theoretical (and admittedly unhelpful) perspective, our baseline sensory perceptions have always been deepfakes: an internally-generated FPS informed by chemical signals, potentially no more reflective of “reality” than a desktop icon is of the underlying binary.

In terms of being able to distinguish AI-generated deepfakes from brain-generated ones, though, I can take some comfort (as usual) from Saturday Morning Breakfast Cereal:

Most of the technology involved in this is not that old (a year or two). TTS and “deepfake” face-swapping have been doable for a while; if large organizations were interested in using them to fake videos they already would have been doing it. I suppose it would be hard to tell.

It’s not “if” but “when”, IMO. “Large organizations” usually have a “reputation” to keep, which in practice means they are just too cumbersome to adopt the most recent developments in technology. That doesn’t mean they won’t do it in the near future, but when they do… you’ll never see it coming.

As a matter of fact, you have already missed a big one.

That there is your reason for hope (or despair, depending on what hypothetical outcome you preferred).

These fakes come to you through pipes no wider than the ones the “real world” comes through. But the real world comes in a never-ending stream. You can move your head to get a different angle on it. You can ask it questions and get answers. That ultimately adds up over time to a super high resolution.

Reflex/reflect dichotomy. Another pattern which is written all through nature (although only in biology and up, AFAIK). Reflect is too slow, reflex is too easy to fool. Mix the two, and you become a hard mark. You can hold your own in the arms race for a while.

The fakes will fall apart easily – if just a bit too slowly for the social reflexive response – as the scrutiny of them increases. On the day when they don’t, you’ve got “a difference that makes no difference is no difference”. Grant the fakes personhood, because what other choice do you have? By now there ought to be a healthy emerging body of sci fi stories where the protagonist literally began as an NPC in a video game, then got just a bit too sophisticated, and wound up having to work and pay taxes.

The same principle works in reverse. E.g. that attention seeker who made a big stir by claiming that he had made facial recognition software successfully detect gay people… (begin fine print) from their profile photos on gay dating sites (end fine print). In the street? In homophobic areas of the world…? Better proof required, mate. Far more dangerous is the probability that people in such areas don’t read the fine print, and think that it actually works. Corrupt and repressive authorities don’t require it to really work; they just require it to point a finger at the people they don’t like, and for enough of their support base to believe that the finger speaks true.

In principle, these systems can develop to a point where they actually can reliably identify gay people in the street… But by then their existence will have wrought a hell of a lot of other changes. Gay people being detectable in the street might no longer be a problem of great concern.

True, with a few teeny tiny oversights:

H. sapiens is still fundamentally wired for discriminating lions vs zebras out on the savannah. That old code could afford a strong bias towards false positives back then: better to run unnecessarily now and again, than fail to run once and be eaten. Starved of lions to match now, it’s an itchy hungry menace.

“A lie is halfway round the world before the truth has got its boots on.” Disputing false information demands conscious time and effort. A lie that is ultimately debunked, but that in the lag time suckers enough people for long enough to cause harm, is a successful lie.

A significant percentage of people want to be fooled, and will bend over backwards embracing pleasing lies over unwelcome truths. They are capable of moving their heads yet choose to hold them still. And in that lag they are leveraging those lies to “rationalize” (excuse) their own escalating response to asserted threats.

Much of our modern input is not received through direct perception but recorded and rebroadcast via external third-party channels where a slight wiggle of our heads has no effect on the original camera’s angle. We can compare and contrast different feeds for gross differences but our fine perception remains two-dimensional.

Nor is disregarding those third-party inputs a viable option. Insisting only direct experience counts is the terrain of narcissists and nihilists, who care for and about nothing but Self. We live in a global society: anyone who willingly shoves their head in the sand deserves all the kicks in the ass.

…

Therefore, what you say sounds great in principle, but humans are dumb, selfish, conceited, and fallible, and ridiculously easy to game by anyone who puts their mind to it. See also: Gaslighting, Firehosing, “Stop the Steal”, big-L Libertarianism, Communism, Fascism, Antivax, Alt-Med, Bitcon, Religion, and every other grift and scam on the planet.

Your entire truth-checking system is only as strong as its weakest component—which is you. A synthesized fiction itself, possessed of its own willfully selfish motives and biases.

And that’s not even including actual neurological dysfunctions—psychoses, agnosias, personality disorders, etc—that involuntarily blind individuals to their own errors.

Just close your eyes at night and dream, to see what nonsense you can freely believe in the moment.

GLWT.

—

“The first principle is that you must not fool yourself, and you are the easiest person to fool.”—Richard Feynman

Indeed, but so what? You can send information at infinite speed, too, if you’re happy with a 50% error rate.
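The quip can be made exact with Shannon’s capacity for a binary symmetric channel, assuming the “information” is a stream of bits, each flipped with probability p:

```latex
\begin{aligned}
C(p) &= 1 - H_2(p), \qquad H_2(p) = -p\log_2 p - (1-p)\log_2(1-p) \\
H_2\!\left(\tfrac{1}{2}\right) &= 1 \;\Longrightarrow\; C\!\left(\tfrac{1}{2}\right) = 0 \text{ bits per symbol}
\end{aligned}
```

At a 50% error rate each received bit is a coin toss, so an infinite symbol rate times zero bits per symbol still carries nothing.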

Reflex/reflect. It’s the same old problem, moved from the individual into the realm of population dynamics. Twitch response has its place, but there’s a reason why we have the capacity to sit and spin our mental clockwork on things. Don’t assume that it’s either/or. We are not going to discard either response.

You’ve taken the metaphor too literally. Instead of thinking about watching a DeepFake of Elon Musk talking about how he loves drinking his own piss, think about looking at transcripts of various conversations with LaMDA, as our Dr Watts did for a blog post earlier in the year. One guy questioning LaMDA fooled himself quite effectively. Dr Watts was in the “reflective” network for that one, and was less convinced.

The human capacity for willful self-deception predates deepfakes by quite a few millennia. Lying to ourselves has always been easy AF, false data or no.

It’s being honest that’s hard. Not knowing if you should even trust your eyes and ears any longer makes it harder still. Some of that distrust is natural crawling paranoia; some the successful industrial product of neo-Soviet firehosing raised to global scale. Even a convenience, perhaps: an easy nihilistic out. Just don’t trust anything any more—problem solved!

Well, fine, as long as you don’t want to count for anything more while flapping about on the mud; but then you’re just lapping round to lying to yourself again: “All this shit’s not my fault.” Bleh, shoulda stuck to invertebrate. Waste of a spine (slipped discs and all).

Alas, I can’t offer the good answers, just good film clips.

As for Elon drinking his own piss, I think it safe to say dude is already well into late-stage Howard Hughes, the 21st-century version of sitting in a darkened room full of bottles of urine. Unfortunate, but. Guy has genuinely revolutionized spaceflight and is a leading disruptor of entrenched ICE interests (capacity of our electrical distribution networks notwithstanding), but his failure to lash down his own mania is undoing him hard. No deepfakes required; 100% self-inflicted. See above. #SelfMemed #SoItGoes

You have it backwards; it’s not Elon who is deep into a Howard Hughes-style psychic meltdown, it’s the entire professional managerial class (you’ve read your Burnham, right?) who are slowly going insane.

Elon is just an asshole autist who is extremely pissed that various entrenched interests are hampering his business efforts, and decided to go into politics with his Twitter acquisition.

Hence, the entirety of the liberal narrative control machine that crucially depends on Twitter decide to start hating him, which is why you hate him and think he’s crazy. Because they’re not bad at their job, and you are insufficiently nasty and paranoid of a person to be non-susceptible to guilt-tripping and social desirability bias.

“Hence, the entirety of the liberal narrative control machine that crucially depends on Twitter decide to start hating him, which is why you hate him and think he’s crazy.”

Ah, another drive-by nonsense dump. I’d ask you to elaborate or offer a rational argument for this claim, but based on prior experience, I’d be wasting my time.

Elon is not crazy. He’s deeply insecure and willing to do stupid tricks to finally attract the attention he craves, but which has somehow eluded him. His Twitter acquisition has secured him a willing audience. That the audience consists principally of bots and a few garbage people makes no difference to Elon.

Is the anglerfish relevant to this discussion? The male gives away its body to become a sperm-pumping parasite (probably not a perfect term for this condition…) on the female’s abdomen. More predictable with lower energy you cannot find.

Why stop with anglerfish? “Sperm-pumping parasite” is the perfect descriptor of a lot of people I could name…

I just burst out laughing in a meeting. Well done!

“Sperm-pumping parasite”

“a lot of people I could name…”

Fiblet-time. Also best band name.

Thanks for reminding me I picked up Solms’ book The Hidden Spring after reading the other post and I haven’t finished it yet.

“Predictability” is (probably) more powerful than you think.

I don’t have any links handy, and you probably saw this anyway, but research in “xenobots” has apparently been revealing that the information minimisation principle alone is enough to make cells do all kinds of seemingly fancy tricks. The one which particularly grabbed me is self-healing in damaged tissues. Cells automatically try to reduce environmental unpredictability by surrounding themselves with others “of their own kind”, which has the effect of striving to minimise tissue surface area, which closes wounds. Or so I have seen it alleged.

Entropy appears to be so fundamental to our “viewpoint” on the physical universe that we probably should not be surprised to find that all the most basic scaffolding of everything that we see happening is built from it. Like, you know, time, and things “happening” at all. Our world is defined by being full of surprises that we don’t like.

My main question concerning constructs like pong-playing DishBrain is this. How many nested layers of Plato’s Cave do you need before something analogous to pain emerges? Is one enough?

If we’re not in pain, we’re not alive. Right?

> Put it in an environment that generates predictable feedback not when it paddles a ball but when it throttles its nutrient supply, or otherwise degrades its own integrity. Reward it for self-harm; watch it commit suicide.

That’s just an overly elaborate local-minimum trap. You get them in any energy-minimisation system, including any kind of life and any kind of AI. The physical universe is full of them, as are neuroscience and psychology. The only way out – universal truth, this, at least in our universe – is a big kick from outside, which changes the landscape underneath you. Real-world examples are everywhere, in every kind of structure you can think of.
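A minimal sketch of the trap and the kick, assuming plain gradient descent on an arbitrary one-dimensional “energy” landscape, f(x) = x^4 - 3x^2 + x, which has a shallow well near x ≈ 1.13, a deeper one near x ≈ -1.30, and a barrier near x ≈ 0.17. The landscape, learning rate and kick size are made up for illustration; nothing here models DishBrain itself.

```python
def f(x):
    # hypothetical tilted double-well "energy" landscape
    return x**4 - 3 * x**2 + x

def grad(x):
    # derivative of f
    return 4 * x**3 - 6 * x + 1

def descend(x, lr=0.01, steps=2000):
    # plain gradient descent: always roll downhill, never uphill
    for _ in range(steps):
        x -= lr * grad(x)
    return x

stuck = descend(2.0)           # settles in the shallow right-hand well and stays there
kicked = descend(stuck - 2.0)  # an external shove past the barrier, then the same rule again
print(round(stuck, 2), round(kicked, 2), f(kicked) < f(stuck))
```

Started at x = 2, descent parks in the shallow well for good; more local optimisation never helps. Only the outside shove lets the same rule find the deeper minimum. (Here the kick moves the ball rather than reshaping the landscape, but from the optimiser’s point of view the effect is the same.)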

Actually constructing a self-suiciding DishBrain would be no more interesting than the robot art piece which turns itself off when you turn it on (remember that?) Unless… DishBrain found a way to avoid suicide when placed in this situation. That would be really surprising!

Here’s something that you may not have known about Body Integrity Dysphoria.

I went to a lecture a few months ago where a neurosci academic was discussing this disorder. An audience member asked: can people who have amputated an “alien” limb due to BID get phantom limb pain in the amputated limb?

The answer is, apparently… Yes! Talk about not being able to catch a break…

Much more interesting to me are people who are apparently able to induce BID in themselves due to a dire survival situation. Everyone has heard about animals chewing off their own limbs to escape traps, but there is now at least one really well-documented example of a human doing this – the story of Aron Ralston.

Oliver Sacks’ The Man Who Mistook His Wife for a Hat provides a popsci trot through some of the varied delights of Reality Simulation v1.0 component malfunctions. You might want to crawl back under the bedsheets when you’re done.

Of course! But will they remove their hand from the box?

Nope, because they don’t understand the instructions.

Give them the instructions in a form that they can understand, and I’ll lay a bet with you that the majority will not remove their “hand” from the box.

The simplest Gom Jabbar test that we have a name for is XOR.
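One reading of the XOR remark (my interpretation, not necessarily the commenter’s): XOR is the textbook case that a single linear threshold unit, effectively a fixed stimulus-response reflex, cannot get right on all four inputs, whereas one hidden layer can. A minimal sketch, with the classic perceptron rule and a hand-wired two-layer net as hypothetical stand-ins:

```python
def step(z):
    # linear threshold unit: fire if weighted input exceeds zero
    return 1 if z > 0 else 0

XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

def train_perceptron(data, epochs=100, lr=1.0):
    # single unit trained with the perceptron rule; it never converges on XOR
    w1 = w2 = b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in data:
            err = target - step(w1 * x1 + w2 * x2 + b)
            w1 += lr * err * x1
            w2 += lr * err * x2
            b += lr * err
    return lambda x1, x2: step(w1 * x1 + w2 * x2 + b)

def two_layer(x1, x2):
    # hand-wired hidden layer: an OR unit and an AND unit, then "OR but not AND"
    h_or = step(x1 + x2 - 0.5)
    h_and = step(x1 + x2 - 1.5)
    return step(h_or - h_and - 0.5)

single = train_perceptron(XOR)
acc_single = sum(single(*x) == t for x, t in XOR) / 4
acc_two = sum(two_layer(*x) == t for x, t in XOR) / 4
```

The single unit can never get more than three of the four cases right, since no straight line separates XOR’s positives from its negatives; the hidden layer gives the output something to override.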

Lol. You’ve not seen The Grudge, I take it?

My dude, I barely make it through the opening credits to Scooby-Doo.

Dammit. Well, there goes my somatosensory cortex theory.

Surely that’s not real BID, is it? Deciding to bite the bullet and amputate a limb because the only alternative is death isn’t the same thing as regarding that limb as some alien tumorous thing that never belonged attached to you in the first place. Or did Ralston et al actually reframe their own appendages as horrific in the heat of the moment?

Unless it’s a false memory, I saw at least one interview where he claimed exactly that, in pretty stark terms. But if it really happened then he’s probably said it more than once.

Doesn’t sound like it. Ralston made a rational, voluntary decision following a cost-vs-benefit analysis: hack off a part of the organism to save the remainder. He may have trotted through a variety of fibs—“I can escape”, “help is on its way”, “this won’t hurt so much”—on his way to accepting “this arm is boned”, but all of it is conscious choice.

BID is involuntary in its nature: there is discrepancy between the brain’s own definition of physical self and the observable meat actually stuck to it. That’s not reasoned; it’s an incorrect reading produced by faulty low-level wiring.

Sacks’ book has a chapter titled “The Man Who Fell out of Bed”, which Wikipedia summarises:

Involuntary delusions (fixed beliefs) are terrifying: we cannot reconcile the mismatch between what we perceive and what we know to be true because some part of that wetware is itself misfiring. The distress is 100% real, the product of a mind that knows it is sane and so can find only one valid explanation to fit all the facts: the world is falling apart, gone insane, everyone else turned against us.

For something repairable like acute psychosis, court-mandated medication and extended hold on a locked ward hopefully brings the poor sod back down. (Though still extremely unpleasant for everyone involved.)

For intractable cases, it’s their entire life going forward. Sacks’ patient knows that leg he sees is not his, and no amount of connective tissue will ever convince him otherwise. Imagine being intimately stalked by such alien flesh for the rest of your life. Being able to regard its meat as an unpleasantly intransigent parasite would be downright reassuring. His brain denies him even that comfort—he cannot conceive it.

Makes my skin crawl.

—

Do not proffer sympathy to the mentally ill; it is a bottomless pit. Tell them firmly, “I am not paid to listen to this drivel—you are a terminal fool!”—William S Burroughs

Emphasis mine.

That’s rather a bold statement, don’t you think? Which definition of “conscious” are you using?

Why can’t part of the brain which you – or Ralston – thinks of as unconscious and involuntary be the part which worked out and accepted “this arm is boned”?

Look through other extreme survival stories by climbers. There’s quite a striking common thread – something in the basement makes the decision to do whatever it takes to survive, and then does whatever it takes to get the bits upstairs to play ball. Now, there is a lot of survivorship bias here, of course.

Correcting my misleading phrasing:

A billion years of evolution have wired in some powerful self-preservation instincts. *H. sapiens* is hardly alone in this. Ralston didn’t want to die in a hole so did what he calculated necessary to survive.

That’s not BID though, which is a physical deficiency in the brain’s wiring: there’s a hole in the sensory homunculus that declares “these are all the known parts of me”. Sacks’ patient was no more capable of recognizing his leg than a person with prosopagnosia can recognize your face.

Had Ralston actually experienced BID, he could not have escaped from that hole. His brain would assess all the available information and conclude that his body is tethered, yet be utterly unable to identify by what.

Scratch that last paragraph. While agnosias can blind victims to all sorts of things from faces to half the world around them, BID sufferers recognize the alien attachment to Self, going to great trouble to destroy it themselves.

From the CNN article I linked above, here’s the study that identified the missing wiring.

Oooh, thanks for that link!

As we all know, there’s nothing quite like fear for promoting new connections in the wiring.

Connections that were missing in the first place, and connections that are there but effectively inhibited by new ones, will produce identical behaviour.

Here, I’ll give you something to replace it.

Let’s leave the meat for later, and start with a robot. Take a deep learning system, the job of which is to learn to pilot an awkward robot through a complex environment. Give it only the most basic “score” (survival drives buried deep in the firmware) to start from, and do the rest of the bootstrapping with a reward system based on predictive coding – the algorithm is rewarded for correctly predicting the data that it will get next through the robot’s senses. Of course, the deep learning box also has its virtual hands on the piloting controls, so it can take actions which might make the environment more predictable; but it’s prevented from just staring at a wall by those survival drives, and by the “exploration” term in the algorithm, which of course is initially set quite high.

When you set this robot off, if you’ve built it right, the deep learning box should find and latch onto all of the most obvious repeating patterns in the environment, and get pretty good at predicting them. But the environment is complex and it has those niggling survival and exploration drives; so it is still getting surprised quite often. Its predictive performance plateaus.

Take a second deep learning box and stick it on top of the first one. The job of the second box is to learn to predict when the first box will get surprised; this function also forms an “environment” which such a learning system can learn to navigate around. The second box also has some piloting controls at its disposal. As the performance of the first box starts to plateau, and the second box gets good at predicting where the simple patterns that the first box is reliant upon will break, the second box can intervene and override the controls in a manner which reduces the surprise to both systems.
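A toy sketch of the first two boxes, with every detail an assumption of mine rather than part of the thought experiment: the “environment” is ten discrete places, three of which (7, 8, 9) emit pure noise; box 1 is a running-average predictor of what each place reports; box 2 keeps a running average of box 1’s surprise at each place and, when box 1 wanders toward somewhere box 2 expects a big surprise, overrides the move. Names, thresholds and rates are hypothetical.

```python
import random

N_PLACES, NOISY = 10, {7, 8, 9}  # hypothetical toy world: three places are pure static

def reading(place, rng):
    # predictable places always report their own index; noisy ones report random values
    return rng.uniform(-10, 10) if place in NOISY else float(place)

def run(use_overseer, steps=2000, lr=0.2, threshold=1.0, seed=0):
    rng = random.Random(seed)
    predict = [0.0] * N_PLACES          # box 1: predicted sensor reading per place
    expect_surprise = [0.0] * N_PLACES  # box 2: predicted box-1 surprise per place
    late = []
    for t in range(steps):
        place = rng.randrange(N_PLACES)  # box 1's exploratory move
        if use_overseer and expect_surprise[place] > threshold:
            # box 2 overrides: steer to whichever place it currently rates least surprising
            place = min(range(N_PLACES), key=lambda p: expect_surprise[p])
        r = reading(place, rng)
        surprise = abs(r - predict[place])
        predict[place] += lr * (r - predict[place])                      # box 1 learns
        expect_surprise[place] += lr * (surprise - expect_surprise[place])  # box 2 learns
        if t >= steps - 500:
            late.append(surprise)
    return sum(late) / len(late)  # mean box-1 surprise over the last 500 steps

baseline, stacked = run(False), run(True)
```

Comparing `baseline` (box 1 alone) with `stacked`, the overseer’s interventions cut late-run surprise sharply. Note what the sketch leaves out: there is no “that was me” signal at all, which is exactly the problem the next paragraph raises.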

Remember though, the first box is still learning from what it “sees”, and still expects the data from the senses to be consistent with the commands that it issues to the controls. The interventions from the second box will constitute a whole new layer of surprise in the environment, and one which we’d like it to ignore, because the two boxes are supposed to be on the same team. The first box is already specialised to pick up certain patterns in the environment; if it starts to adjust its expectations based on what the second box is doing, then it will start to lose that specialisation. What do we need?

We need a “that was me” signal from the second box to the first, telling it not to be concerned when the controls are overridden. “Stop learning from what you see, until I give the controls back to you”. And the “overseer” layer will come to expect that when it sends this signal and overrides the controls, it will see input consistent with the robot moving in the fashion that it predicts.

Repeat this process to stack as many more overseer layers on top of each other as you have the resources for and can get to work. Take the awkward robot at the base of the whole stack, and stitch it together with a bunch of other awkward robots with a similar architecture; this forms a new compound robot, so you’ll need a new deep learning box to pilot that – by selectively overriding the controls of all the “limb” robots, according to its own survival imperatives – and more overseer layers on top of that one. I’m sure you get the picture by now.

Somewhere in this tree of robots, introduce damage. Cut the link which provides the “that was me” signal – or cut the link which overrides the next layer down for control of the limb. What happens?
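One way to run that damage experiment in miniature, again a toy built on my own assumptions rather than anything from the efference-copy literature: box 1 learns a one-number forward model (how far the robot moves per unit of its own command) by least-mean-squares; box 2 silently takes the controls 30% of the time; and the “that was me” signal simply tells box 1 to skip learning on those steps. All names and parameters are hypothetical.

```python
import random

def learned_gain(link_intact, steps=5000, lr=0.05, override_rate=0.3, seed=1):
    """Box 1's learned estimate of displacement-per-unit-command (true value: 1.0)."""
    rng = random.Random(seed)
    gain = 0.0
    for _ in range(steps):
        own_cmd = rng.uniform(-1.0, 1.0)             # what box 1 asked for
        overridden = rng.random() < override_rate    # box 2 takes the wheel?
        actual_cmd = rng.uniform(-1.0, 1.0) if overridden else own_cmd
        displacement = 1.0 * actual_cmd              # true plant: gain of exactly 1
        if overridden and link_intact:
            continue                                 # "that was me": box 1 suspends learning
        error = displacement - gain * own_cmd        # box 1 credits the motion to its own command
        gain += lr * error * own_cmd                 # LMS update
    return gain

intact = learned_gain(link_intact=True)
cut = learned_gain(link_intact=False)
```

With the link intact the learned gain settles on the true value of 1. Cut the link and box 1 folds box 2’s moves into its model of its own body; the expected update balances at one minus the override rate, so the gain sags to roughly 0.7. A self-model that no longer matches the limb, in one variable.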

Compare: https://en.wikipedia.org/wiki/Efference_copy

That’s an interesting rationale, but I’m not entirely sure why the second machine (presumably based on the same architecture as the first) is capable of dealing with situations unpredictable to the first one. Couldn’t the first machine just develop more complex pathways with experience? Do we need a nested system here? Or are we assuming that the first-machine-unpredictable scenarios are so different that learning to deal with them would somehow degrade performance in the other scenarios?

Efference copies are cool. They get a call-out in a body-snatcher story I’ve got coming out over the next few months in Lightspeed.

Very good question! I’m not sure whether or not the actual answer is known for sure yet.

The explanation where “the first-machine-unpredictable scenarios are so different” is one that I find very plausible, and which I’ve noticed domain experts referencing in roughly these terms. Imagine that the predictable patterns form numerous clusters in the space of possible inputs, such that “stretching” one matcher to cover two clusters is very inefficient; whereas a layered system efficiently performs something analogous to wavelet decomposition on the input signal. Each sandboxed layer discovers a reversible dimensionality reduction transform which produces a low-entropy signal from the high-entropy input.
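To make the wavelet analogy concrete (an illustration of the analogy only, not a claim about how brains or deep nets actually do it): a plain, unnormalised Haar decomposition splits a signal into layers, each peeling off its own detail, and the whole thing is exactly reversible. The function names are hypothetical, and this simple version assumes the input length is a power of two.

```python
def haar_step(signal):
    # one Haar level: pairwise averages (coarse part) and half-differences (detail)
    pairs = list(zip(signal[0::2], signal[1::2]))
    avg = [(a + b) / 2 for a, b in pairs]
    det = [(a - b) / 2 for a, b in pairs]
    return avg, det

def haar_inverse(avg, det):
    # undo one level exactly: a = m + d, b = m - d
    out = []
    for m, d in zip(avg, det):
        out += [m + d, m - d]
    return out

def haar_decompose(signal):
    # keep splitting the coarse part; each level is a "layer" with its own detail signal
    details = []
    while len(signal) > 1:
        signal, det = haar_step(signal)
        details.append(det)
    return signal, details

def haar_reconstruct(coarse, details):
    for det in reversed(details):
        coarse = haar_inverse(coarse, det)
    return coarse

x = [4, 4, 5, 5, 8, 8, 9, 9]
coarse, details = haar_decompose(x)
```

For this patterned input the finest detail layer comes out all zeros, leaving one coarse value and three nonzero details to describe eight samples, and reconstruction is exact: a reversible transform that yields a low-entropy signal, in miniature.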

Another obvious possibility is that it’s a getting-there-from-here problem. Extending the architecture with new pathways whilst remaining functional is just far more difficult if the parts which are doing the extending are not separated from the parts which are doing the functioning, so that they can more easily vary independently. Same reason why an internal model of the “self” is such an obvious winning pattern in motile organisms. If all inputs connect independently to all outputs, then they all have to jump from one viable configuration to another viable configuration at the same time; hard for a learning (or evolving) system to do. Whereas if the inputs only connect to the outputs by going through a central integration area, then changes can happen on either side (or in the integration area) without the other parts needing to change simultaneously.

I’m glad you’re around and kicking, writing fit bits, and not being eaten by MRSA or rotting in a federal prison.

Me too.

But the decade is yet young.

I’ve got $5 riding on “Reciting How to Explain Pictures to a Dead Sea Hare as Tsar Bomba goes off in the background”, so don’t disappoint me, lad.

After watching the M3gan Android doll horror movie trailer I’ve decided I no longer want an Artificial Friend for Christmas. Please tell Santa.

Is it energy minimizing in just the short term or is there a lens for long term energy minimization? Winning the game would effectively minimize energy output in the long run?

You led me down the Mark Solms rabbit hole, and wow, that shit really left an impact. I can’t make much sense of Friston, as he’s in too deep on the maths, but his work on psychedelics and the “anarchic brain” is fascinating.

This led me to Michael Levin (who worships at the altar of the FEP) and introduced me to the terms “bioelectrics” and “morphogenetic space”: how cells are corralled into growing this or that limb. Any agent with purpose is sentient in his book (as I understand it), which broadens things well beyond insects.

Not sure where I’m going with this, but if people start losing limbs to BID, we ought to be able to grow back a more accommodating limb of choice before too long.

Sorry, but that’s simply not true. The most common sexual kink is BDSM, which is related to the evolutionary need of females to exist under patriarchy.

At least 40% of the population is into some or all aspects of it.

https://aella.substack.com/p/fetish-tabooness-vs-popularity?sd=pf

Feet don’t even make the top 10 according to a fairly well-populated survey by the infamous Aella (apostate fundie prostitute/camgirl who tried to kill herself with heroic daily doses of LSD for most of a year but somehow ended up more sane than the typical Harvard graduate these days).

That’s a very cool survey, and a shitload of work obviously went into it. (Also I was fascinated to see that the respondents regarded the murder kink as less taboo than pedophilia.) But Aella herself admits that the sample is significantly skewed along a bunch of axes (being a sex worker herself, with the audience that implies, you’d expect a disproportionate representation of extreme kink). Feet are definitely the #1 kink “for otherwise non-sexual objects or body parts” according to Wikipedia—admittedly BDSM focuses on sexual body parts, maybe that’s the difference?—although their source is the International Journal of Impotence Research. Make of that what you will.

Lubin > Fischer?

According to Aella’s survey, yeah. Her graphic (which you can be damn sure I have downloaded) shows that respondents tag “pre-puberty children” as 98% taboo, while “executions” only rate 92% and that perennial fave “cannibalism/meatslaves” comes in at 93.

Again, with appropriate caveats about sample complexion.

Aw c’mon, is nobody going to take that “evolutionary need” bait? It’s on such a shiny platter!

Have pity on that poor frustrated sub…

I’ll admit that line caught my eye. I just wasn’t quite sure how it was supposed to work…

Deliberately ignored the incel dogma opening. Ignoring it seems to have immediately spun the chud off into a rant about shadowy lizardmen cabals (see above).

The concept of the link between sentience and motivation has been bugging me lately too. I keep thinking about the common domestication syndrome in mammals (downregulated neurotransmitter pathways reduce reactive aggression and fear, and you get patchy coats and floppy ears for the same price). Are domestics less sentient than wild animals in absolute terms? Or more sentient in relative terms, because the instinctive/reflexive aspects of their behaviour are weakened, leaving the reflective mechanisms more dominant? And all this got me wondering whether psychopaths (who supposedly have underactive amygdalas and ineffective fear responses) are a kind of hyperdomesticated human (with the occasional side effect that they can kill without feeling fear or remorse).

Not sure what the state of the art opinion is on this one, but many people have wondered just how survivable psychopaths and sociopaths could have been in the hunter-gatherer world, under the iron reign of Dunbar.

They may well be a kind of parasitic or symbiotic variant that only became viable with the advent of settled civilisation. That would make them a “hyperdomesticated human” in a sense, or perhaps a kind of intra-species synanthrope.

My impression is that psychopaths are relatively immune to the manipulations of their own kind; if you have empathy, it’s hard to imagine/predict the behaviour of something that doesn’t. So a population of sociopaths would implode for lack of vulnerable prey. The only way a sociopath could prosper would be if the vast majority of those around it were normal empathic humans. The estimates I’ve read put the socio/psychopath population at 5-10% of the total.

Which, probably through sheer coincidence, is pretty much the same proportion you get for viable predator-prey populations out in the wild…

The quoted numbers do seem to bounce around a bit. Around 1% seems to be the figure commonly bandied about for full-bore Antisocial Personality Disorder. That said, it’s a spectrum disorder, with maybe up to 1 in 3 people possessing some traits, and no doubt further selected by and for in toxic societies. Plus APD has much in common with Narcissistic Personality Disorder, likewise characterized by lack of empathy, so the higher numbers may be encapsulating both.

If anything, though, NPDs are worse: while a psychopath will screw you because he can (opportunistic abuser), the narcissist screws you because she must (obligate abuser). Dodge the psychopath’s machinations and he’ll move along to find an easier mark. Deny the narc, and she will do everything she can to destroy you for that mortal insult you’ve committed upon her person.

And whereas true psychopaths are relatively rare and feared in popular culture, narcs are common as mud (more than 1 in 20) and perceived as silly and harmless.

Your vampires as a long-extinct subspecies of H. sapiens is a quaint and charming notion. In fact, they predate amongst us with frightening freedom, as a few hours reading r/raisedbynarcissists or the Cult of the Orange Shitgibbon can quickly confirm. It is a failing in our own theory of mind that we project onto them our own humanity, and continue doing so even after their behavior confounds our predictions. Be assured, such people do not return the favor.

Cluster Bs are a barrel of fun.

Predator-prey really doesn’t seem like the right dynamic for psychos and non-psychos.

Not only are they the same species, but psychos don’t directly eat other humans to survive. To get the necessities of life they depend heavily on the humans around them, in just the same way as every other human does. And they have to constantly mingle with their marks, sharing aspects of daily life, eating from the same table.

What psychos seem to do, which makes them different, is to try and exploit the empathy gap to get more energy for themselves. They try to suck up resources, and to undermine any competition that they have for those resources, even from amongst their closest conspecific allies. They don’t eat other humans; they use them.

These activities tend to backfire on them badly if they get detected for what they are by the group – indeed the concept of “fairness” itself (which goes a lot further than humans – think of those famous capuchin experiments) looks an awful lot like a defence mechanism against these guys. In the presence of such defence mechanisms, which our species presumably had from Day 1 given that even monkeys have them, the manipulative psychos would be at greater risk in smaller and closer-knit survival groups. And so, you’d think that the eras prior to the Neolithic Revolution would have been lean and dangerous times for these folks. (Maybe they were able to protect themselves with something like, just a wild guess here, religion…)

Is anything directly comparable to this relationship actually known in the animal kingdom?

Imagine a species of ant where 1 in every 20 workers habitually steals food from the nest and takes it away to a hidden stash somewhere outside. Mostly they make a show of pulling their weight, but they do a bit less than the others and take much more reward, as much more as they can get away with. Sometimes they get another worker alone and kill it, perhaps eating part of it. What causes them to have these deviant behaviours? (Perhaps a parasitic fungus, which needs to germinate in the stash of food the ant steals from the hive?) How does this behaviour persist, in evolutionary terms?

Why would we expect the ratios to match predator-prey ratios – and if they do happen to match, is that really just coincidence? Is this the sort of place where we’d expect coincidences, or would we look for a hidden variable?

Suppose there’s a hidden variable. Maybe it’s that hypothetical fungus (or some more subtle informatic equivalent)? Endoparasitism is next door to predation, after all.

Suppose that the “fungus” doesn’t actually kill the host individual; but it does starve some of that individual’s brethren – and if the infected ant is “discovered” by its fellows, then this is also fatal for it. So the presence of the behaviour hijacker does take a toll on the population nonetheless.

Suppose that there are ways in which the infected individual can actually benefit, not just from getting fat itself, but in terms of genetic fitness, from being predisposed to being hijacked in this way, such that the line between parasite and symbiote becomes very blurred. I don’t know whether anyone has answered the question of whether human psychos pick up a genetic benefit from their condition, on top of the individual benefits they get as long as they remain undetected.