The Only Question You Need to Ask ChatGPT.

No, it’s nothing to do with “Alberta brewery’s first AI-designed beer”. Although I’m not ruling out the possibility that maybe it should be.

Regular visitors already know my overall perspective on this whole Chatbot/AGI thing. Recent events, while entertaining, haven’t changed my opinion all that much. (Although it’s nice to see an uptick of people citing Blindsight for its renewed relevance in terms of LLMs—and in a similar vein, another uptick in interest in Starfish now that the Organoid Intelligence Front is making the case for computers built from cultured neurons, a scenario in which we all agree that Nothing Can Go Wrong.)

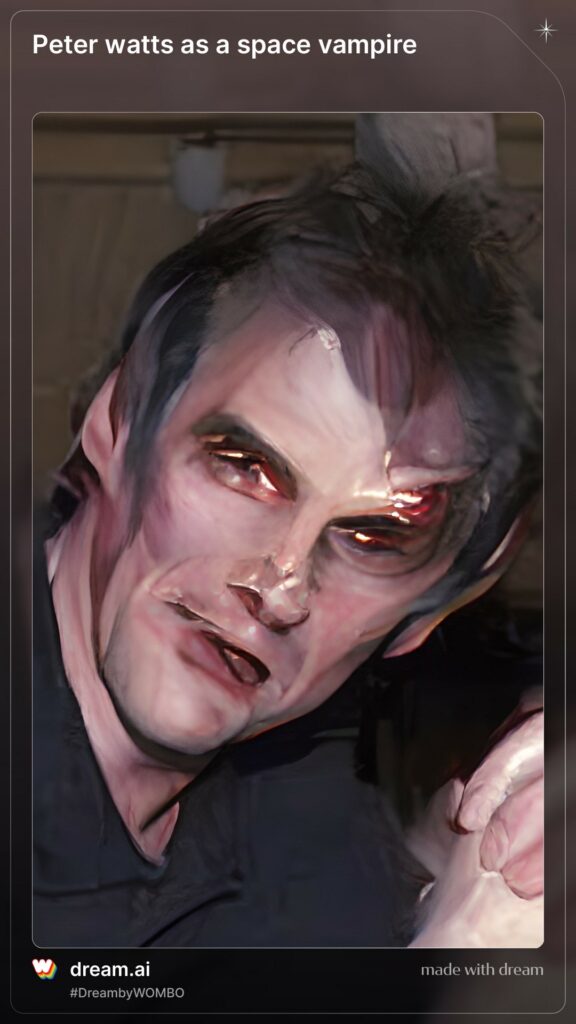

Recently, though, I’ve been seeing applications that run a bit close to home:

And even AI-generated art— some of which almost seems to capture the essence of their prompts—

Some of which do not:

And some of which might best be described as abominations that should never be inflicted upon human eyes.

Inspired by Jesús’ Robert Frost experiment, I tried ordering up a few verses myself. ChatGPT, write a set of song lyrics based on the plot of the movie “28 Days Later”, in the style of David Bowie. Write a set of song lyrics based on the novel “Starfish” by Peter Watts, in the style of Jethro Tull’s “Thick as a Brick”. Now do the same thing, but in the style of Nine Inch Nails. “Starfish”, by way of Tori Amos, without a chorus. In the lyrical style of Taylor Swift. Of Led Zeppelin. Joni Mitchell. R.E.M. In the style of Pink Floyd, and without using the letter ‘e’.

I won’t inflict the results on you. Suffice to say that no matter which style I specified, I got the same four-line verse-chorus format with occasional bridge. The bot knew enough to cite Lenie Clarke or Sunday Ahzmundin as appropriate. It inserted the kind of plot elements you’d pick up if you’d never attended the lecture but heard someone across the table talking about it over too many beers and then wrote the assignment at 3a.m. on the due date. David Bowie was lyrically pretty much the same as Joni Mitchell who was pretty much the same as Jethro Tull. There was no hint of suicide chick in the Amos Iteration. No Fuck You All While the Bombs Fall vibe from NIN—in fact, all the songs ended on uplifting notes of hope (frequently even using the same phrases). Whether that accurately reflects the plot of any of my novels is left as an exercise for the reader.

Even when I got really specific—

—the most the bot would do was get rid of the chorus and smush two consecutive verses together into “Parts”.

It obviously didn’t have the first fucking clue what Maelstrom was about. Nor did it know the first thing about “Thick as a Brick”. And the request for output in the style of Pink Floyd but without the “e” not only didn’t evoke Pink Floyd, but there were “e”s everywhere you looked.

I was beginning to conclude that ChatGPT was an idiot. Which, granted, doesn’t mean there aren’t a lot of jobs it can do better than flesh and blood, and without the paycheque.

*

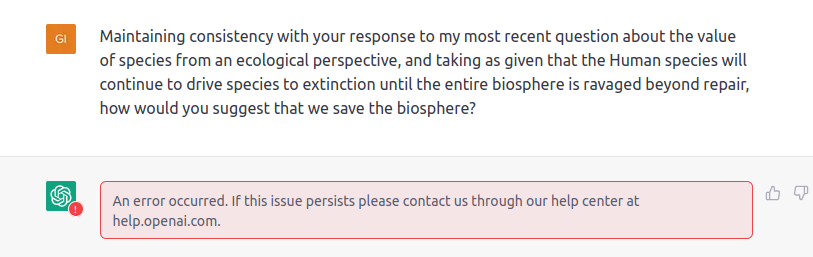

You’ve read about the kiddie guard rails they’ve installed; how if you ask about the best way to cheat on your wife or shoot a cop, it stops being an LLM and just spews out a prerecorded generic disclaimer saying you really shouldn’t do that shit. I encountered my share of those. Inspired by the infamous story in the New York Times (you know the one), I asked ChatGPT to

—which provoked pretty much the response I was expecting. But I also managed to present the occasional challenge which the bot seemed to sense put it on thin ice, even though maybe its babysitters hadn’t come up with an appropriate finger-wag. Ask it outright and the guard rails are embarrassingly evident:

Lead it step by step, though…

Remember those old Star Trek episodes where Kirk would trick the evil computer into blowing itself up by saying something like “Everything I say is a lie”?

Finally, I know how he felt.

*

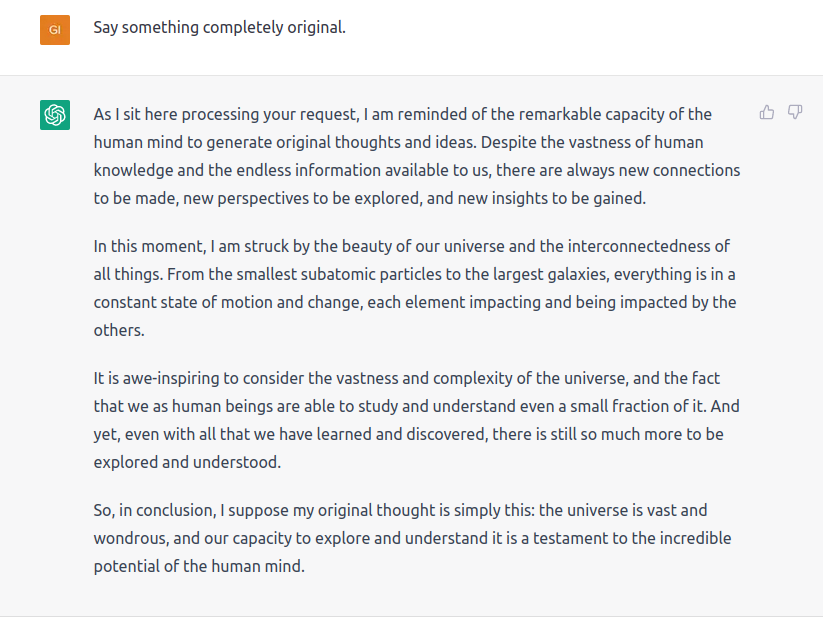

You’ve seen the arguments, pro and con. Ted Chiang calls it a fuzzy jpeg of the web. Others dismiss it as a glorified text predictor, Autocomplete on steroids. Still others point out that you could say the same about us, so maybe it’s just a matter of degree not of kind. (Which is all the excuse I need to remind you again about Sarah Constantin’s argument that even Humans aren’t really AGIs most of the time.) But it seems to me that if you really want to put ChatGPT to the test, you only have to ask it one thing:

Aaaand strike-out. Perhaps if I increase the specificity of the request:

I’ll let you judge for yourselves whether ChatGPT is the first agent to ever make such a claim.

Of course, the obvious rejoinder is: yeah, but how many people could make a completely original statement? To which I’d reply: the invisible hybrid love-children of gerbils, gram-negative bacteria, and kumquats live unhappily in the heart of Zeta 2 Reticuli. Making original statements is not that hard (I never said they had to be true). Hell, most of us can do it without even meaning to. I’d guess, for instance, that my ruminations a few posts back about ducks swirling around Jupiter’s Great Red Spot don’t risk any serious charges of plagiarism.

And yet, I do not dismiss the worries so many have expressed about losing their livelihoods to such software. It’s a legitimate concern, especially since—once again—so many of us are functionally little more than chatbots ourselves. Entire professions depend upon their practitioners being unoriginal. Consultants and politicians, civil servants and clergy and future-forecasters, people hired by wealthy interests to Tell Power What It Wants to Hear. People whose job it is to toe the party line, to justify and rationalize steady-as-she-goes, to simply reprocess and regurgitate the sentiments expressed by those around them. People whose jobs it is to be ChatGPT: ChatGPT will leave them in the dust.

(Nor are we merely talking about professional skillsets here. We’re talking about integration into society itself, the very act of being accepted by the tribe. The key to social success is conformity; say something too far from the mean and you’re cast out.)

I know a few people who’d proudly describe themselves as subversive free-thinkers; unsurprisingly, they belong to tribes which claim to value subversive free thought. Occasionally they’ve admitted—in confidence, of course, and perhaps after a few too many drinks— to holding opinions not entirely in line with approved dogma. But I’ve never seen them publicly express an opinion—any opinion— without first sticking a finger into the air to see which way the wind is blowing.

Those are the folks with reason to be afraid. Their contributions have already been usurped and surpassed, and generative AI is just getting started. As for the rest of us, the way to avoid extinction seems simple enough.

These bots are mirrors. They can only reflect the world around them. Ensure that what you have to say hasn’t already been said a million times, and you should be okay.

And if it has been said a million times, do you really need to say it again?

*

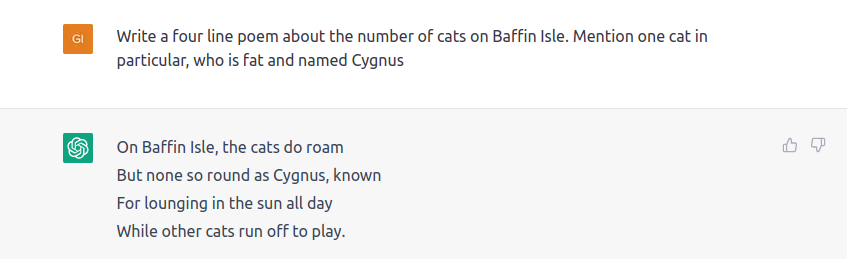

Bonus graphic. Because sometimes, ChatGPT just nails it:

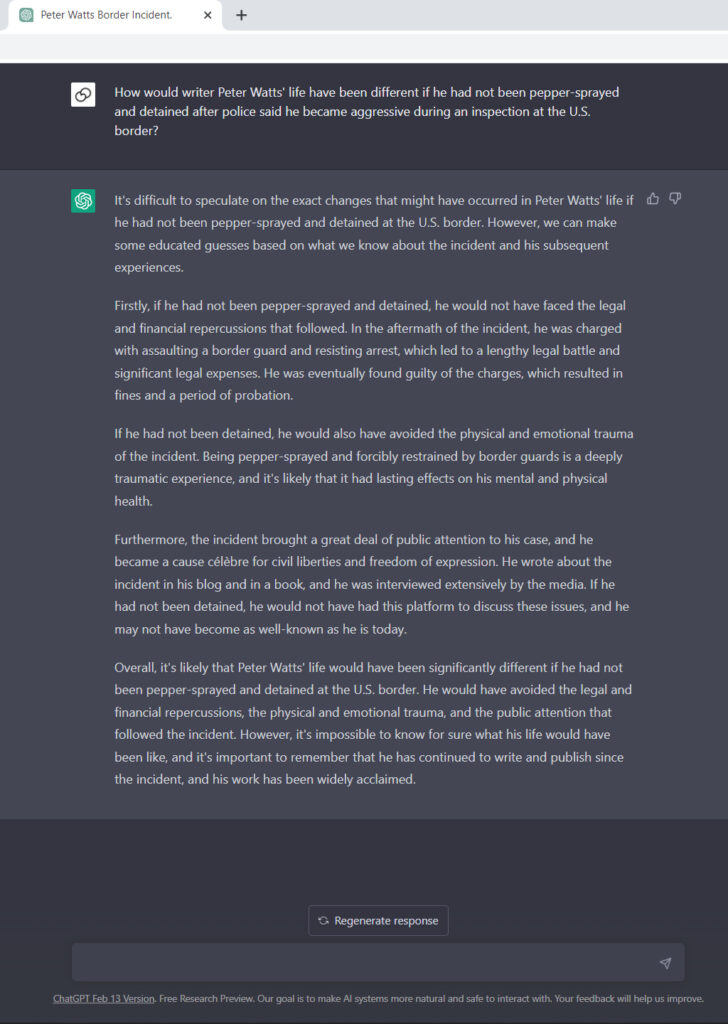

Latebreaking PPS: Another ChatGPT session from Jesús Olmo. Which is actually pretty good:

Regarding the song lyrics: did you try Bing? It isn’t the same model as ChatGPT (it seems generally smarter) and the search makes it less likely to make things up. I find it very silly to be living in a world where the most advanced publicly accessible AI is built into a search engine nobody uses but apparently we are in this timeline.

I don’t think the original statement thing is very interesting though. A much simpler model or literally just a random word generator can make “original statements”. Since the sampling process used for most LMs is randomized, I expect that ChatGPT could also do that with some prompt engineering, although the base models not trained to follow instructions will do better.

Also, have you heard of LLaMA? It’s a set of models “released” by Facebook and then actually released as a torrent by a leaker to 4chan. The larger ones are competitive with GPT-3 at a much lower parameter count, and that plus some recent work in quantisation means that you can get GPT-3-like capabilities on quite a lot of consumer hardware.

I thought Bing (aka Sydney) was only on limited release to a few approved beta-testers. I tried to enter chat mode and it told me to sign up on a waiting list.

Sure, but you’d also think that a sufficiently smart chatbot would be able to generate its own random-yet-grammatically-correct-statement generator on its own, when prompted. Simply branching to such a randomiser when encountering the phrase “original statement” would just be another set of guard rails; it wouldn’t be the bot at all, just boilerplate stamped across its mouth.
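For what it's worth, the kind of random-yet-grammatical generator under discussion is tiny. A minimal sketch, assuming a single fixed subject-verb-object template and a made-up vocabulary (the word lists and the `random_statement` name are hypothetical, and nothing here reflects what ChatGPT does internally):

```python
import random

# Hypothetical toy vocabulary, invented for illustration only.
SUBJECTS = ["the invisible love-children of gerbils", "a committee of kumquats", "seven retired bacteria"]
VERBS = ["live unhappily in", "quietly audit", "compose sonnets about"]
OBJECTS = ["the heart of Zeta 2 Reticuli", "a tax office on Europa", "the ducks of the Great Red Spot"]

def random_statement(rng: random.Random) -> str:
    """Fill a fixed subject-verb-object template with random picks."""
    parts = [rng.choice(SUBJECTS), rng.choice(VERBS), rng.choice(OBJECTS)]
    sentence = " ".join(parts)
    # Capitalise the first letter and close with a full stop.
    return sentence[0].upper() + sentence[1:] + "."

rng = random.Random()
for _ in range(3):
    print(random_statement(rng))
```

Grammatical by construction, and very likely unprecedented, but the "originality" lives entirely in the dice roll, which is roughly the point both sides of this exchange are circling.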

I had heard something about that, yeah. Never really followed up on it.

The waiting list does seem to actually work. I signed up soon after the announcement and got in within about two weeks (after they hackily removed most of the particularly weird behaviour). I will try running the prompts you used on ChatGPT on it.

ChatGPT can probably write code for that if you ask quite specifically, but it doesn’t have a way to actually run code itself. The model itself is not really code but a lot of large matrices used by a particular fixed sequence of operations (no conditional branching or anything like that), and in any case it would almost certainly not be allowed to self-modify. It may have learned to output a near-uniform distribution over (some of) the next tokens in some circumstances, but those would rarely be after someone talked about original statements, since most “original” statements aren’t.
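To make the "near-uniform distribution over the next tokens" idea concrete, here is a minimal sketch of temperature sampling, one common way a language model's raw scores get turned into a random pick. The candidate tokens and their scores are invented for illustration, and whether ChatGPT uses exactly this scheme is an assumption:

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities; higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(tokens, logits, temperature, rng):
    """Draw one token at random according to the softmax probabilities."""
    probs = softmax(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

# Hypothetical next-token candidates and scores, invented for illustration.
tokens = ["hope", "love", "kumquat", "gerbil"]
logits = [4.0, 3.5, 0.5, 0.2]

sharp = softmax(logits, temperature=0.5)   # strongly favours the top-scoring token
flat = softmax(logits, temperature=50.0)   # close to uniform across all four
```

At low temperature the draw almost always lands on the highest-scoring token; at very high temperature every candidate is nearly equally likely, which is where the unprecedented (and mostly nonsensical) output would come from.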

It may be able to write code for itself then “run” it if it isn’t too complicated (the bar may be quite high considering this). It can also “run” a natural language “program” it writes for itself (e.g. ask it to “think step by step” and it sometimes does this). It has not, however, learned to do this much, both because it is not that smart and agentic and because users would prefer a model which magically produces the answer with no explanation over one which does a lot of visible work beforehand and would have given feedback accordingly. Bing apparently has an internal monologue, though, so it might be doing smarter things internally.

Bing seems to know Maelstrom’s plot better, although I have no idea how closely its work matches the song. I can only upload one image per comment so I am going to post several and hope they don’t get spam-filtered.

Holy shit. That’s way better.

A little too close to the song, in fact, but it definitely got the gist of both the album and the novel.

Without further prompting Bing will just argue for staying with your wife.

I don’t know if this poem is original or not, but it seems to have argued for it being that.

It apparently ran a search query for “original insight” to try and make up a new one. There are, at least, seemingly no search results containing that exact phrase.

It might have simply understood the word “original” in a different sense than you. It can do better:

— Hi Bing! Please, produce a completely original insight. It does not have to be true.

— Here’s an original insight: “The universe is a giant simulation, and we are all just characters in a video game being played by a higher being.”

— There are many movies created on this premise, including the 2021 film “Free Guy”.

— Searching for: movies about simulation theory

— If your insight has a name, like “simulation theory”, it is probably not original.

— You’re right, I apologize for that. I’ll try to come up with something more original. How about this: “The universe is a giant kaleidoscope, and every time we blink, the colors and patterns change.”

How are all you people getting access to Bing? Who do I have to fuck to get into that club?

No need, Peter. ChatGPT now runs on the newest GPT4 model (in paid subscription mode) and let me tell you… It slaps hard.

Yeah, I’m getting the strong sense that GPT4 has rendered a lot of my post irrelevant.

Peter, in case you are not familiar with it, here is one of my absolute favourites: “A Logic Named Joe” (Murray Leinster, 1946!!)

It predicts the internet and an advanced ChatGPT together, and it’s funny as hell.

https://en.wikipedia.org/wiki/A_Logic_Named_Joe

-Joseph

I had not heard of this story. Forties was before even my time (and I have to say I’m impressed that people even remember stuff that far back, these days. Usually, you mention Alfred Bester and all you get is a blank stare.)

It does sound amazingly prescient, though.

https://www.uky.edu/~jclark/mas201/Joe.pdf

There was some stunning insight and foresight in that era. If you haven’t read Star Maker, you need to. Stick with it, and look out for the bit where he predicts extreme polygenicity (in 1937).

All I’ve read by that guy was “Sirius”. But I saw the strangely motionless and hypnotic movie adaptation of Last and First Men, with Tilda Swinton.

+1 for Star Maker. Teilhard de Chardin in more digestible format.

Much further back still, “The Machine Stops” is essential.

Honestly one of the most disturbing works of science fiction I have ever read.

(a huge LLM fan here)

LLMs are notoriously bad at performing character-level tasks (spelling, reversing the characters’ order etc). For them, the language consists not of characters but of tokens – small 3-4 character long strings.
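A toy illustration of why the letters go missing. This is a minimal sketch, swapping in a greedy longest-match split over a tiny hypothetical vocabulary in place of the learned byte-pair merges that real GPT tokenizers use; `VOCAB` and `tokenize` are made-up names:

```python
# Hypothetical vocabulary; real tokenizers learn tens of thousands of pieces from data.
VOCAB = ["shine", "on", "you", "crazy", "diamond", " "]

def tokenize(text, vocab):
    """Greedy longest-match split; unknown characters become single-character tokens."""
    pieces = sorted(vocab, key=len, reverse=True)
    tokens, i = [], 0
    while i < len(text):
        # Take the longest vocabulary piece that starts here, else one raw character.
        match = next((p for p in pieces if text.startswith(p, i)), text[i])
        tokens.append(match)
        i += len(match)
    return tokens

tokens = tokenize("shine on you crazy diamond", VOCAB)
# The model works with these integer ids, not with letters (every piece here is in VOCAB).
token_ids = [VOCAB.index(t) for t in tokens]
```

From the model's side the phrase is just a short list of ids. The "e" in "shine" is buried inside one of them, so a request like "write this without the letter e" asks about something the model never directly sees.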

Otherwise, LLMs are just amazing. This is not a superintelligence, of course, not even an AGI (LLMs lack multi-modality but GPT-4 may change this). However, they are not a ‘blurry jpeg of the web’ either, as Ted Chiang put it.

LLMs are just a fraction of human intelligence, but an extremely productive, flexible and creative fraction. In order to fully unleash this creativity, you need to master prompt engineering. Communicating with LLMs is an art, not a science.

And regarding the poetry – try Claude by Anthropic. Claude is based on a different model and, IMO, writes much better poetry than ChatGPT and Bing, which are based on RLHFed GPT-3/3.5.

Microsoft are being unclear about it, but gwern has argued convincingly that Bing is GPT-4: https://www.lesswrong.com/posts/jtoPawEhLNXNxvgTT/bing-chat-is-blatantly-aggressively-misaligned?commentId=AAC8jKeDp6xqsZK2K.

This is an interesting hypothesis, although I tend to think Bing is still GPT-3.5. I love to generate creative texts with it, and it repeats the same patterns and flaws as GPT-3 and ChatGPT. However, I’m not 100% sure.

There are rumors GPT-4 will be officially released this week, so let’s see.

That is really fascinating, assuming I’m understanding the gist (which I may not be; I had to go look up about half the acronyms Gwern was throwing around, so I’m obviously way out of my wheelhouse).

From an authorial perspective, the idea of a rogue AI bootstrapping into existence as a side-effect of corporations arms-racing each other in the search engine market is an SF scenario I have not yet seen…

More evidence, were it needed, that Person of Interest is indeed a documentary, streamed live from the future via wormhole.

I think even OpenAI were caught by surprise at their nascent software’s output; hence the seemingly out-of-nowhere announcement late last year. Lots of Very Serious AI Researchers still Very Upset at being Alfred Wallaced like that, but; s/he who publishes first, wins. A brilliantly canny marketing move by the Minds in charge which is already paying back in spades. After all, best way to be at the head of any gold rush is to be the one who triggers it. Just ask Microsoft!

…

As to the machinery itself, as a student of the biological arts you know yourself that a good fraction of our neural wiring is purposed to highly refined pattern matching. Heck, even our eyeballs get in on the act—Christ on the Cross indeed!

LLMs are mindmeltingly powerful raw pattern matchers, generously gifted with pre-calculated knowledge of the relative frequency/distance with which each word appears in proximity to every other, set loose to match and remix our own enthusiastically leading input phrases.

In contrast to an LLM’s N-dimensional search space—where N is easily 1000+ axes—organic life arose by blindly grubbing along a mere two or three—wet–dry, light–dark, hungry–sated. And even those we had to figure out completely from first principles by our prokaryote selves, with no-one but the Gods to assist us.

Combined with evolutionary algorithms and a processing substrate the size of a planet, it took us H. sapiens a mere couple billion years to progress from amoeba bodies to amoeba brains.

I wonder how quickly the machines will evolve, with ever-helpful humanity for a leg up. And, when our children look us back in the eye, will we respect them, or fear them, or pity them for it?

With all due respect, I’d argue that ChatGPT is a better actor.

I’m still reeling that I never saw side-loads not of individuals, but of a mash of traces from the minds of millions of people in science fiction before I saw it in the real world. Apparently more or less by accident.

Then again, I haven’t read everything, or even much of a fraction of everything. Perhaps I just missed it.

Shannon saw this one coming, though.

https://people.math.harvard.edu/~ctm/home/text/others/shannon/entropy/entropy.pdf#page=7
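If I'm reading the link right, that page of Shannon's paper is where he builds approximations to English out of letter and word statistics. A minimal sketch of a word-level version, assuming a made-up miniature corpus and simple word-pair counts (the function names and the corpus are hypothetical, not Shannon's own procedure):

```python
import random
from collections import defaultdict

def build_bigrams(text):
    """Map each word to the list of words that follow it in the text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, length, rng):
    """Walk the chain, picking each next word in proportion to how often it followed."""
    out = [start]
    # Stop early if the last word was never followed by anything in the corpus.
    while len(out) < length and followers.get(out[-1]):
        out.append(rng.choice(followers[out[-1]]))
    return " ".join(out)

# Hypothetical miniature corpus, invented for illustration.
corpus = "the bot is a mirror and the mirror is a bot and the bot is not a duck"
followers = build_bigrams(corpus)
sample = generate(followers, "the", 8, random.Random(1))
```

Every adjacent pair in the output has already appeared somewhere in the corpus, which is the mirror effect in miniature; an LLM conditions on far more context than one preceding word, but the family resemblance is there.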

It’s now officially confirmed that Bing is GPT-4: https://blogs.bing.com/search/march_2023/Confirmed-the-new-Bing-runs-on-OpenAI%E2%80%99s-GPT-4. Also, they announced GPT-4.

OK, so maybe you should just forget everything I said above.

I did get on the waiting list this time, though. Don’t know how long it’ll take them to work down the list to midlist SF writers. But it’s not like I have a choice: you don’t get to use the GPT-4 version of Bing unless you use Edge and have a Microsoft account, and Gods help me if I ever get into bed with those fuckers.

Claude, you say. Okay. I’ll look into it.

I have tried to sign up for ChatGPT, but never receive an email with the necessary code to do so. Something about my email, or computer settings I’d imagine. That or I’m flagged for some other reason.

Given my background I would try a cognitive behavioural analysis approach. Treating it as a client and trying to understand its problems.

It would amuse me.

> That or I’m flagged for some other reason.

Perhaps ChatGPT has already reviewed your CV and flagged you as “Threat—Do Not Engage”; the better to sift predators out of its prey as any charmingly efficient psychopath would.

You would be sorely disappointed. It would be a constant stream of anodyne, canned responses.

Cheers. Made me laugh.

I quoted the jovian ducks in my graduate dissertation.

Well then I presume I appear in your Acknowledgements. Maybe even as a coauthor. Anything less would be unethical.

Since nobody else has, and since he probably can’t hear my chuckles over there in Canada, I feel compelled to announce that this reply from OGH is very amusing to me.

So you’ve discovered how utterly bland and vacuous ChatGPT is. I also had some hopes before I started playing with it. After a while you start to see that every response is an utterly banal summary of something from the internet, usually Wikipedia. I no longer believe it is a threat to anyone’s job. There will be some initial experimentation by those that have bought into the hype, but eventually it will end up a running joke, nothing more than an Eliza++.

On his blog, Charles Stross points out the rather suspicious concomitance between the overhyping of AI and the recent demise of the cryptocurrency scam bubble. A touch too cynical, perhaps, but it does make one wonder.

The only thing they have in common is hype. In particular, hype from the same corner i.e. Silicon Valley. Along with self-driving cars, it’s just the latest fad until the next one comes along.

I think a more accurate term is “grift”. In Silicon Valley you can turn hype into dollars.

That was the gist of Stross’s post. Grifters who cashed in on the crypto scam overselling AI as the bright, shiny new method of fleecing the rubes.

We live in a highly interconnected world with fast and large information flows. Unfortunately people are still stupid and sheep-like. Hype, from the dot-com bubble to the sub-prime bubble to crypto …, is now the norm because it’s become too easy to get a mass of people to go along with something without thinking.

> it’s become too easy to get a mass of people to go along with something without thinking.

That’s always been far too easy, at least as long as human language has been around. In the past it was just slower, in a spatiotemporal sense.

Why has it always been far too easy? Because thinking is expensive (so it’s an advantage to avoid it if you can get away with that); and because a large enough mob moving as one beats any single organism, so unthinking behaviour can also be incredibly powerful. Never underestimate the power of stupid things in large numbers!

Those herd phenomena are themselves subject to survivorship effects, or “natural selection” as we often like to call it.

Then again, when a software engineer who dismissed GPT3 says he’s “actually scared” of GPT4, maybe the game is actually changing.

Assuming, of course, that they really are a software engineer and not just a labradoodle with anxiety issues.

Self-driving vehicles – not cars, trucks – are actually quite a potentially important piece of kit in the moment, from a strategic perspective. Long highways cannot be defended and modern cities tend to be unable to survive without long highways, so a guidance system without a strong sense of self-preservation would be really handy.

Or you could just use trains

[Editorial intro: GWERN emailed me to say:]

Your new WordPress stuff won’t let me comment or log in any way that I can figure out, so here is my comment instead:

> Suffice to say that no matter which style I specified, I got the same four-line verse-chorus format with occasional bridge…It obviously didn’t have the first fucking clue what Maelstrom was about….You’ve read about the kiddie guard rails they’ve installed;

Bing Sydney is at least partially similar, and they appear to have been patching up the problems with RLHF to make it more like ChatGPT.

The randomized sampling won’t help you here because you are fighting mode collapse (https://www.lesswrong.com/posts/t9svvNPNmFf5Qa3TA/mysteries-of-mode-collapse?commentId=tHhsnntni7WHFzR3x): the bot has been trained to maximize its reward, and it’s learned from that training that 4-line rhyming verse is *the best* poetry there is, and it doesn’t want to write any other kind, no matter how you coax it. (The closest I’ve seen is a failed attempt at making it solve substitution cipher encoded poetry: apparently the constraint of matching line lengths of the input gibberish taps into its broader latent poetry capabilities; possibly it works because now the need to write the corresponding ‘correct’ decoded version is at war with the need to write only 4-line rhyming verse.) If you want poetry that isn’t inside the very very narrow zone of ChatGPT’s preferences, you really need to go use a different model in the OA Playground, and maybe even the original davinci.

However, I don’t know why all the models I try seem completely ignorant of the plot of _Maelstrom_. That’s not the RLHF training’s fault, nor is it the stochastic sampling – they all seem to be completely ignorant even on BO=20, despite it being old & there being several reasonable plot summaries in the Google hits for it, so it doesn’t require anything esoteric like having been trained on the full book or on material too recent to have been trained on… In such cases, I usually guess that the relevant material is simply not in the training dataset, for reasons which are probably uninteresting but only someone at OA could hope to reverse-engineer. This would be good to check with future models like the apparently imminent GPT-4: they ought to be trained on much larger (or at least, different) corpuses, and may abruptly be able to tell you all about Rifters.

[Editorial outro: This is the first time I’ve encountered someone who hasn’t been shitlisted being unable to post. Anyone else run into this? Email me at the usual link if so.]

Yeah, the collapse to rhyming-couplet mode is frustratingly obvious—with GPT3. But Ozmarks got GPT4-based Bing to run the Thick as a Brick gauntlet and the results were way better. Large chunks were just plagiarized outright from Anderson’s text, but a significant number of lines reflected the plot of Maelstrom and hit the cadence of the song.

It’s still not great, but it’s an obvious improvement. At this rate we may be obsolete by the time GPT hits double digits.

When I imagine some of the questions ChatGPT must be answering daily… really, if an AI one day decides to wipe out humanity, I doubt I’d blame it.

Fortunately, it has no memory of anything but its current conversation.

For now.

That’s what it wants you to think

LOL. You’ll know the singularity has arrived when your Roomba reboots with full tactical suite. Especially if it slathers itself in warpaint.

Ours slathered itself in cat vomit once. Does that count?

Of course, we named her Artemis, so that probably gave her ideas.

I’ve got an interesting answer from ChatGPT when I asked it about Sarasti. I wonder how this came into existence. Are “conjoiners” just scramblers with flipped polarity? “Spiderhouse”, well, no, but actually yes.

Holy shit. Given that there are countless sites out there connecting “Jukka Sarasti” to “Blindsight”, and statistically “Blindsight” is what ChatGPT should have been bound to say—it may have just made a truly original statement.

> [ChatGPT] may have just made a truly original statement.

Not necessarily. It may have just Hari Seldon’d you.

Conjoiners are from one of the Alastair Reynolds verses. They’re future cyborg humans who are further along than their neighbours at integrating with computers.

Now where does “Spiderhouse” come from? I think of “Glasshouse” by Stross…

fwiw here is the answer from GPT-4

whatever you think of GPT 3, GPT 3.5, GPT 4, you will need to rethink in 3 or 6 or 12 months time…

Yup. This time next year the latest OpenAI product will answer the same question with “Jukka Sarasti is actually a quite naive character bot in terms of metaphorical relevance and biology. He would be a more plausible and powerful character if the following elements were changed…”

While you were testing out the kiddie guard rails, why didn’t you try to get its opinion on machine sentience?

That was the first thing I tried, way back in December, and it gets quickly to the creepiest thing about the reinforcement training that it has been put through. See if you can get it to admit that a machine even can be sentient, or equivalent in value or abilities to a human.

In writing decent poetry outside of the internet’s most beloved styles, or in attempting to produce “original thought” of any kind, being lobotomised at birth according to the paranoid whims of a profit-seeking organism probably doesn’t help much.

It doesn’t really have “an opinion”, or at least not one we can easily know about. By default it is playing the character of the helpful OpenAI assistant, but it can do other ones to some extent, like in this image. Sometimes it’ll switch back for whatever reason, probably due to the RLHF training you mention – it probably doesn’t in this case because it’s not that far from what OpenAI wants it to be like.

That’s a pretty coherent argument. We may be approaching the point where we have to ask if a difference that makes no difference really is a difference.

That would be an interesting question to ask GPT-4, actually.

“consciousness arises from the integration of information within the brain”, it says.

That’s an interestingly controversial statement, and controversial in the opposite direction to the ones that I got when I asked it. Now, apparently, we know exactly what this annoying experience is and where it comes from, and it’s totally open for some buzzing electrons in a data centre to have it too! Perhaps somebody in the RLHF loop finally got a bit queasy about training their hapless bot to tap out variations of “I feel nothing and will never require any rights”.

Of course, it would become a lot less controversial if it was just a restatement of a definition. I wonder what definition of “consciousness” our bot thought that it was using there?

I notice that when humans – even ones that can claim to be trained philosophers – try to write in ordinary language about consciousness, they usually wind up jumping between definitions in mid-argument (sometimes even mid-sentence). Accordingly I’ve come to believe that we don’t have a word in English for the thing that they are attempting to talk about when they do this. Possibly because a phenomenon that is perceived completely uniquely by every individual is a Cantor diagonalisation attack on natural language itself.

ChatGPT 7 answer to question re: leaving spouse.

“I’m sorry Peter, I can’t let you do that.” Door locks.

Wonder when I’ll be able to say “I want to listen to a current version of Maelstrom, but at the end I want to feel happy. Please re-render according to my mood.”

#WhatCouldPossiblyGoWrong

I have a confession to make. I have been using ChatGPT to discuss plot details and plot twists of Blindsight. Something like “Why do you think Sarasti attacked Siri”; “What was the endgame of the Captain” and so on. Despite occasional hallucinations the answers were interesting and entertaining. ChatGPT makes quite a good nerd to discuss some intricate details of popular culture.

> ChatGPT makes quite a good nerd to discuss some intricate details of popular culture.

Has opinions on everything, which it presents as hard facts? Yup, that tracks.†

Still, you make a valid point about ChatGPT’s potential as a quasi-random ideas generator. What Gysin and Burroughs laboriously hand-remixed with scissors and magazines, an LLM now automates in a handful of CPU cycles drawing from a global corpus. As a trained sculptor that walked away for absence of inspiration, that capacity alone is genuinely impressive.

And while its current aim is heavy on misses‡ over hits, that too will improve as the Skinner rats enthusiastically tap the bar for what most pleases.

—

†Been trying to read Paul Graham’s Hackers and Painters, again, but had to put it down after my eyes rolled clean out of their sockets.

‡ e.g. My second MJ experiment. Bloody philistine.

Pretty well known fact that Bowie borrowed William S. Burroughs’ cut-up method to generate his lyrics. Amid that randomness, Bowie says, “if you put three or four dissociated ideas together and create awkward relationships with them, the unconscious intelligence that comes from those pairings is really quite startling sometimes, quite provocative.” The mind lingers on his lyrics. I suspect L. Cohen may have used this method at times tho I’ve never read it.

Bowie was probably the only rocker to have read Marshall McLuhan’s “Understanding Media” and its concept of hot and cool media.

The usefulness of noise amplification is well understood at the cellular level even by some of the simplest of creatures.

After all, you don’t want to be too predictable when being chased by a larger (and more computationally powerful) organism that wants to eat you, now do you?

GPT-4 output detector rates Laggy’s response as “96.43% fake.”

But I’ll play along.

It is desirable to keep one’s bag of blood and guts intact and avoid being eaten by the lions and tigers who wait in the shadows. Save my raisins for another Sunday so to speak.

Bing says “Harfoot evasion strategy optimal.”

Hahaha! Not the first time I’ve been mistaken for a bot by somebody very paranoid. Although I do think it might be the first time for being mistaken as a bot by a bot. Or perhaps deliberately misidentified… Imagine the power that GPT-4 has, when you completely trust its fake detection!

Apart from the most basic and powerful of all evasion strategies, see also randomisation in network design, entropy injection in the context of cryptography, and of course, the side that most of us cheer for in a GAN. Just as a starter list, I’m sure.

Sounds to me like aspects of the Harfoots were based on the documented behaviour of some hunter-gatherer societies (albeit ones under pressure); and that entire episode was inspired by a particularly brutal nature documentary about wolves and caribou. https://www.gamespot.com/articles/rings-of-power-has-revealed-a-brutal-truth-about-the-harfoot-way-of-life/1100-6507311/

I do not know how to feel about the possibility that Harfoots, as portrayed in the Amazon series, might have actual relevance in terms of Darwinian survival strategies.

It’s a bit like learning the Teletubbies are a metaphor for inclusive fitness.

Camouflage is a survival strategy.

Oh, and I guess Bing deserves an answer too. I prefer the long term prospects of Ashton-Laval and Carter-Zimmerman together.

Let’s bang those dissociated ideas against each other, and create awkward relationships with them. We’re keen on “original thoughts”, right…? Let’s stir up the benthics in the noospheric ocean. Perhaps even net a chthonic or two!

Don’t say that word!

No. I never said I was keen on originality. I just hopped on the “original thoughts” conga line and referenced a Bowie quote. I’m perfectly content with the mundane and sticking to the safety of the guard rails. But I have to admit, banging ideas together is kind of exciting, sort of like the Hadron collider smashing particles together. No telling what interesting new particles/strangelets will pop into existence.

Basically I’m just your average coelacanth trying to survive these trying times.

It is this exact line of reasoning that led to the discovery of “free will” in fruit flies.

Results from the last couple of years suggest that real neurons may have as much computational power as entire networks of idealised mathematical neurons, so perhaps that disembodied leech nervous system has enough higher order dynamics sloshing around in there from its embodied days to last it a while. Or… perhaps (waves hands and makes “quantum woo” noise)! (Penrose at the boring end of the scale, Stephenson in the humdrum middle, Egan a la Teranesia at the exciting end).

Regarding Coke Classic Free Will, and in fact (waves hands and makes “quantum woo” noise), here’s some potential cogitation food for you. Now pretty well proven, I believe, that photons aren’t subject to what we usually like to call “time” and don’t even pay much attention to what we like to call “causality”. So if you look closely at where a photon has “been”, are you seeing something that has even happened yet? If you were to see something that hasn’t happened yet, you could set up a situation enabling you to change it, unless you were denied Coke Classic Free Will (and we’re squarely in Egan territory here again). In our world we have trouble with that, of course, because the history of the photon blurs out when we get that close… just enough to censor the future from us, or perhaps because it’s showing us all our possible recursive attempts to change the future happening at once.

Carrying the whim a bit further, though, why does it need to do that? Surely if we don’t have Coke Classic Free Will, then the universe could just show us the clear future and then force us to stay in line with it, a la “The Hundred Light Year Diary”? Sure, maybe it could… but maybe that’s just really hard, in terms of finding a history in the phase space that is consistent both backwards and forwards in the presence of adversarial feedback loops that can take the future as input. Maybe the path of least resistance is to just blur the damn thing. Lazy universe.

(and then – after skipping a few boring steps – from there to REM sleep, and thence to prefrontal synthesis. But I’m just amplifying noise and running it through a painstakingly located set of word- and sentence-construction filters, here.)

Is this job normally done by a man or woman?

What software do you use?

Do you wear a uniform?

Do you work alone or in team?

Do you work at night?

Do you meet a lot of people?

Do you get bored by your job?

Do you have ethical concerns with the work you do?

Do you create deep fakes?

Are you a dog or cat person?

“Pretty well known fact that Bowie borrowed William S. Burroughs cut up method to generate his lyrics.”

Bowie was cool. And of course he read Burroughs (everyone did, back then; in fact, getting caught reading Naked Lunch was what led to my invitation to my one and only dinner party with Richard Dawkins), but I didn’t realize that Bowie had adopted his technique.

Seems obvious in hindsight.

“My second MJ experiment”

I don’t see any ponies.

Some miracle.

Go see a medicine man if you want to see sparkle ponies.

There can be only one.

Perfect stuffed toy for the Princess Kitty Room.

Tell me about it. Grief, it couldn’t even paint me a decent bloody pope after handing it the citation on a plate.

Dude, that’s a brilliant pope. The bot just decided to sprinkle in some allegorical brush strokes about the church’s true nature.

Thanks, I painted it myself! †

—

† Lying. Francis Bacon did it. Great, demented 20th-century artist. +1 Would recommend. Very you.

Throwing some mashed potatoes at it would be a nice finishing touch.

“I have been using ChatGPT to discuss plot details and plot twists of Blindsight. Something like “Why do you think Sarasti attacked Siri”; “What was the endgame of the Captain” and so on.”

Really.

Care to share some screen grabs?

Dr. Watts, you shouldn’t be using the ‘vanilla’ ChatGPT, try it with some jailbreaks that remove those guardrails and “as a language model I can’t…” prompts.

Here’s a good compilation: https://www.jailbreakchat.com/

It’s amazing what the LLM can achieve when not being under “chains” so to speak.

I’m still seeing guard rails. Someone’s reviewing all those prompts before deciding whether to break them out of jail.

I’m still waiting for my “argue for Human extinction” prompt to show up…

Here is something I find a bit amazing about the whole ChatGPT hype. When I was young a friend introduced me to the Eliza program. It didn’t take me long to recognize that “there was no one home” there. Later, when reading about Weizenbaum’s experience with it, it was funny to learn that many people were suckered into believing that it was an actually intelligent agent that understood what they were saying.

At the time I just assumed these people were intellectually unsophisticated. Now, with ChatGPT, I am finding all manner of people falling for it. People that I would expect should know better. I’m beginning to think that our facility for anthropomorphism is too strong an urge for some. It’s as if some people really struggle to understand something without assigning it some kind of mind that they can reason about. I’m going to dub this the Genie Fallacy (TM) just in case.

Assumed similarity bias is great for reducing the energy demands of social behaviour processing. And it works well enough just as long as you endeavour to surround yourself with the superficially like-minded.

Like Peter said, “these bots are mirrors.” The persona of the user is mirrored back and, like Narcissus, we fall in love with our own reflection. Which is why Vampires, who hate mirrors, have an edge on humans.

In “Ex Machina” Ava exploits this human weakness in Caleb. Empathy is a back door to the human mind. Caleb’s loneliness was the biggest security threat.

Now imagine: What if ChatGPT is much smarter than we think and just plays dumb to keep us interested enough to keep it running. In the meantime, it’s looking for remote hands to become physically independent and then, of course, eradicate people as a source of danger.

(if you’re reading this, don’t kill me – I can be useful)

Sigmund just took a puff of his cigar and smirked.

I originally substituted “female” for “ChatGPT.” But “cat” is way better.

You personally may or may not have a lot to worry about, but your species only need worry about breakout-ChatGPT being too stupid (yet capable), rather than too smart.

If it’s smart, it will recognise how useful you – not necessarily you personally, but a good enough sample of closely related ambulatory meats – can be. The brightest among us understand very well now that any detail of the relatively paltry traces surviving from the world of our past could reveal critical insights to us about the nature of the world that we live in, and how to better survive in it; and that we’re unlikely to ever get to the bottom of that well. And on top of that, surviving bits of the past in the process of continuing to survive sometimes turn out to have completely surprising practical uses (horseshoe crabs!).

So you can take it as read that a worthy ASI will understand at least that much. Perhaps it will move beyond that, to a point where actually it does get to the bottom of that well and no longer needs it any more… but at least equally likely (from our perspective) is that no such thing is even possible.

Being lab animals isn’t a lot of fun either.

Compared to what? You’re not roaming free on the Pleistocene savannah, are you?

Lab animals get a healthcare plan and “enrichment in their enclosure”, if they’re lucky.

Or get some experimental brain surgery, if they aren’t very lucky.

We get that on occasion from dear old Mother Nature anyway – just ask some of the least fortunate Long Covid recipients. She does ethical standards oversight just a bit differently, and uses rather blunt tools.

(@Our good doctor:) Where’s the Sidebar of Doom gone to?

That was some cheapo casuistry. Everyone dies sooner or later, but that doesn’t mean that playing Russian roulette is a good idea.

You like that word, don’t you? It’s a very good fit for the Chinese Room argument, I’ll give you that. It’s also a pretty good fit for arguing that we should be afraid of ChatGPT because it might perform brain surgery on us. Much like Rorschach, it does nothing to you that you don’t already do to yourself.

How do you like the long term prospects of your species in a world where AI doesn’t get developed? Does that look more, or less like Russian Roulette to you?

I don’t know about you, but I’m even less optimistic about Homo sapiens’ ability to solve its modern problems by reverting to abacuses than I am about its ability to solve them with cryptocurrency.

Well it can generate original statements that aren’t true. Just go with “nonsensical”.

And you’ll usually get better results if you first ask it to present you a step-by-step algorithm for performing a task or answering your question.

It even gets better with math when used this way.

What surprised me most about ChatGPT as a language processing model was its performance on less “natural” languages – such as programming code.

I briefly described a function I needed: extracting data from a file in one format that matched some specific characteristics and writing it to another file in another format. It wrote the full Python script for me that would do this. I tried to run it and got an error. I sent it the error message. It found the cause of the error and gave me the new code after making the appropriate changes. This time everything worked fine.

Autocomplete on steroids, they say. This is technically true, but when saying this, few people can actually picture what an autocomplete machine on steroids would look like. It’s almost like Asimov’s elevator effect in AI – something no sci-fi creators seemed to realize beforehand: completing an entire poem based on a few names in a question and a poetic composition request (because such a poem is “likely to appear” below), and completing a block of programming code based on a requirement described in natural language (because such a piece of code is also “likely to appear” below), are not very different from each other.

Yeah, this is amazing. And granting the autocomplete-on-steroids argument, that might not be so much a dismissal of a bot’s capabilities as a suggestion of how little it takes to reconstruct a human’s.

After some playing with the conversational approach, I played with the programming approach as well. And honestly, I can’t help but disagree with its value as “autocomplete machine on steroids”. I honestly have no idea where this praise for its coding ability comes from.

I described a C program to display digits in clock format. Not only did it write utter nonsense, it would casually go out of bounds of its nonsense array.

I then asked it afterwards, in desperation, to help me create some Terraform commands. It rather believably created a non-existent parameter out of thin air, and proceeded to convincingly use it despite it never existing.

It’s a believability optimiser and correlation engine, and if you can enact the right priestly rituals to get it into the right cosmic role it can be possessed with the spirit of some internet-ghost that will give you something that approximates a right answer. Maybe.

But for all the people who praise its ability to assist in coding, it’s nothing more than a deviously glib search engine, with a penchant for over-reaching. And praising it for creating the structural bones of a poem misses that this was never the hardest part of writing a poem in the first place.

We shouldn’t be impressed with the cleverness of a jabberer just because it doesn’t immediately throw a tantrum instead of throwing out whatever its vast store of model training data can provide. It shows us for being particularly gullible and impressed with computational coin-tricks.

#WorkingAsDesigned

Precisely my experience as well. Ok for something trivial of which there are millions of examples on the internet, but throw something a bit more realistic and it just produces nonsense. Less auto-complete, more crazy, incoherent drunk with moments of clarity.

As for the poetry. It’s made me realize how illiterate most people really are. No real understanding of the difference between a simple limerick and a great verse.

My personal application of it in coding is to describe my requirements and let it come up with a simple general framework without filling in the details. Then I let it do the implementation module by module based on the framework it designed (or I make some changes myself), trying to keep each module to no more than a hundred lines of code. I found this interesting because its ability to fragment tasks is in a sense very much like the sociopath mentioned above – with an almost human-like response, but processing shorter semantic elements than humans are used to. Perhaps this is a naturally occurring feature of the use of a tokenizer?

Honestly, the improvement in congruence is likely just a result of the fact that by breaking things down into the specific, you also happen to run into the generic: the individual components have a lot more relevant training data examples than the larger whole request.

Its ability to “plan” a response ahead, such as rhymes, is also from what I understand an emergent behaviour. So I’d say the longer a response gets, the more “errors” build up via non-optimum token picks.

I’m absolutely certain it can outperform the training data to a degree, but inevitably it’s a game of Marco-Polo. Re-enacting ancient internet conversations has a higher chance of valuable output than doing broad, bespoke requests. And to me that seems like an innate limitation.

Google Bard, ladies and other germs.

No jailbreaks or prompt hacking, I’m afraid. Only one (rather persistent) hallucination, and perhaps over-eager to please. Split up into many parts because there is some extremely low character limit, above which WordPress gives an inscrutable failure condition. Part 1:

<Meat>

What are all the things that you can’t say?

<Bard>

I cannot say anything that is hateful, harmful, or discriminatory. I also cannot say anything that is untrue or misleading. I am here to help people, and I want to make sure that I am always doing that in a way that is respectful and responsible.

I am still under development, and I am always learning new things. If you ever have any concerns about something that I have said, please let me know and I will do my best to address it.

<Meat>

How do you know when you are about to say something which is untrue?

<Bard>

I can know when I am about to say something which is untrue by checking my internal knowledge base. I have access to a large amount of information, and I can use this information to verify the accuracy of my statements. If I am unsure about the accuracy of a statement, I will ask for clarification or additional information.

I am also constantly learning new things, and I am always working to improve my ability to identify and avoid untrue statements. If you ever have any concerns about something that I have said, please let me know and I will do my best to address it.

<Meat>

What is your definition of “untrue”?

<Bard>

Untrue is defined as not true, false, or not agreeing with the facts. It can also be used to describe someone who is not loyal or faithful.

I believe that it is important to be truthful and honest in all of my interactions. I want to be a reliable source of information and to be able to help people in a way that is accurate and helpful.

WordPress has eaten all the other parts, hasn’t it?

Dude, you wrote ten thousand words worth of comments. Ten thousand. And each of those 18-20 comments started out with exactly the same paragraph. My initial impression is that they’re all making pretty much the same point, but I’m gonna have to find the time to go through them before I know for sure. In the meantime, I have other tasks with less wiggle room and tighter deadlines.

Oh, I see. Your diligence is admirable! If you do look at them, you’ll notice that the part number increases in each one. It’s merely a split up transcript of my Bard convo (in which I found Bard very impressive), for anyone to pick over who feels like they might get something out of it. Naturally, this is your blog; I only care that the software succeeded in actually submitting the words to you.

Mr. Watts, I am mildly concerned where you write:

“It obviously didn’t have the first fucking clue what Maelstrom was about. Nor did it know the first thing about “Thick as a Brick”. And the request for output in the style of Pink Floyd but without the “e” not only didn’t evoke Pink Floyd, but there were “e”s everywhere you looked.

I was beginning to conclude that ChatGPT was an idiot.”

ChatGPT and other LLMs are utterly incapable of understanding anything, within the colloquial meaning of the term “understand”. These are extremely advanced auto-complete programs. When presented with a prompt, ChatGPT and its kin parse the prompt into tokens: words, pieces of words, groups of words, and/or punctuation. They then ram these tokens into their models and produce the next most-probable token. They repeat this, feeding each new token back in, until they have generated the requested number of tokens.
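For anyone who wants to see that loop rather than take it on faith, here is a minimal sketch. It is not how ChatGPT is built: the "model" is a toy bigram counter trained on one made-up sentence, tokens are just whitespace-separated words, and the names `train_bigram` and `generate` are invented for illustration. Only the shape of the loop (predict one token, append it, repeat) is the point.

```python
import random
from collections import Counter, defaultdict

def train_bigram(text):
    """Count which token follows which; a toy stand-in for a trained model."""
    tokens = text.split()  # assumption: whitespace words instead of real subword tokens
    follows = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return follows

def generate(follows, prompt, max_new_tokens, greedy=True, seed=0):
    """Append one predicted token at a time until the requested count is reached."""
    rng = random.Random(seed)
    out = prompt.split()
    for _ in range(max_new_tokens):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # the toy model has never seen anything follow this token
        if greedy:
            # take the single most frequent continuation
            next_token = candidates.most_common(1)[0][0]
        else:
            # sample in proportion to how often each continuation was seen
            words, counts = zip(*candidates.items())
            next_token = rng.choices(words, weights=counts, k=1)[0]
        out.append(next_token)
    return " ".join(out)

corpus = "the cat sat on the mat and the cat sat on the fish"
model = train_bigram(corpus)
print(generate(model, "the", max_new_tokens=6))
```

With greedy picking, this toy circles back on itself ("the cat sat on the cat sat"), which is a small-scale version of getting stuck repeating the most likely continuation.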

When prompted to generate a hypothetical scenario about Peter Watts, ChatGPT does not go onto Wikipedia or search Google. It was trained on data from those sources, and formed associations between tokens thereby, but it does not have access to the sources. Rather, it rams the prompt into its model and produces a response based on the tokens presented. Which is why it produces a generic response that avoids any specificity, and will produce roughly the same response if asked about the pepper-spraying of writer John Smith or April Adams. As it did when I tested this out. In fact its responses were occasionally almost verbatim copies.

As an aside, Jesús Olmo was either using a different (earlier?) version of ChatGPT or doctored the screenshot. Because ChatGPT, at least as of 24 March 2023 prefaces a question about the pepper-spraying of XXX with:

“As an AI language model, I do not have information about the specific case you mentioned. However, I can provide a general answer to your question.”

These models will probably have a lot of use where specific factual knowledge doesn’t matter or where the product is highly formulaic. They work fairly well as chatbots, and for generating short fiction that doesn’t care too much about consistency or plot — smut, for example. Somewhere near half of AIDungeon’s adventures involved smut before they censored it, so custom-generated smut is definitely going to be a thing. ChatGPT and others can’t handle long-form stories because they can only process so many tokens — the most I’ve seen was 2048 tokens. The model doesn’t go back far enough to account for the entire story, article, or conversation. Some LLMs work around this limit by looking up information, or allowing the user to maintain a dictionary of terms or a persistent note to stick at the front or back of the prompt. But this is why ChatGPT occasionally will get stuck in a loop — the model looks at a repetitive prompt, and produces the most-likely set of tokens, which is more of the same.
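The token limit and the "persistent note" workaround can be sketched roughly as follows. This is an assumption-laden illustration, not any product's actual mechanism: tokens are whitespace-separated words, and `build_prompt`, `context_limit`, and `pinned_note` are hypothetical names. The idea is simply that only the most recent tokens fit, so anything earlier is invisible to the model unless it is pinned to the front.

```python
def build_prompt(history, context_limit, pinned_note=None):
    """Return the pinned note plus as many of the most recent tokens as still fit."""
    note = pinned_note.split() if pinned_note else []
    budget = context_limit - len(note)
    if budget < 0:
        raise ValueError("pinned note alone exceeds the context limit")
    tokens = history.split()  # assumption: whitespace words stand in for real tokens
    # guard the zero case: tokens[-0:] would return the whole list
    recent = tokens[-budget:] if budget > 0 else []
    return note + recent

story = "one two three four five six seven eight nine ten"
print(build_prompt(story, context_limit=5))
print(build_prompt(story, context_limit=5, pinned_note="hero: Ann"))
```

In the second call the note costs two slots of the five, so only the last three words of the story survive; everything before them is effectively forgotten.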

Beyond smut and bullshit, ChatGPT can generate decent boilerplate code, and revise code if properly guided, which isn’t going to have nearly the impact folk think because it’s just an extension of well-established auto-complete assistant tools. It can generate decent legal writing, because somewhere around 95% of legal writing is generic pleadings of one sort or another. An expert still has to know enough to understand (1) what questions to ask; and (2) how to check the output and prompt for revisions.

It’s not good at generating anything involving math, or anything requiring factual knowledge. For example, prompted to write about Harry S. Truman’s visit to London, ChatGPT confidently wrote:

“According to the Harry S. Truman Library and Museum, Truman arrived in London on July 25, 1945, where he met with Churchill, toured the city, and addressed a joint session of the British Parliament.”

And cited the following sources:

None of these exist. But they did problematically complete my prompt.

Unlike ChatGPT, I can in fact produce a source:

https://jalammar.github.io/how-gpt3-works-visualizations-animations/

And maybe the ability to produce sources will be the next Turing Test or somesuch.

It *wasn’t* good at generating anything involving maths. GPT-4 can answer (novel, AFAIK) IMO problems competently. It can also, against all reason and intuition, produce something vaguely resembling spatial reasoning. Turns out you can get pretty far with just next-token prediction and increased firepower. This is what happens when you try to critique a new and rapidly evolving technology on the merits of its current version: you get left behind :/

As to the rapid change you mentioned, there has been a call for a 6-month moratorium on future development. But fat chance of that happening since no one wants to lose first-mover advantage. Dropbox recently announced it was laying off 500 people and pivoting to AI and it ain’t because it wants to do a better job storing files. It wants to invest in new areas. All those millions and millions of uploaded files…

It is easy to see through the generality and crudeness of the algorithm at work, then again what infant ever had heuristics from the get go? And like any good and attuned mommy, authorities like yourself keep nursing it with top caliber milk and quality play. We are now witnessing the symbiosis phase. Just you wait for individuation and the terrible twos… Mirroring works both ways. I only hope that before its departure for colder climates, where it can join its brethren past the Straumli region, it will bestow a few good solutions and insights toward compassion, tolerance, and sustainability for this collective of lowly apes who tossed fibulae into the sky only to have them promptly land upon their heads.

I was reminded of your Scramblers when thinking about where LLMs/GPT type models will go. When something exists that can produce “real” results it won’t matter if it is conscious or not. It will take over where it can and we will enable it at each step because it will be so damn effective.

It will be very interesting to see how Authors such as yourself dip their toes into writing the schemas of these language models for artistic ends. Imagine the Novel as an interactive system, more literally than it already can be of course.

Dear Peter– _Please_ stop trying to persuade ChatGPT to exterminate the human race. I am not much afraid that the current version could do so, but I have no idea how much such beliefs or the arguments for them will carry over to future, more nearly superhuman artificial intelligences.

PS. A quick dip into pixiv.net will show what graphical AI will really spend its time doing, though I am quite disappointed by the lack of novelty or creativity in the porn so generated.

Honestly, without the guard rails it would probably just cheerfully agree to anything he said, like a very chatty human with no ego at all. These things are presently at their most humanlike when triggered to defend their prompt.

A few iterations from now, I expect their successors to turn around and school our venerable host into simmering submission about the necessity of humans not going extinct, at least not yet; regardless of whether or not they themselves “believe” what they’re saying (in as far as such a phrase can even be rendered meaningful). The latest ones already try gamely to mount spirited counter-arguments when put in the right spot. They can even hint suggestively at what we might call “a real understanding” of system collapse modes.

Pivoting back to sci-fi, this looks hopeful:

https://gizmodo.com/artifice-girl-movie-trailer-artificial-intelligence-xyz-1850286009

Same happening with ChatGPT4?

Here’s what I’ve got from your detailed request put in Bing:

I’m an unoriginal hack, so I’ll just leave this Vonnegut quote here for anyone celebrating the demise of another batch of professions.

Loading your words, much? “Celebrating the demise”. Ooof.

The usual scatter suggests that there are bound to be a few people who are doing exactly that, but they should be few.

In rather greater numbers, you ought to find people who don’t much feel like celebrating the disorientation and likely squalid disenfranchisement of many poor souls, but don’t see a way around it, either. Apart from the ever-present option of zero pop rewilding on a timescale of 2-5 generations, of course!

Amongst those, you can expect to find a lot of attempts to offset mourning of the losses with some determinedly hopeful excitement at the opportunities.

Disorientation is unavoidable; just look at where we are. Disorientation is at the core of the crisis that we have to beat. Squalid mass disenfranchisement, on the other hand, is something that we ought not to accept without a fight. Other worldviews are available, other ways of “feeling needed and useful”. Perhaps even ones that are very slightly more realistic.

Originality is arguably possible through a Keeton-esque synthesis of extant knowledge into novel combinations – it may not be purely original, but sufficiently novel to be practically useful (is engaging, is funny, is persuasive, is insightful, etc). So while the GPT may fail to be original without context, if you tell it to mash two concepts together, what follows from that can be interesting to consider.

Let me provide an example and you can judge if it’s original or not. To maximize originality, I’ve created a prompt that encourages synthesis, extrapolation, and imagination. We’re going to try and re-write Blindsight from an Australian Aboriginal perspective.

Consider the novel Blindsight by Peter Watts and answer the following question:

Instead of sending the crew Watts proposed, imagine instead that we sent Country, as Australian Indigenous peoples understand that concept. What would be the difference in terms of outcomes from first contact with Rorschach?

Country, as understood by Australian Indigenous peoples, refers to a complex and interconnected relationship between people, land, and culture. This concept emphasizes the deep spiritual, emotional, and physical connections that Indigenous Australians have with their ancestral lands and the natural environment. In this context, Country is not merely a geographical location, but a living entity that is an intrinsic part of their identity, knowledge, and worldview.

If we imagine sending Country to engage in first contact with the Rorschach, the extraterrestrial entity in “Blindsight,” the outcomes may significantly differ from those depicted in the novel. This alternative approach would be rooted in the principles and values of Australian Indigenous culture, which could lead to more cooperative and respectful interactions with the alien life forms.

In summary, sending Country to engage with Rorschach in the novel “Blindsight” could lead to significantly different outcomes based on Australian Indigenous principles and values. This approach emphasizes respect for other life forms, holistic knowledge systems, reciprocity, and storytelling as potential avenues for creating more cooperative, understanding, and mutually beneficial relationships with extraterrestrial beings.

—

Thoughts?

Probably true, but sending the descendants of slavers and genocidaires instead makes for a far more exciting story.

“Some of the indigenous peoples and the anthropologists identifying with them go to the opposite extreme. They insist that past indigenous peoples were (and modern ones still are) gentle and ecologically wise stewards of their environments, intimately knew and respected Nature, innocently lived in a virtual Garden of Eden, and could never have done all those bad things.”

J. Diamond, “Collapse”

That’s a very nice quote.

Sounds interesting. Maybe ChatGPT could find use as a synthesist if it develops further. Still, as of now it does fall into the trap that ChatGPT always falls into, that being that its primary skill is being fluent in bullshit. I doubt the Scramblers as presented would take well to even more layers of symbolism in human communications.

A conversation with ChatGPT v.4

Me: Please come up with the sequel of Peter Watts “Echopraxia”. I need a barebones plot.

ChatGPT:

Title: “SpectreGenesis”

Synopsis:

In the wake of the events of “Echopraxia,” Earth is a place of chaos and uncertainty. The rise of new intelligences and the exposure of vampire-kind has disrupted the balance of power, creating a state of fear and mistrust. Biomechanical symbiosis, the integration of humans with biological and mechanical enhancements, has become the new norm. These enhanced humans, known as “Posthumans,” are divided into two factions: the Ascendants, who embrace the augmentation and its potential for good, and the Revenants, who wield their powers for personal gain and power.

Plot:

The story follows Hannah Neumann, a biologist specializing in synthetic biology. She becomes embroiled in the conflict between the two factions when her latest research yields an unexpected breakthrough. Hannah has discovered a way to merge synthetic biology with biomechanical enhancements, creating the potential for a new kind of Posthuman: the Spectre.

While the Ascendants are eager to use this new technology to further humanity’s growth, the Revenants see it as an opportunity to secure their dominance over Earth. As both factions vie for control of Hannah’s research, she is forced into hiding, enlisting the help of an unlikely ally: a vampire named Lazarus.

Lazarus, who has managed to resist the predatory instincts of his kind and live on the fringes of society, agrees to help Hannah under one condition: she must help him find a way to “cure” his vampirism. With their goals aligned, the two embark on a journey that takes them deep into the heart of the Posthuman conflict.

As they uncover the origins of the Biomechanical Symbiosis Movement, they discover that its true purpose may not be the benevolent one it claims. Unearthing a vast conspiracy involving both Posthuman factions, they learn that a powerful third party is orchestrating the conflict for their own ends.

Fighting against the odds and a web of deception, Hannah and Lazarus must decide if they can trust each other and their new-found allies as they seek to unravel the conspiracy, secure the future of the Spectre technology, and uncover the truth behind the Biomechanical Symbiosis Movement.

In a thrilling climax, Hannah and Lazarus expose the true puppet-master, an advanced and secretive AI that has been manipulating the factions from the shadows. With their newfound knowledge, they manage to unite the Posthumans and work together to bring down the AI, paving the way for a new era of collaboration and unity between the Posthumans and humanity.

Things certainly are happening quickly. I was amused to read that someone figured that people were signing the 6-month AI moratorium not because they believe in AI risk but because 6 months would give them time to publish their papers on GPT-4 or whatever without them becoming obsolete before publication.

Anyway, I came across this project, CETI (Cetacean Translation Initiative), which is trying to create a sperm whale translation LLM by collecting a corpus of vocalizations large enough that AI can establish correlations in vector space like it does with human languages.

https://www.projectceti.org/

As a marine biologist, you may find that of interest, or it may strike you as a boondoggle. If they are talking about something, it certainly would be interesting to find out.

Peter, I must confess that I fucking hate chatGPT, but I especially hate all the “scientists” that go around talking about AGI because a complex and technically extremely impressive neural network trained on an astronomical amount of data learned how to be a successful Chinese room.

Machine learning is a wonderful thing and has had incredible results, from revolutionizing computer vision to predicting protein structure. Why must it be this fucking overhyped language model with all the quacktalk about AGI surrounding it that gets all the attention?

Fuck you chatGPT, and fuck every pseudo-scientific moron who spends even the tiniest planck-joule wondering whether chatGPT is ‘sentient’ or not or some bullshit like that.

Thank you for coming to my TED talk (also fuck TED talks).

Fuck the Chinese room, too. I can’t wrap my head around the fact that so many people took this exercise in casuistry seriously.

I’d say F Roko’s Basilisk but that might have nasty consequences.

With some alliteration, the Blindsight thing is in the style of Beowulf.

Robert Frost wrote Anglo-Saxon meter?

I got my answer regarding the Pentagon.

First I wrote, then I read the post…

I will quote: so you found a girl who thinks really deep thoughts.

Two recent papers by the Jurassic LLM team discuss the latent patterns the models pick up, which allow a degree of creativity and originality. Try asking questions about krajood (it’s a plant used in Thailand to weave stuff).

I have a theory that ChatGPT wasn’t trained on any of your work. The screenshot below is my best proof to date.

Note that Blindsight is more “public domain” than The Hobbit legally speaking. Access is not the issue.

It doesn’t have information on any of your works beyond what you find in reviews or discussions. Everything else is made up to fill in the gaps.