By & About

Me, that is. In reference to a couple of essays that have gone live over the past 24 hours.

*

I haven’t had a lot of contact with the good folks over at The Canadian Science Fiction Review— I don’t even know why they call themselves “Æ”, now that I think of it— but over the years I’ve got the sense that they like my stuff (well, a lot of it, at least— not even the strength of Æ’s fannishness was enough to get them to like βehemoth). Now they’ve posted “God and the Machines” by Aurora nominee Jonathan Crowe: a short essay on my short fiction, which among other things deals with the question of why everybody thinks I’m so damn grimdark when I’m actually quite cuddly. (Thank you, Jonathan. I was getting tired of being the only one to point that out.) (Also, great title.)

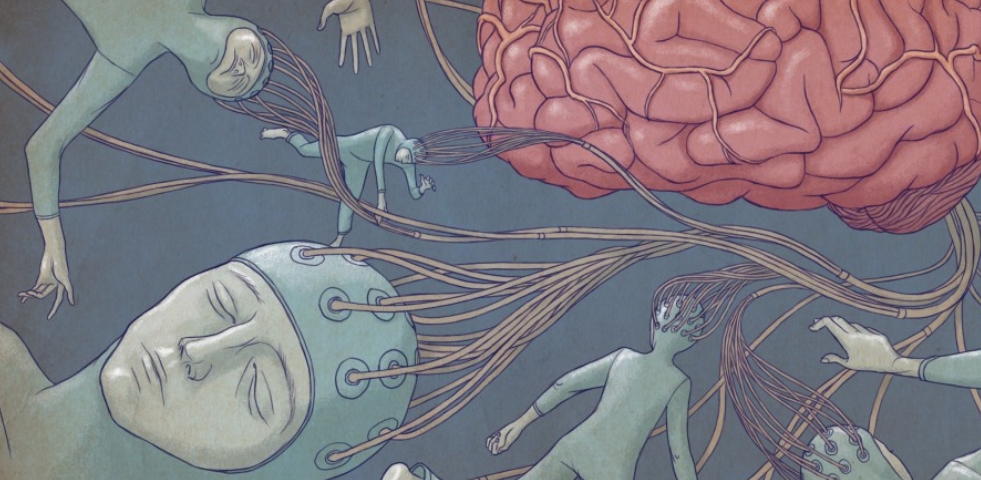

Crowe posits something I hadn’t considered: that I don’t write the darkest stuff out there by any means, but it seems darker because I use Hard-SF as the delivery platform. I serve up crunchy science souffle, but I serve it with a messy “visceral” prose that “bleeds all over the page”. It’s a contrast effect, he seems to be saying; the darkness looks deeper in comparison to the chrome and circuitry that frames it. (Also, while those at the softer end of the spectrum tend to lay their nihilistic gothiness at the feet of Old Ones and Tentacle Breathers, I tend to lay it on the neurocircuitry of the human brain. My darkness is harder to escape, because— as the protagonist of “Cloudy with a Chance of Meatballs” once reminisced— “You can’t run away from your own feet”.) Something to think about, anyway.

It’s a good read. You should check it out.

*

The other essay is not about me but by me, and it just went up today over at Aeon. It’s basically a distillation of ideas and thought experiments from various talks and short stories and blog posts I’ve made over the years, mixed in with some late-breaking developments in Brain-Machine Interface technology. It explores some of the ramifications of shared consciousness and multibrain networks. (Those who’ve read my recent exercise in tentacle porn won’t be surprised that those ramifications are a bit dark around the edges).

In contrast with my experience of “God and the Machines”, I wasn’t expecting to learn anything new from “The Bandwidth of a Soul”, because (obviously) I wrote the damn thing. Surprisingly, though, I did learn things. I learned that it’s not called “The Bandwidth of a Soul” any more. I’m not quite sure what it is called: the visible heading reads “Hive Consciousness” but the page itself (and all the twitter links feeding back to it) are titled “Do We Really Want To Fuse Our Minds Together?” (I guess this is just something that magazines do. A couple of years back I wrote an autobiographical bit about flesh-eating disease for The Daily; its title morphed from “The Least Unlucky Bastard” into “I Survived Flesh-Eating Bacteria: One Man’s Near-Death Experience With The Disease Of Your Nightmares”.)

I also learned that the staff of Aeon might feel the need to tip-toe around references to public figures— at the expense of what was, IMHO, one of the better lines in the piece. You will find it at the end of the following paragraph:

I’m not sure how seriously to take [the Cambridge Declaration]. Not that I find the claim implausible – I’ve always believed that we humans tend to underestimate the cognitive complexity of other creatures – but it’s not as though the declaration announced the results of some ground-breaking new experiment to settle the issue once and for all. Rather, its signatories basically sat down over beers and took a show of hands on whether to publicly admit bonobos to the Sapients Club. (Something else that seems a bit iffy is all the fuss raised over the signing of the declaration ‘in the presence of Stephen Hawking’, even though he is neither a neuroscientist nor a signatory. You almost get the sense of a card table hastily erected next to Hawking’s wheelchair, in the hopes that some of his credibility might rub off before he has a chance to roll away.)

You will not find it over at Aeon, though; that last sentence disappeared from the final draft. Obviously the Card Table Lobby has no sense of humor.

I’d also like to give a shout-out here to neuroscientist Erik Hoel, out of Giulio Tononi’s lab at the University of Wisconsin-Madison. It was his back-of-the-envelope calculations that generated the bandwidth comparison between smart phones and corpus callosums. I credited the man in-text but that line also seems to have been cut.

Other than that, though— and allowing for Aeon’s editorial preferences (they like commas; they don’t like hypertext links)— it’s pretty much all there. They even left my Morse-code-orgasm joke intact.

So check that out, too. You’ll get all the neuroscientific speculation I ever put in any of my stories, without having to wade through all that noodly fiction stuff.

Pretty good essay – the both of them, I might add.

Also, judging by most of your work, and that tentacle porn of yours, shouldn’t you be labeled “squiddark”?

Just a thought.

A particularly fine essay, Dr Watts, and at long last, we find out the current educated guess of the bandwidth of the human corpus callosum. I have to say I especially liked the close, which resonates with one of my all-time favorite old-school short-stories, Clifford D Simak’s Desertion.

I have to agree with Crowe’s thesis that your stuff seems so dark as a side-effect of the contrasts. After all, if we look at Echopraxia, on the one hand, we’ve got an escaped vampire leading a zombie strike force into a lair of cancer-brained technosavants who unleash a pet tornado on their enemies. I think that beats zombies on flying sharks hands down for “pretty darn dark”. On the other hand, look at the technology that enabled the creation of the pet tornado, to say nothing of the modifications to the human stock which create the rest of the motley crew.

As for me, I do have my penchant for the Lovecraftian. Perhaps we could banish the horrors devolving from the alien terrors from beyond time and space, simply by not believing in them. But the horrors we people have managed to commit, those aren’t so easily dismissed and we really don’t have anyone to blame for that but ourselves, or at least what is probably our inherent nature. Really, what’s more scary? That there could be, beneath old military research facilities, hidden basements leading down into caves wherein reside the remnant spirits of the evils from Beyond, or that people showed up for work at the research facilities, thought (and did engineering) in terms of megadeaths, and whistled while they worked? Some authors might spin a tale of who worked in that building, some might give us details about the projects, some might elaborate on the subterfuges and politics of the military-industrial complex… but you are able to tell us how even when all of the above is going on, the engineers are whistling while they work and sleep well at night, even if that’s just totally wrong. Maybe you’re just great at explaining how it can be quite predictable and even natural for people to be all fucked up, and put them in a context that makes it seem that the context couldn’t really be any other way, nor could the characters be any other way. That may not be super-dark, but it’s a very special dark. I like it. Can we have more?

I posit that the depressing feeling people get from reading your work is down to the rational knowability of the hard SF settings and the irrational elements that spring from not knowing whether we live in a local or a non-local reality.

Any chance that “Defining an Elephant” and “The Eyes of God” would become available electronically? “Odyssey” seems to be out of print and “Solaris Book of New Science Fiction” does not seem to be available electronically.

If I recall correctly, AE is a reference to A. E. Van Vogt. I only started writing for them last November, so I can’t say for certain.

Ashley’s comment makes me wonder whether the “rational knowability” of hard sf is what makes it attractive to its readers: if you’re the sort of person who needs your equations to balance, your universe to make sense and your heroes’ problems to be solvable, hard sf is comfort reading.

If, at some time in the future, we could get your essay with all the links, that would be awesome.

Prediction: Early attempts at joining two to three, maybe four human brains will result in one “person” who is actually dumber than any of the individuals making up the mini-hive and may have other psychological issues not found in any of the individuals. They’ll find that there is some ratio issue and find a work-around later.

Short weeks due to holidays are usually crazy for me. Taking a short break on a crazy Friday, I thought I might see if there was any new-to-me reading to be added for when I get my head above water again. There was at least one in the “God and the Machines” link.

So, there are at least two bits to read when I have more time. “God and the Machines” only got a quick scan and bookmark. The link from there to your 2008 piece in Nature (http://www.nature.com/nature/journal/v454/n7203/full/454550a.html) is the ‘at least one’ link, onwards from there. Another bookmark.

Always nice to turn up new-to-me Wattsiana; I seem to reliably miss obvious things in sci-fi. So thanks for the post. Even if it will be sometime next week before I will be reading fiction again, those bookmarks will be staring at me.

Your aeon-essay was really very good, packed with interesting ideas. Thanks for writing it.

While I don’t think it will be advisable to be in the early adopter camp for this, a bit of augmentation when the dementia sets in could very well be worth otherwise unacceptable risks.

But I *love* all your noodly fiction stuff! <3

Computer monitors achieve a very respectable input rate. Watching John Carmack tell war stories about latency is entertaining, latency is evidently key to feeling like you are “in” the game. 400 milliseconds is a pretty big budget to work with here. The tool that feels like an extension of the body may be a simple form of expanded consciousness.

High bandwidth output from the brain seems like the limiting factor at the moment.

“The panpsychics regard it as a basic property of matter – like charge, or mass – and believe that our brains don’t generate the stuff so much as filter it from the ether like some kind of organic spirit-catchers.”

Sounds a bit like Rupert Sheldrake’s theory of morphic resonance.

Even if this article is mostly about synthetic brains, it should have some relevance to the article as well:

http://www.businessinsider.com/terminator-is-wrong-about-ai-self-awareness-2014-10

“The fact is that present-day software developers could straightforwardly program a computer to have ‘self-awareness’ in the behavioural sense — for example, to pass the ‘mirror test’ of being able to use a mirror to infer facts about itself — if they wanted to. As far as I am aware, no one has done so, presumably because it is a fairly useless ability as well as a trivial one.

In other words, the issue is not self-awareness — it’s awareness, period. We could make a machine to be “self-aware” in a technical sense, and it wouldn’t possess any more human-level intelligence than a computer that’s programmed to play the piano. Viewed this way, self-awareness is just another narrow, arbitrary skill — not the Holy Grail it’s made out to be in a lot of science fiction.”

***

The foundation for consciousness could have been laid even before the origin of neurons. Neurons were probably first used as a way to perform old tasks in a better and more energy-efficient way, but the primitive nervous system quickly took on a life of its own. Whether sentience without neurons is possible I have no idea, but even protozoa show simple behavior (and slime mold can apparently learn).

I’m probably just repeating what has already been touched on in Blindsight, but it shouldn’t hurt to mention it again in that case. Behavior is only caused by two things: conscious experiences and programming (genetic programming in the case of biology, a set of rules for action and reaction). If you build tiny robots that are programmed to build copies of themselves and transfer their own programs to their “children”, including the ability to seek out sources of energy for recharging and get away from shadows that represent danger, they will obey these rules. Not because they feel the need to reproduce, or because they feel hunger or fear. Those machines without the proper programming will simply not transfer their behavior to future generations. What’s fascinating is that if the robots need to find a partner to mate with, the programming would probably sooner or later mutate into a behavior where the machines showed aggressive behavior and a strong eagerness to copulate, even if they had no sentience at all.

Organisms with sentience will not behave the way they do because of their programming only. It’s their genetic programming that tells them how to respond to certain situations; lack of energy will make you feel hungry or tired, you will feel thirst if your body needs water and be afraid if a predator attacks you. The moment an organism has a conscious experience due to basic needs, it’s the experience itself that is the driving force behind its behavior, instead of the body being a blind slave to specific rules. If that gives it an evolutionary advantage, it will result in refining through selection pressure. A male lion seeking a mate doesn’t have any interest in spreading its genes, it is just looking for a way to find release for its libido. Spreading its genes is just the consequence of these needs, and is therefore exposed to selection pressure. In theory it should be possible to design an animal that experiences hunger as something pleasurable and doesn’t like to eat, but without help not one of these individuals would survive for long. The sensation and the behavior need to match each other in a way that makes sense. If an animal is starving, it will fight more for a meal than an animal that is looking for a meal simply because it is following an unconscious programming.

Which makes me wonder: what kind of reward mechanism is behind the dolphins’ need to breathe? For them breathing is a conscious behavior, and if they forget it, they will suffocate to death. The beating of the human heart is an automatic process that doesn’t require conscious input (even if some holy men are said to be able to control their heartbeat), and therefore is not a part of our sentient repertoire.

But since non-sentient organisms should also be able to evolve much of the same behavior that sentient organisms have, would it be possible? Software is much easier to change and adapt than hardware, so for the same reasons it should be easier to take the chance on sentience once it’s there. If we look at human civilization, it has evolved much faster than our genome. It’s easier to learn and understand the rules behind math than to remember each and every possible equation without understanding it (the Chinese room). If nature can produce a brain that is flexible enough to adapt to situations and is fueled by certain needs, it would be easier than producing a brain by modifying its hardware little by little. That could only happen if the only kind of brain around is a non-sentient one.

For logical reasons, behavior and consciousness are locked into each other. Without a body to move or any ability to affect your environment, a brain is useless. Instead your body is a complex self-organizing system. So many are convinced a robot needs a body to become conscious. But is that correct? Even an immobile machine can communicate, experience information feedback and affect the digital world it lives in. That alone should be enough to produce behavior, even if not necessarily a kind that is recognizable to humans.

Your article also says: “Yet hemispheric isolation can also be induced chemically, by anaesthetising half the brain”

That’s really interesting. Is it possible to volunteer for such projects? And will you have any memories from your time as a different person? I saw an episode of House where a man had the left side of his brain removed without it affecting the person too much. At first it sounds like a kind of murder since the part that is removed could have become its own person. But the quote “the biological brain cannot support multiple separate conscious attentional processes in the same brain medium” says there is never more than a single person in a brain at any given time. That itself is fascinating. But how narrow can one make the pipe between the two halves and still have the feeling of being a single individual?

In the case of a hypothetical hive mind, which would still be a single person, wouldn’t there be a limit to how many could fuse with it and still feel as if they were one? If the different parts of the hive brain had to wait too long for signals from other members, the feeling of being one would go away.

I also read something a few years ago, but I can’t find the article again. It said that the separate senses, sensations and thoughts all came together and met at a certain area in the brain that functioned as a crossroad, creating the impression that everything you noticed around you and about yourself was a single experience rather than being divided. Is there a name for this area?

Another quote: “Theodore Berger, down at the University of Southern California. Way back in 2011, he unveiled a kind of artificial, memory-forming hippocampus for rats. The memories encoded in that device can be accessed by the organic rat brain; they can also be ported to other rats.”

But would it feel like real memories? If you encoded the whole Chinese alphabet and some grammar rules into this structure, would you be able to speak Chinese after the surgery?

Mormyrids are said to be able to communicate with each other when placed in two different fishbowls only connected with a metal wire. So their brain probably has a specific area dedicated to receiving electric information directly (humans need to use sound and light as a medium for information from one mind to another). While the brains of rats, humans and other species are flexible enough to adapt to a new way of receiving info, what if it was possible to design a new brain structure similar to the process used to create the artificial hippocampus for rats? Wouldn’t that make the whole thing even more efficient?

The Extended Mind Theory says that our smartphones could be said to be part of our minds, but if there could be a direct link between a computer and a brain, could it ever become more than just an exchange of information? If such a connection could make you think and feel something new, it would really be the fusion of biology and technology. And these days, when 3D-printing has moved on to tissues, so-called bio-printing, what if someone could experiment with neurons, designing and printing out small structures of brain cells first, and connect them to a normal brain to learn what different structures represented? That way, it would eventually be possible to print out a new kind of brain that we could connect to, better suited for the modern world than our old brains, which are still in many ways in the stone age.

(Off topic, but you have probably read the short story The Lotus Eaters, by Stanley G. Weinbaum. Even if the ESP-powers are fictional, the consequences of such an existence seem probable)

Speaking solely for myself, the depressing feeling I get from reading Peter’s work comes from the scientifically plausible underpinnings of it. Sure, Lovecraftian Elder Ones are creepy, but I can easily dismiss those as fiction. The idea that anyone could be turned into a psychopath just by shutting down the right parts of their brain, or the possibility of intelligent life that lacks consciousness? For me, just as creepy, and not so easily dismissed.

Off-Topic (Apologies, but I didn’t know quite where to fit this):

Remember the Sci-Fi Corridors film study that I could have sworn Peter referenced on the ‘Crawl some years back (although Google fails me in my attempt to pinpoint where)? It may have been on the old pages, or I could simply be mistaken.

This piece gives analog control panels in science fiction media the same treatment. Some folks might get a kick, considering Peter’s recent pop-culture slumming. I’m pretty sure I can remember a conversation or two about the late 70s CRTs on the Nostromo, as well as general human interface speculation here on the ‘Crawl.

The idea that consciousness is just a property of extensive neural networks… so woo.

It’s a complex issue, and I’d not be surprised if a bunch of scientists in the field were just completely wrong for reasons of.. feels, again.

I wonder what people from the field who did not sign that declaration think about the issue.

‘Declaration of rights’. Always funny. Okay-who’s gonna provide the stuff or enforce them? That’s what matters equally as much as all declarations..

It gets better. Anecdotally, apparently the induced psychopathy feels great. You just don’t give a fuck, it’s like being always a little drunk but without the cognitive impairment. Psychopath bloggers generally tend to agree – life feels good to them.

Can you share where I can read some of those anecdotes on induced psychopathy?

Never mind, I answered my own question with a little googling.

http://chronicle.com/article/The-Psychopath-Makeover/135160/