Spock the Impaler: A Belated Retrospective on Vulcan Ethics.

When I first wrote these words, the Internet was alive with the death of Leonard Nimoy. I couldn’t post them here, because Nowa Fantastyka got them first (or at least, an abridged version thereof), and there were exclusivity windows to consider. As I revisit these words, though, Nimoy remains dead, and the implications of his legacy haven’t gone anywhere. So this is still as good a time as any to argue— in English, this time— that any truly ethical society will inevitably endorse the killing of innocent people.

Bear with me.

As you know, Bob, Nimoy’s defining role was that of Star Trek’s Mr. Spock, the logical Vulcan who would never let emotion interfere with the making of hard choices. This tended to get him into trouble with Leonard McCoy, Trek’s resident humanist. “If killing five saves ten it’s a bargain,” the doctor sneered once, in the face of Spock’s dispassionate suggestion that hundreds of colonists might have to be sacrificed to prevent the spread of a galaxy-threatening neuroparasite. “Is that your simple logic?”

The logic was simple, and unassailable, but we were obviously supposed to reject it anyway. (Sure enough, that brutal tradeoff had been avoided by the end of the episode[1], in deference to a TV audience with no stomach for downbeat endings.) Apparently, though, it was easier to swallow 16 years later, when The Wrath of Khan rephrased it as “The needs of the many outweigh the needs of the few”. That time it really caught on, went from catch-phrase to cliché in under a week. It’s the second-most-famous Spock quote ever. It’s so comforting, this paean to the Greater Good. Of course, it hardly ever happens (here in the real world, the needs of the few almost universally prevail over those of the many), but who doesn’t at least pay lip-service to the principle?

Most of us, apparently:

“…progress isn’t directly worth the life of a single person. Indirectly, fine. You can be Joseph Stalin as long as you don’t mean to kill anyone. Bomb a dam in a third world shit-hole on which a hundred thousand people depend for water and a thousand kids die of thirst but it wasn’t intentional, right? Philip Morris killed more people than Mao but they’re still in the Chamber of Commerce. Nobody meant for all those people to die drowning in their own blood and even after the Surgeon General told them the inside scoop, they weren’t sure it caused lung cancer.

“Compare that to the risk calculus in medical research. If I kill one person in ten thousand I’m shut down, even if I’m working on something that will save millions of lives. I can’t kill a hundred people to cure cancer, but a million will die from the disease I could have learned to defeat.”

I’ve stolen this bit of dialog, with permission, from an aspiring novelist who wishes to remain anonymous for the time being. (I occasionally mentor such folks, to supplement my fantastically lucrative gig as a midlist science fiction author.) The character speaking those words is a classic asshole: arrogant, contemptuous of his colleagues, lacking any shred of empathy.

And yet, he has a point.

He’s far from the first person to make it. The idea of the chess sacrifice, the relative value of lives weighed one against another for some greater good, is as old as Humanity itself (even older, given some of the more altruistic examples of kin selection that manifest across the species spectrum). It’s a recurrent theme even in my own fiction: Starfish sacrificed several to save a continent, Maelstrom sacrificed millions to save a world (not very successfully, as it turns out). Critics have referred to the person who made those calls as your typical cold-blooded bureaucrat, but I always regarded her as heroic: willing to make the tough calls, to do what was necessary to save the world (or at least, increase the odds that it could be saved). Willing to put Spock’s aphorism into action when there is no third alternative.

And yet I don’t know if I’ve ever seen The Needs of the Many phrased quite so starkly as in that yet-to-be-published snippet of fiction a few paragraphs back.

Perhaps that’s because it’s not really fiction. Tobacco killed an estimated 100 million throughout the 20th Century, and— while society has been able to rouse itself for the occasional class-action lawsuit— nobody’s ever been charged with Murder by Cigarette, much less convicted. But if your struggle to cure lung cancer involves experiments that you know will prove fatal to some of your subjects, you’re a serial killer. What kind of society demonizes those who’d kill the Few to save the Many, while exempting those who kill the Many for no better reason than a profit margin? Doesn’t Spock’s aphorism demand that people get away with murder, so long as it’s for the greater good?

You’re not buying it, are you? It just seems wrong.

I recently hashed this out with Dave Nickle over beers and bourbons. (Dave is good for hashing things out with; that’s one of the things that make him such an outstanding writer.) He didn’t buy it either, although he struggled to explain why. For one thing, he argued, Big Tobacco isn’t forcing people to put those cancer sticks in their mouths; people choose for themselves to take that risk. But that claim gets a bit iffy when you remember that the industry deliberately tweaked nicotine levels in their product for maximum addictive effect; they did their level best to subvert voluntary choice with irresistible craving.

Okay, Dave argued, how about this: Big Tobacco isn’t trying to kill anyone— they just want to sell cigarettes, and collateral damage is just an unfortunate side effect. “Your researcher, on the other hand, would be gathering a group of people— either forcibly or through deception— and directly administering deadly procedures with the sure knowledge that one or more of those people would die, and their deaths were a necessary part of the research. That’s kind of premeditated, and very direct. It is a more consciously murderous thing to do than is selling tobacco to the ignorant. Hence, we regard it as more monstrous.”

And yet, our researchers aren’t trying to kill people any more than the tobacco industry is; their goal is to cure cancer, even though they recognize the inevitability of collateral damage as, yup, just an unfortunate side effect. To give Dave credit, he recognized this, and characterized his own argument as sophistry: “but it’s the kind of sophistry in which we all engage to get ourselves through the night”. In contrast, the “Josef Mengele stuff: that shit’s alien.”

I think he’s onto something there, with his observation that the medical side of the equation is more “direct”, more “alien”. The subjective strangeness of a thing, the number of steps it takes to get from A to B, are not logically relevant (you end up at B in both cases, after all). But they matter, somehow. Down in the gut, they make all the difference.

I think it all comes down to trolley paradoxes.

You remember those, of course. The classic example involves two scenarios, each involving a runaway trolley headed for a washed-out bridge. In one scenario, its passengers can only be saved by rerouting it to another track—where it will kill an unfortunate lineman. In the other scenario, the passengers can only be saved by pushing a fat person onto the track in front of the oncoming runaway, crushing the person but stopping the train.

Ethically, the scenarios are identical: kill one, save many. But faced with these hypothetical choices, people’s responses are tellingly different. Most say it would be right to reroute the train, but not to push the fat person to their death— which suggests that such “moral” choices reflect little more than squeamishness about getting one’s hands dirty. Reroute the train, yes— so long as I don’t have to be there when it hits someone. Let my product kill millions— but don’t put me in the same room with them when they check out. Let me act, but only if I don’t have to see the consequences of my action.

Morality isn’t ethics, isn’t logic. Morality is cowardice— and while Star Trek can indulge The Needs of the Many with an unending supply of sacrificial red shirts, here in the real world that cowardice reduces Spock’s “axiomatic” wisdom to a meaningless platitude.

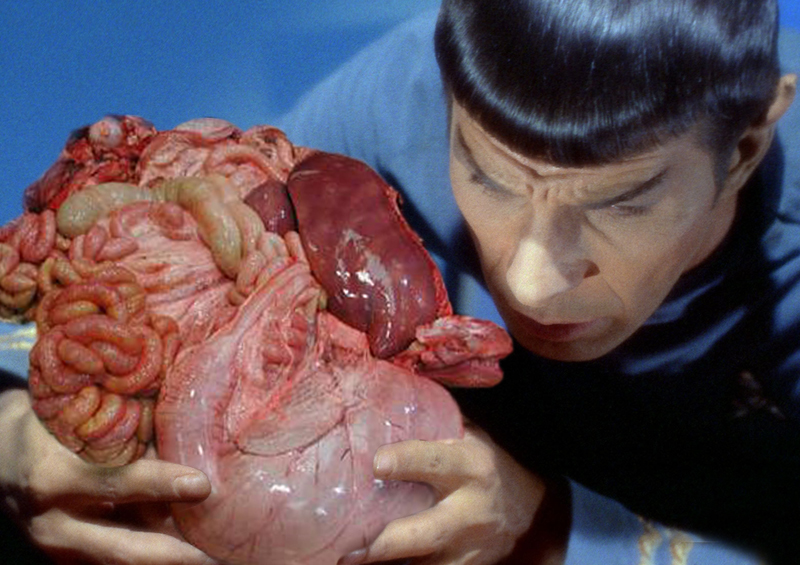

Trolley paradoxes can take many forms (though all tend to return similar results). I’m going to leave you with one of my favorites. A surgeon has five patients, all in dire and immediate need of transplants— and a sixth, an unconnected out-of-towner who’s dropped in unexpectedly with a broken arm and enough healthy compatible organs to save everyone else on the roster.

The needs of the many outweigh the needs of the few. Everyone knows that much. Why, look: Spock’s already started cutting.

What about you?

[1] “Operation: Annihilate!”, by Steven W. Carabatsos. In case you were wondering.

Nicely reasoned, Peter, and very thought-provoking. I love that you hashed it out with David Nickle, too, just to make the argument even more air-tight.

Maybe there’s some variation where the executives of the cigarette companies have to provide the experimental subjects?

The key point is self-sacrifice. We have no problem with the needs of the many when it’s the one who voluntarily and heroically jumps on the grenade. Spock saves the ship by voluntarily walking into the reactor chamber. Tobacco users voluntarily put the cancer sticks in their mouths; most of the legal wrangling is about how well informed they were and what information the sellers truthfully knew to give. And healthy people donate organs to strangers daily.

Medical trials: People do die in them, and people do volunteer to participate, don’t they? I assume there are disclaimers and liability waivers for the dangerous ones, and the truly dangerous and destructive ones get done on animal models because that’s what they’re for. Is medical science really being held back because doctors can’t kidnap fair maidens off the street for vivisection?

Start asking fat men if they’d jump in front of trolleys, we might have to frame that question tactfully, though it might improve the morale of the morbidly obese…

(Peter, sorry if I’m coming off as pedantic, but I’ve seen the same typo a few times already, so I think maybe you just misremembered the spelling: it’s “Nowa FantasTyka”, pronounced like, I don’t know, French “fantastique” + “ah”. Pedant out.)

Perhaps I am just paranoid, or maybe I’ve seen one too many of those movies about people who get lost in Appalachia and wind up feeding some tribe of mineshaft-dwelling albino mutants. (Now that’s “Needs of the Many”!) Or perhaps I impute far more logic and far less squeamishness to medical personnel than television programming would warrant. Maybe I just read James Gunn’s The Immortals once too often, and even watched the abominable network-television adaptation, to boot. Maybe it was Larry Niven’s “The Jigsaw Man” that was the last straw.

However, whatever the causes, when I am an unconnected out-of-towner, I am damned cautious about avoiding the ER. One might wonder about how the world would work if people knew that walking into a hospital was like picking up a carton of Marlboros, “you pays your money and you takes your chances”, but in the one case you might get disassembled under anesthesia and in the other it’s vaguely possible you might die of old age. People would have a different risk calculus, if it was a coin-toss as to whether they’d be a donor or a recipient if they set foot on hospital grounds. Not much of a point for me to make here, other than that if metaphorically fat people knew life in general was a trolley paradox, metaphorically fat people might tend to go on an allegorical diet and avoid the rhetorical rail lines because there’s just no telling what people might ask of them, and not take “no” for an answer.

For now, at least, the whole medical industry is relying on the notion that it’s far more about caring than about logic, and it might not last very long if it was the other way around.

This is a super interesting question. I think it might be the question I’m most interested in when writing fiction: the clash between deontological morality and utilitarian calculus. When do you pull the lever to divert the trolley?

I think our learned preference for the deontological ‘no, not that, not ever’ Kantian refusal exists for an adaptive reason (which of course won’t surprise you, and doesn’t mean it’s Right, at least for a lot of possible constructions of Right). I think it’s a social stabilizer we’ve built for three big reasons: to spread out psychological costs, to prevent outbursts of retaliation caused by selfish/self-protective agents, and to jam up mistaken moral calculus (including gamesmanship).

I’m gonna bullshit the idea of ‘system stability’ here, acknowledging that it probably needs to be looked at.

When we consciously choose to push the lever, sacrificing someone for a greater good, we find it very easy to declare victims and perpetrators. This fires our justice impulse and we get all tangled up arguing about who should be punished, what else could’ve been done, and where recrimination should fall. It’s better for system stability to be able to say, oh no, the trolley tragically killed five people, as a result of unattributed and unanticipated trolley safety issues. Don’t get mad! It’s just how it is.

Nobody wants to be the person who’s on the other track, the person who gets levered. If you’re in this position, you’ll rationalize reasons you shouldn’t be sacrificed, conjuring up tactical morality to protect yourself. You’ll fight the choice. Fights are costly. It’s better for system stability to avoid these fights by saying ‘those losses were a result of outside forces, not a leadership/powerholder decision.’

And maybe most importantly, we don’t always have good models of the utilitarian decision. We might have bad information on where the trolley will go. We might not understand how the lever works. If we have a social principle saying ‘prefer deontology, NEVER LEVER,’ we sidestep this whole issue — and we evade sociopaths who want to feed bad info into the lever calculus for their own gain! A great example of this is ‘never torture’. We feel that if we permit torture under some circumstances, those circumstances will be expanded. So we prefer to set an unconditional no-torture rule.

In terrain where information is uncertain, players like to game information, and we only have shaky cause-effect maps, I think I see why people prefer Never Lever.

I dunno, I hope that’s interesting!

edit: Entity above me brings up an interesting point. Deontological rules are much easier to remember. It may be that societies in which individuals can count on deontological principles are less cognitively taxing, making them efficient. The alternative is constantly gaming out everyone’s utility, which is exhausting. And maybe that’s part of why we admire individual self-sacrifice: we feel people are embracing a deontological principle to put the community’s welfare above their own.

Maybe the reason the doctor example strikes people as more alien than the cigarette example, despite it being functionally the same thing, is as simple as protective moral legislating. I am never likely to be in a position where I can cure a disease by sacrificing a few dozen people (or hundreds, if it’s a really big disease). I AM likely to be in a situation where my actions to improve my own life materially and negatively affect many distant people. I’m in such situations all the time, every time I buy from WalMart and so contribute money to people running sweatshops instead of paying a little more and not having anyone risk losing an arm to make my shirt. I don’t like to think about it, but it happens all the time. So if I see somebody else in a situation like that, trying to better themselves and not really caring what the consequences are, I’m more inclined to forgive them because I see myself in them (unless they either go too far, or are too in-your-face cold-blooded about it, like calculating that it is cheaper to pay the lawsuits for dead victims than to fix the problem, and in doing so force me to see the unpleasant similarities in myself). But I don’t see myself in the doctor’s position… I might LIKE to be that talented, but I’m not. And it’s unlikely I’d be in a position to shove a guy onto the tracks to stop the runaway train (even if I wound up in that unlikely situation, let’s face it, I’d probably freeze up), so I’m free to judge that as wrong if others do it.

And of course, there’s a good dose of “as long as I don’t see them every day, they don’t count” involved: hurting 5 people you know to improve the lives of a million you don’t is judged worse than helping five people you know at the expense of millions, for the usual evolutionary reasons.

And, I’m not really sure I’d want a person who will cut up a stranger to save five people working as a doctor because of all the knock-on effects: fewer people would show up in the hospital in the first place because they’d fear they’d get chosen as dispensable (by a fallible human). Not to mention that this is a guy who might decide to kill me and steal my kidney for the greater good whether I’m in a hospital or not, so for purely selfish reasons I’d never want to associate with them at all, nor would anyone who might train them as a doctor or have to deal with their consequences. It’s kind of the Tragedy of the Common Serial Killer. We can’t have these moral “needs of the many” doctors offing people left and right for the greater good, because all of our self-interests would individually be against it.

I love that I hashed it out because it meant beers. Also I don’t get to hang out with Dave as much as I’d like to.

Arrrgh. Sorry. Fixed. I actually know the spelling. It’s just a typo I keep making.

Also, in situations like this, you can see the adaptive utility of sentiments like “All part of God’s Great Plan” and “Not the place of the clay to question the potter.”

(The ceramic potter, of course, not the Harry one.)

This makes me wonder: has anyone ever done a trolley study on doctors, who have to make such decisions in real life way more often than the rest of us? As I understand it, even people who come up with the right solution to a trolley problem do so after a measurable delay: they have to override their gut response with a considered one, and that slows them down. But maybe performance improves with practice, and they do say that medical doctors are especially susceptible to God Complexes…

It is an interesting question and I’m not saying it doesn’t sometimes, perhaps often, apply to the real world.

But there is a problem with the premise in that it discounts the occurrence of false motives. Investigations of human experimentation sometimes found no scientific basis for what was being done. This is not to say that Mengele didn’t happen upon some interesting discoveries {but see broken clock analogy}, but what about all the work done under the guise of Progress that was simply a few sadists getting their rocks off? While not medical, what about HAARP simply being a con artist’s path to getting rich on the taxpayer’s tab? What about all the stuff done in the name of one God or other or just for the Almighty Dollar? What about rewarding banks for screwing up, too big to fail, corporate welfare? You perhaps prevent some kind of economic chaos but reward the behavior that led to it.

The key there: If a person wants something and has the means to get it, they will invent justifications in order to assure themselves and others that there’s some Progress to be had, whether there actually is in a particular case or not.

I think Seth makes good points about uncertainty, upon which I would like to expand:

Not only are situations almost never so clearly binary as they are presented in the trolley problem, not only are you unlikely to encounter situations where you have both enough time to comprehend a stark moral choice clearly and yet so little time that searching for the traditional “third option” is not plausible, but people screw up, consequences are unpredictable, and people lie.

A person who encounters the trolley problem in real life WILL NOT BE CERTAIN about the situation. Your hypothetical Doctor Spock (BTW – I see what you did there) is not in a position to be sure that he will be saving five lives at the cost of one – the operations could fail, the donor could have hidden issues (happened on Scrubs once), the donor’s relatives could swear bloody revenge and kill all five donees, the Dr. Spock, all the scrub nurses, and their little dogs too. In real life, you can’t really ever be sure you are doing good.

So the calculation isn’t “kill one to save five,” it’s “almost surely kill one, and thus be a murderer, to save up to five. Maybe.” How confident are you?

Spock was, in his own way, cocky as hell. He confidently predicted the future and was willing to act on those predictions despite being shown wrong about them quite often. Most of us aren’t that confident, with good reason. Folks with the courage of their convictions are often very scary.

And that’s not even getting into the lying part. What if some tech in the lab who knows you and hates her weird uncle Stanley faked those compatibility tests? What if the “researcher” asking you questions about trolleys is really looking for an excuse to put a big red “psychopath” stamp on your permanent record? What if some political muckamuck back in Starfleet council is about to suffer politically due to (stuff) at colony X and has coerced a science team to report that relatively benign symbiote infestation Y is actually apocalyptically dangerous parasite infestation Z instead?

Things are clearer in fake scenarios because we control the options. Things are clearer in most fiction and news because ambiguity in the narrative makes folks squirm. But in a real life trolley problem most folks wouldn’t pull the lever because they didn’t want to possibly murder someone on a probably imperfect understanding of the situation, those who were confident enough to pull the lever would probably immediately sprint towards the potential victim screaming, and almost no-one would be confident enough to make themselves an unambiguous murderer (or attempted murderer) by pushing the fat person on their best guess on what would stop a train.

I’d say the general uncomfortableness with it comes with the issue of incomplete knowledge – how do you know that the needs of the many are outweighing the needs of the few here? How can you trust that the person making the call isn’t a bad actor?

If Saddam Hussein really was behind 9/11 and has WMDs and is about to use them, then the needs of the 12 million who live in New York City outweigh the needs of the 1.5 million who will die in our war and next decade of occupation. Wait, what do you mean Bush is lying his ass off and using this to fulfill unresolved daddy issues and pay off his cronies in war profiteering while redefining the executive like his people have wanted to do since Nixon?

I think a large portion of the difference in our intuitions about the two trolley scenarios comes down to agency detection. Just as we get wound up about statistically negligible terrorist threats because they evince a malevolent, potentially permanent agent, so we do not want to be perceived as such an agent ourselves. Thus we have evolved to be hugely resistant to actions that signal our willingness to actively harm others, even when a formal moral calculus might justify our doing so.

The question of the surgeon and the patients in need of transplants is an interesting one.

It would seem at first glance that the utilitarian would be morally obligated to sacrifice the one to save the five. However, I do think there is a way for the utilitarian to get away with saying no to killing the one.

In the real world, there is a high chance that the killing of the individual, and the motives behind it, will become known to the general public. Keeping secrets can be very hard. This of course could have severe consequences: the killing of an innocent by an authority, an authority employed to resolve harm rather than intentionally inflict it, would doubtless stir horror in many, undermine faith in the medical establishment, and lead to distrust in doctors; the more paranoid might even refuse to enter a hospital, even in an emergency. Presumably the surgeon would wind up in prison for quite some time too. These cumulative downstream consequences, while likely small for any given individual (a slight feeling of disgust, say), might result in massive total suffering when millions of people are involved. Quite likely, in my mind, greater than that of the five who would otherwise die.

Of course, you could alter the scenario, so that there was somehow no way of anyone finding out, and under those circumstances a classical utilitarian would have to agree to the rightness of killing the one. However, I suppose someone who agrees with the ethics could nonetheless admit that they wouldn’t have the will power to act in accordance with their beliefs, in such a situation. I certainly wouldn’t, it would all feel too frightening, and wrong too, even if it is in fact right.

Peter Watts,

Do trolley problems actually turn up in real life? I can only think of emergency triage, off the top of my head, as a similar situation…

I find it interesting, then, that jumping on a grenade does have a built-in automatic moral calculus fast enough to actually carry out the action. In case anyone thought it only happens in movies, Wikipedia records over a dozen cases: https://en.wikipedia.org/wiki/Falling_on_a_grenade

I’d be curious to see the difference in results between the “shove a fat man” trolley problem and a variant of same in which the respondent *is* the fat man.

In answer to the question posed, I’m not cutting yet. I *am*, though, asking the unconnected out-of-towner whether he’s willing to die as an organ donor in order to save five strangers’ lives, because my ethics encompass killing someone who isn’t willing to die only within a relatively small and extremely well-defined set of cases, of which this is not one. (My opinion on capital punishment is extensive, well-reasoned, and probably dull enough to suffice as a method of same.)

And, sure, I know the stranger’s answer is going to be “no” within a negligible margin of error, but asking costs nothing (in this strange, chilly Platonic plane of analytical ethics), so I may as well on the off-chance he might surprise me.

Finally, since it’s vaguely relevant: Blindsight is, IIRC, set ca. 40 years after the Rifters trilogy, and one of the chapter quotes is credited to Ken Lubin. Am I correct in inferring from these facts that Blindsight and the Rifters trilogy are set in the same timeline? As far as I remember, there’s nothing in the text of either to confirm one way or the other, and I haven’t had any luck asking Google to answer this question.

And then there’s this doctor, and notice how fast and hard the sky is falling on him. I suspect he has seriously slight (probably nil) support in the medical community, the government is on him for the fraud, people died and it was arguably on account of this guy loading them up with poisons that were medically totally unnecessary… to say nothing of the State and all of the outraged kinfolk.

I suppose this might be a good example why you will not likely find a doctor who sacrifices one patient to potentially save others, at least not at the extreme of seeing a broken arm and then dissecting them.

@seth: I like the way you think. Black-and-white choices are fast choices and easy choices, which can cause all sorts of problems if misapplied, but I suspect we continue to make them, because if they were well-reasoned before they were passed out by the Lawgivers in concise formats, they should be widely applicable and will probably be frequently used to solve problems in a generally beneficial way. If more people benefit than are harmed, people will keep doing it. Of course, this doesn’t mean we won’t have a need to discuss them in detail, looking for the exceptions to the rules. Maybe we don’t need to discuss them at Ayn Rand novel sorts of lengths, but that might be because the more abstruse/obscure the exception, the less likely it’s going to be encountered.

@Nestor: I am guessing the trolley problems show up in real life mostly as the sort of test seen in “Solomon’s Choice”, where two women show up in court each claiming to be the mother of an infant. Solomon finally suggests that the infant should be cut in half and one woman agrees, while the other weeps piteously. Solomon wisely decides that the woman who actually cares if the child lives or dies is the one who should be responsible for it.

I might be getting outside of the concept here, but aren’t a lot of divorce settlements, especially involving custody, fairly close to trolley problems? The element of peril is there, the element of a failure to produce a solution involving sacrifice causing greater damage than inaction is there, although the element of timeliness and hurry is missing.

Well, doctors do have that oath thing. “Do no harm.” So it’s pretty deep-seated for doctors not to make those sorts of trade-offs (at least in theory).

In the long run, we’re all dead. Cigarettes do kill a lot of people … eventually. But a lot of people smoke casually and infrequently, so are much less likely to suffer from its health effects. And once you are in your 60s or so, even if you didn’t get the lung cancer, the odds were pretty high that something nasty was going to start affecting you.

But if you do think of the tobacco industry in those terms, don’t you have to start thinking of everything that way? Automobiles? Sugar? Long-distance running? I guess that taking a life (for whatever purpose) is a lot different than offering someone a risk-tradeoff choice, a choice that isn’t helped by people’s innate inability to understand risk very well, especially over the long term.

Mister_DK,

That’s exactly the example I was thinking of.

Start from a bad premise, generate a false dichotomy and suddenly There Is No Alternative and the Hard Men must make their Hard Decision.

Which turns out to be exactly what they wanted to do anyway.

What if we modify your last Trolley paradox a little, and give society enough time to find a suitable donor? Wouldn’t convicts make sense for that? Let’s start with the violent ones, but given the demand, wouldn’t we sooner or later end up at convicted midlist SF-authors? And if we add in the premise that among the five there is political royalty (presumably carrying a high utility value for the masses that vote for them), say Harper (I don’t know anything about Canadian politics, but since you are a fan of him…), wouldn’t we expect utilitarian midlist SF-authors to throw themselves on their swords happily for an opportunity like that? I personally believe that you will slippery-slope towards a tyranny of the many and then just a tyranny of the elite as even more of the softer values get included in the utilitarian evaluation function. It’s late and I haven’t thought it through, but I think you would also run into problems with Arrow’s impossibility theorem:

https://en.wikipedia.org/wiki/Arrow's_impossibility_theorem

So let’s build a computer to make the utilitarian choices for us. I think Asimov explored something somewhat similar in I, Robot.

As an FYI, there is a possibility (albeit labeled as conspiracy) that Churchill had to make the utilitarian call with regard to Enigma and the Coventry Blitz:

http://www.bbc.com/news/uk-11486219

https://en.wikipedia.org/wiki/Coventry_Blitz

And the movie “Unthinkable” presents a similar problem in the frame of a captured terrorist, interrogators personifying different philosophical views, and the possibility of hidden nuclear bombs in the city.

How badly would you torture a human being for information regarding _potential_ nuclear weapons in your city?

For the re-routing paradox I think that you get a sense of “buying time”: maybe the lineman is a Denzel Washington-movie character and, depending on how you frame the paradox, you might feel in your gut that the lineman has a “fighting chance”.

In the end I think I’ll go with Kant, at least until the utilitarian evaluation function spits out something that can’t be ignored.

Every “needs of the many versus the needs of the few” problem I’ve come across assumes that the final goal is to have as many living humans as possible, but how do I personally benefit from that as a lone human living in the modern world?

For example, in the last ethical quandary you’ve presented, the subject of the thought experiment has no particular reason to choose to save the individual or the group other than the number of people left alive in the end. Without any reason to choose one action over another, the decision of who to save is completely arbitrary. It’s hard to care about a game that you have no stake in.

At the end of the day, the theoretical people that have to make ethical decisions in these scenarios are individuals with different motivations other than ensuring that the human population is as large as possible. As individuals, we need to decide what our goals are before we can answer the question of what we should do in a given situation.

Second read take-away: Yeah, it’s about what we have been taught, what makes us feel better about ourselves, that makes these situations “good” or “bad,” at least when we can leave out scenarios involving motives other than “make it better” like the Iraq invasion one described above. Lots of things are backwards, the abhorrent acceptable and the pragmatic not so due to factors besides the actual results.

On the flip side, this may be kind of happening.

Petition FDA to stop drug testing on mentally ill, homeless.

Peter D: This makes me wonder: has anyone ever done a trolley study on doctors, who have to make such decisions in real life way more often than the rest of us?

Speaking from my experience when training to be a nurse, then yes we are run through exercises like this to teach us about ethical behaviours.

The rules are simple: Do no harm, Do good (Non-maleficence & beneficence).

I think this is a mostly Western outlook on morality, though.

This might seem unrelated at first, but bear with me.

One of the things that struck me most when I toured South East Asia was the food. Deliciousness aside, the most interesting thing was their attitude towards the animals that it came from, and the reactions of my fellow travellers.

While in the West we have no problem consuming vast amounts of meat, we don’t want it to look like anything that was once alive. It should come plastic-wrapped, geometrical, preferably boneless and necessarily sans eyes. This is because most of the people in our part of the world don’t want to confront the consequences of their indulgences.

“Yes, I want to eat a steak, but I don’t want to acknowledge the fact that a cow had to die for it.”

This is the reason, I think, that many vegan campaigners incorporate videos of standard animal slaughter into their often successful propaganda. In my country (Israel), for instance, one campaign was made up of vegan protesters entering butcher shops or the meat departments in supermarkets carrying living baby lambs in their arms and asking the butcher for lambchops or something. This shocked people, despite the fact that the meat they were buying obviously came from a similar animal.

Where am I going with this? It seems to me that in the trolley paradox, most (Western?) people would rather switch rails instead of throwing the fat man on the tracks because it would make the necessary death seem less personal. Personally, I would rather throw the fat man in front of the trolley because it would allow me to assume more personal responsibility for the life I am about to take, as well as allow the fat man to protest and perhaps ready himself for death, whereas the people who would die should I switch the rails would get no such opportunity. It seems more ethical to me, just as it seems more moral to me to acknowledge the killing of the animal you are about to eat.

Another good example of this type of morality, again exemplified by the clash between Asian sensibilities and Western ones, is in the judge’s speech in the film Return to Paradise, in which he explains why he thinks it is more moral to execute a man for a minor drug offence than to let him live, precisely because the needs of the many outweigh the needs of the few. I took this off a film transcript site so I apologize for the all caps thing:

“WE KNOW YOU WESTERNERS DON’T UNDERSTAND OUR ATTITUDE TOWARD DRUGS, BUT WE DON’T UNDERSTAND YOURS.

YOUR CITIES ARE DECAYING.

THE SOULS OF YOUR YOUNG PEOPLE ARE STOLEN FROM YOU.

OUR LAWS MAY SEEM HARSH, BUT OUR STREETS REMAIN SAFE, AND OUR YOUTH IS PROTECTED.

SO WHEN AN AMERICAN JOURNALIST TRIES TO CRITICIZE OUR JUDICIAL SYSTEM…”

The judge, in this case, has no problem looking the cow in the eyes and killing it anyway, because he knows he needs the meat. He just has a stronger stomach.

That’s not a problem with the premise, it’s a problem with people— and you can apply it to anything. “Med school shouldn’t have final exams, because some med students will cheat.” That’s an indictment of cheaters, not exams.

Stark simplicity is a hallmark of thought experiments; it’s designed not to be realistic, but to strip away extraneous details and get to the philosophical heart of the issue (it’s unlikely that even a really fat person would be big enough to stop a runaway train, but you accept the premise in order to deny the thought-experimenter the option of nobly jumping off the bridge herself). In that sense it’s a feature, not a bug; it lets you get to the heart of the issue.

But if you’re looking for messy real-world applications, there’s the one I offered up near the top of the post: medical experimentation vs. corporate greed, curing cancer vs. causing it. There’s lots of noise, lots of uncertainty, but nobody’s going to deny that millions more died from cancer than would die in even the most draconian research program designed to end cancer. There’s no such thing as a treatment N of one hundred million (at least, not when your subjects are large mammals). Why then don’t we let medical researchers kill a few to save many, when we let corporations kill millions for profit? The answer seems to be, simple squeamishness; the researcher is more directly hands-on in her killing. And that’s not a difference that should make a difference, logically.

In terms of confidence, there are expected payoff functions that quantify that stuff statistically. You’ll never be able to predict with certainty whether Frank or Francesca are going to die, but you can estimate with a high level of confidence what your overall mortality rate is going to be.

Thanks for making me look up “glyptodont”, by the way.

Again, see above. And again, invoking “bad actors” is something that can be legitimately done with regard to every human activity under the sun— “scientists might fake their results, so we should stop doing science”— and yet such invocations only seem to occur in cases where people really, viscerally don’t like the take-home message. It kinda proves the point; we don’t like the news, so we’ll bend over backwards coming up with reasons why it can’t be true, even when those reasons would be laughed out of court if applied to anything else.

This is a really good point, and it’s a perfect case of needing-the-person-to-be-really-fat-so-you-can’t-jump-off-the-bridge-yourself. As you say, you have to assume for the sake of the thought experiment that there are no social downstream consequences— but even if there are, that only changes one particular equation. It doesn’t change the underlying algebraic rules. You’ve reprogrammed the Kobayashi Maru so that you can win this encounter, but you haven’t solved the dilemma; you’ve only avoided it.

This, I think, is the heart of the issue.

The whole reason the fat man exists is to prevent the respondent from being him. If you can sacrifice yourself, you’re not forced to make the nastier choice— and it’s the nastier choice that’s of interest here.

I’d say they’re set in different forks of a timeline that split prior to the events in Starfish. At the end of the trilogy, N’Am was a devastated depopulated ruin and life itself was being rewritten at the molecular level; even if someone came in and hit a magical reset button on that second point, βehemoth had such massive global impacts that it’s inconceivable to me that no echoes would remain visible in Blindsight. OTOH, both stories have a character named Ken Lubin with a military background.

So I’m saying Blindopraxia occurs in a timeline where βehemoth never got out. But which didn’t split until Lubin had made his career choices.

Well, yeah, except for the running thing, which actually increases health and lifespan. And sugar is not nearly so addictive as nicotine, so the argument that free choice has been subverted doesn’t really apply. And automobiles, while certainly convenient, don’t force people to drive them— so again, people have a choice.

So actually, on second thought, I guess No. You don’t have to start thinking that way about everything.

Um, I think you might not be entirely clear on what thought experiments are designed to do. They’re deliberately, artificially simplistic, to get to the heart of philosophical issues. They’re not intended to be accurate reflections of reality, but rather a kind of Platonic idealization thereof.

In this particular case, I cited the trolley paradox not prescriptively, but because its results are better explained by squeamishness than by consistent ethics. That squeamishness is fundamentally at odds with “The needs of the many…”, as evidenced by our hypocritical stance on the cancer issue. That was my point. I’m not advocating that we toss innocent girls out of airlocks.

Hey, who you describing as “nonviolent”? Don’t you know I was high on meth that time at the border, because I was immune to pepper spray and picked up a woman by the throat and hurled her across the tarmac? Nonviolent, my ass.

If I had my druthers, though, I’d probably start with SJ-hacking trolls who base their campaigns of harassment and intimidation on the grounds of Open Season on The Privileged, only to turn out to be Paris Hilton when the sock comes off the hand. With some literal skeletons in the closet, as it turns out. I might actually volunteer, if I could take her with me. Harper, though, not so much.

Thanks for citing that Arrow’s Impossibility Theorem, btw. I hadn’t heard of that.

You know, I’m embarrassed to admit this never occurred to me. But you’re right: the bottom line of all these ethical thought experiments is the maximization of human biomass. Which itself, on a planet with 7.2 billion people, is arguably an unethical stance.

The rules may be simple, but their implications may not be. What if the only way to Do Good to those ten people is to Do Harm to these two?

I really should have titled this post “The Ones Who Walk Into Omelas”…

That is an excellent point. I wonder if there are any cross-cultural trolley surveys out there…

That is brilliant!

To a point? I’ve also seen {many years ago} the claim that it can be dangerously addictive and cause health problems. Think the author compared it/called it anorexia for men. Lost a favorite prof this way.

Here is another sort of thought experiment on the case:

https://en.wikipedia.org/wiki/The_Case_of_the_Speluncean_Explorers

A legal philosophy puzzle published in 1949, it presents the case of four trapped explorers killing a fifth (chosen by a dice throw) to avoid starving to death, and shows five hypothetical judicial opinions on whether the four should be convicted of murder.

***(Peter Watts) Stark simplicity is a hallmark of thought experiments; it’s designed not to be realistic…it’s a feature, not a bug; it lets you get to the heart of the issue.***

Thought experiments are great in physics, where it is often hard to make a clean experiment, but the progression from individual behaviours to aggregate ones is fairly well understood, and there are rarely billions of dollars and lives riding on any particular outcome.

I am less convinced of their utility in ethics. I used to love them, admittedly – they put a neat perspective on things.

But again and again I see ethics based thought experiments used to justify atrocity. “If torturing a suspect could save New York from nuclear Armageddon it is obviously justified, so our policy of secretly torturing folks we’ve arrested for secret reasons is also obviously ok.” This is a serious argument used by actual politicians and spooks all the freaking time.

As George_Spiggott on MeFi said about this very entry (BTW this post of yours is linked on Mefi – I happen to have both sites bookmarked) “Anyone who says they’re going to kill a hundred people to save a million can be relied upon to achieve the first part.”

***(Peter Watts)But if you’re looking for messy real-world applications, there’s the one I offered up near the top of the post: medical experimentation vs. corporate greed, curing cancer vs. causing it.***

My counterargument is this – there have been quite a few actual real world instances of unethical medical experimentation, and as far as I am aware, most of them were at best marginally useful, and most were just horror shows. So, no, despite the fact that thought experiment Dr. Spock can sacrifice 100 test patients to cure 1,000,000, in real life it doesn’t seem to work.

***(Peter Watts)Why then don’t we let medical researchers kill a few to save many, when we let corporations kill millions for profit? The answer seems to be, simple squeamishness; the researcher is more directly hands-on in her killing.***

I’d argue that if a regular medical researcher unambiguously killed as many people as Philip Morris did in the name of profit they would suffer hugely. Philip Morris gets away with it because they are powerful, because the causation has wiggle room, and because diffusion of costs and responsibility is a core superpower of corporations.

BP is not evidence that as a society we should think it is ok to dump dirty oil on seabirds.

***(Peter Watts)In terms of confidence, there are expected payoff functions that quantify that stuff statistically. You’ll never be able to predict with certainty whether Frank or Francesca are going to die, but you can estimate with a high level of confidence what your overall mortality rate is going to be.***

But the statisticians can lie, so I can’t have confidence unless I spend the time and effort to verify. If the question is important (and these questions are life and death by definition), a statistician might have significant motivation to do so.

To sum up:

A. I am very suspicious of any argument that anyone needs to be sacrificed to save (N>1) others, unless the person who needs to be sacrificed is the person calling for the sacrifice. When this is called for in the real world, it very often means someone is trying to get away with murdering the sacrifice, not that someone is actually trying to save the others.

B. Researchers trying to test people on ethics problems are going to encounter their ingrained knee-jerk reactions. If a psych researcher told you “As part of this experiment I need you to give me your wallet” a lot of folks are going to hesitate, not because they logically think researchers are running cons out of the psych dept., but because that kind of question triggers automatic defenses. Asking someone to kill someone else, even in theory, is the same.

So you can’t really have starkly simple thought experiments about ethics, because while you can posit to the testee that they are in a morally clear situation, they are a human being with all the baggage implied, and a lot of that baggage involves defending themselves from folks trying to twist morality around for their own benefit.

OK, this got way too long. Hope it was interesting.

***(Peter Watts)Thanks for making me look up “glyptodont”, by the way.***

De nada.

By the way, we are … I mean glyptodonts are … extinct. I am not one.

Just to be clear. 😉

Quick Coda:

I have probably misunderstood your intent. You *can* have starkly simple thought experiments about morality if the moral agent in question is also part of the experiment.

So Doc Spock is a valid thought experiment, but the trolley problem as usually described is not. When the dilemma is presented to a real human they cannot be neatly severed from their moral universe. Just like you can’t Schrodinger a real cat by telling it it’s in a box with yadayada, you can’t do a “thought experiment” on morality by quizzing a real person.

The conclusion of Doc Spock (that most people are not utilitarians) seems perfectly obvious to me, and I have spent a couple pages explaining why I don’t think people are, and why they won’t think that way even when prompted by a chance to insert themselves into a hypothetical. Hope it wasn’t too much of a digression.

After running several scenarios and sleeping on it, here we go.

Assumptions: There is a cost associated with the transplant operations {time for recovery, financial burden on State/family/insurance co. and/or resources} and these vary depending on the organ and operations in question. They are ranked from 1, the most “expensive,” to 5, the least. Otherwise, things are equal. Each patient is approximately the same age and “value” to the state or would be were it not for their health problem. Also, the time to recover from a broken arm is less than any of the transplants.

For the Greater Good, we tend to the broken arm dude so that he heals quicker. We harvest the organs of 1 and 2 and transplant them into 3, 4, and 5. The State {regardless of system, I think. Back to work, whether that’s being a fascist or a commie or in between} is best served because we have minimized human downtime and recycled a pair of burdens.

“Live long and prosper…some of you.”

Re: Vulcan ethics. We have a few problems.

First, the back story is about embracing logic due to passions having been the culprit in Vulcan’s previous bloody history. As such, can we really trust that they are completely logical and not biased towards morality, albeit arrived at by a different path?

Next, of course, there’s what actually happened. Several people died in order to–on the surface–prevent the Klingons getting their hands on a weapon. The end result, though, was resurrecting Spock. Which resulted in Kirk shrugging it off by reversing the phrase in question.

In the real world, yes, things get much murkier. Torture and the ticking time bomb scenario mentioned above, for example. That was most likely one of the justifications, though instead the bar was lowered to any potential roadside IED. The question as to whether torture works, and even if sometimes it does, whether it is the best way to acquire intel from a purely pragmatic standpoint gets overlooked because anger is the real driving force and the IED question is the excuse.

Interestingly, one of the problems in US Army training Iraqi police was getting them to acknowledge that roadside bomb locations and times could actually be predicted and to let go of the “God’s will” notion of let it be, que sera, sera.

Governments are great at capitalizing on the wounded moose illusion {one of the most moanable moments, and there were many, in Skyfall was young Q connecting an acquired laptop to MI6’s network in a clearly underfunded workplace}, but have much better capabilities at times than they let on. Again, profit and power grabs trump reality, and so we get “More please and even if you don’t please” instead of honest debate.

I believe part of the reason the emotional reaction to the medical researcher is worse than Philip Morris is the role of expectations. We expect large corporations to be greedy and uncaring, but medical researchers are not supposed to be murderous.

“In terms of confidence, there are expected payoff functions that quantify that stuff statistically. You’ll never be able to predict with certainty whether Frank or Francesca are going to die, but you can estimate with a high level of confidence what your overall mortality rate is going to be.”

I don’t agree with that. Sure, now, with fifty years of data, we can predict lung cancer rates with some reasonable accuracy, but at the time these decisions were being made there was a lot of uncertainty. E.g., how do they incorporate the possibility that a nuclear war between the US and USSR is going to kill all the smokers before cancer becomes an issue?

Regarding the fat man trolley experiment, we did that at work recently. Interestingly, the two regular Star Trek watchers here responded with ‘neither of those choices, look for a third option.’ The one libertarian we have said, let those five people die ’cause it’s their own fault for being on the tracks while not paying attention to oncoming trains.

There is a point at which this all gets reduced to absurdity–complex ethics quandaries can be imagined, especially by extrapolating consequences unknown to the decision-maker at the time.

That becomes a lot of the issue: when talking about “kill one to save ten,” how certain are you of the “save ten” part? “Torture one to save thousands” is indeed reasonable… if you can draw a cause-and-effect line between Part A and Part B. However, “torture one to get information which may be part of a plan that may, in retrospect, turn out to have saved thousands” is too thin a line.

Regarding the original trolley: why can’t the person who knows about the problem leap onto the track him/herself, instead of causing the death of the line worker or the fat person? (Why is it a “fat person”? Why not “a movie star”? How much would the answers change?)

On the flip side, we are loath to apportion blame the same way. “Perform experimental surgery that kills 1% of patients with the hopes of saving thousands later” is considered murder; “create an ad campaign that encourages voluntary use of an addicting substance which may lead to health problems which will cause early death in .1% of users” is, again, a very thin line.

There’s a reason class action suits worked but there have been no murder trials–that kind of causality is provable at the level of civil court, but not at the “beyond reasonable doubt” level of criminal court.

Side thought: I have heard a theory that Vulcan “logic” as its highest virtue, is not English “logic,” but like “dharma,” includes a set of values intended to shape all life choices. How do you avoid unnecessary violence? Logic. Choose a spouse? Logic. Raise healthy, productive children? Logic. Pick a career? Logic. Evaluate potential choices with limited data? Logic. And so on. The ethics used to avoid their ancestors’ tendency to violence are grouped together under a single label, for which “logic” is the closest English approximation, but it is not as strictly objective as English “logic” tends to be.

If I’m a utilitarian, my goal is to maximize total utility. I believe that total utility would be maximized when everyone operates according to a first-do-no-harm moral logic. The reason I believe this is that, in practice, most applications of the utilitarian kill-few-save-many logic are mistaken, especially since the save-many step often comes much later and is highly uncertain. In practice, the kill-few-save-many logic is applied almost entirely during times of war, and in practice it is usually factually mistaken (i.e., more in fact were not saved than were killed). This of course is not always the case; there are times when killing the few to save the many may actually work out. But these are sufficiently few cases that it is worth the occasional short-term utility losses for the long-term utility gain, particularly since we are not gods and in general are unable to distinguish ahead of time the will-work-out from the won’t-work-out cases.

To take a hard case, even if you think that firebombing millions of German and Japanese civilians saved more lives than it took, it would still be worth letting the Germans and Japanese gas and murder for a few years longer by applying the first-do-no-harm logic, because the total utility — including all the many other wars where the kill-few-save-many logic was mistaken — is still improved by the universal application of the first-do-no-harm logic.

So if I’m a utilitarian, killing one to save five is fine if there are only five people in the universe. But in the real world, even if we are certain in this case that killing one will save five, there are still fewer people killed overall by adopting a first-do-no-harm logic and letting the five die for the sake of the many, many more others.

But of course I’m not a utilitarian. There are many other perfectly consistent moral logics that both make the world a better place, and make the individuals who practice them better people.

I think it’s more a matter of me not being able to stay focused on the pure thought experiment. I can’t avoid the urge to generalise it to the messy real world or, worse, imagine these arguments being used by those I don’t like to justify their doing things I think are bad.

OK, I’m going to play devil’s advocate for the tobacco company.

Philip Morris and similar companies employed, or generated employment for, millions of people worldwide. If they’d stopped selling tobacco products, those people would have been out of a job.

At least in Australia, unemployed people are thought to be four times more likely to commit suicide. There’s a bunch of other health issues associated with being unemployed and poor, which I believe is especially bad in the USA.

If a company closes down because they think that a product might be unsafe but aren’t sure, are they responsible for the higher death rate among the ex employees?

This is widely accepted: Multinationals provide jobs.

It is also a fallacy. Productivity is higher than it’s ever been in history. While there are many factors {the PC, for example}, I don’t think it is arguable that these big cos aren’t looking for ways to achieve that intentionally. It then manifests itself in two ways: exorbitant top officer salaries and stock value. This latter is required to be more than the previous year {as opposed to same + dividends} or the company is thought to have failed.

IOW, 60 people doing the job that took 100 people 30 years ago means 40 people out of a job. Multinationals are job killers.

One more thing about smoking– a lot of people enjoy it. No one has fun dying of a medical experiment.

Are thought experiments worth the damage they cause? (Flippant question, not a flippant article.)

Criminal medical experimentation which led to very useful results. Skip down to frostbite.

I believe it’s a fat man because a thin person isn’t heavy enough to stop the trolley. There may also be an assumption that the fat man can’t defend himself, though I don’t think most people could move someone who weighs 400 pounds and is resisting. Perhaps this only works by releasing the brake on his hypothetical wheelchair. In any case, it should be worth killing two or three average weight people to save five people (weight not specified).

Possible heart risks of long distance running. More about risks.

What about glyptodos and glyptotrys? …Ok, I guess there is no glyptotry.

Well, I’ve been running for decades, and believe me, I’ve never been addicted. In fact, I hate every step.

It does seem to increase lifespan, though. Why, you gain an extra hour of life for every three hours you devote to running!

I know, I know. But again, the fact that Hitler was a vegetarian is not an argument against vegetarianism. Everyone who says “In the real world, that’s just the kind of thing evildoers would say!” is engaging in a really obvious fallacy.

Also a really popular one, apparently.

You know what? It kind of is. For all the wailing and gnashing of teeth, for all the financial “compensation”, BP had a banner year that year. If society didn’t think it was okay to dump oil on seabirds, you’d think we would’ve put them out of business. Or at least made a significant dent in their profit margin.

Okay, I’m seeing this a lot; some people seem to think that I wrote this post to diss Spock, or to misrepresent elements of the Trek canon. Clever title notwithstanding, the Vulcan element here is intended merely to act as a springboard to explore inconsistencies in our own moral standards, not as an end in itself. (I’m not actually accusing you of doing this, btw, but your invocation of Vulcan history gave me an opening.)

I’m pretty sure the industry knew what was going on. One of the reasons the class-action suits succeeded was all the confidential memos among high-level tobacco executives, talking about how to suppress the fact that they were killing a lot of people.

Fair point. But I do believe we learn the most about a system by examining its behavior near its tolerance limits. If you want to know what someone’s made of, you’ll learn a lot more by sticking him in a lifeboat with two other people and one can of beans than you would if you just followed him around to the office and supermarket all day. These “absurd” thought experiments take us to those limits, at least conceptually, and so I think they’re valuable. Of course, it would be a lot more valuable (in terms of valid results) to actually put people in lifeboats and force them to fight over a can of beans, perhaps, but even I don’t want to go there. Yet.

Elf, you’ve answered your own question: It’s a “fat person” to prevent the noble self-sacrifice of the guy who knows about the problem, because that guy doesn’t have enough mass to stop the trolley via self-sacrifice. The scenario was carefully constructed to take the self-sacrifice option off the table— to simplify the game board enough to put the respondent in the most difficult moral position.

Really, I feel like I’ve made this point about seventy three times by now.

Whoa. This whole post, and all my replies and counterarguments thereafter, would have been worthwhile just to see someone bring this up. Good job, dude.

To a point. You learn about how those people tick under those circumstances. A well-fed, well-rested man’s decision-making process isn’t less valuable or telling than his actions in an extreme situation. I know this from my military training – I have some good friends who were terrible to serve with, but with whom I get along splendidly under civilian circumstances. Does that make our civilian friendship hollow or something? I don’t think so. It’s just that circumstances matter.

Not the kind of thing I’d normally mention but you gave me an opening. 😉

Guessing Dr. L was 69 or so. Think it was circa ’98 and was 49 in ’78, so…

Runner to Give Talk on surviving after 45.

There was another, a biology prof around the same time, part of the same running group, but didn’t know him as well. Likely same age or older.

Hear ya on the idea behind the post. Tough for me, as others have said, to leave out all extraneous issues {cloning organs from stem cells, anyone?}. Otherwise, the solution would be to euthanize just one patient, chosen by some criteria, assuming we’re throwing out the oath.

We still don’t believe in the no-win scenario 🙂

Aargh! Been thinking entirely too much about this.

OK, evolutionary hypothesis for the trolley paradox from somebody who has never formally studied any of this and is speculating wildly.

First, I want to start with a lifeboat problem in our distant past.

Tribe A has 100 members. But the weather has been bad, winter is setting in, and there’s only enough food for half the tribe. (If they try to share reduced rations, everyone gets too weak and dies.) So, they kill 50, starting with the senile elders, cripples, storytellers, etc.

Neighbouring Tribe B also has 100 members and the same problem. But they have a different solution: kill tribe A and take their stuff.

Ethically, both kill the same number of people. But for spreading genes, Tribe B comes out ahead.

OK, now fast forward to the present day trolley paradox. Is it possible that people are reluctant to throw the fat man in front of the trolley because, for almost our entire evolutionary history, the people spatially closer to you are also usually genetically closer?

(Westerners often joke about spending more time with co-workers than our families, but that is very much an exceptional situation.)

Has the trolley paradox been studied with variations on which people are family members or not?

The dialogue contains a clue: intentions.

The corporation provides people with a pleasuring device that comes with consequences and warnings about those consequences. The people themselves make their own decisions (or so: advertising, social pressure, addiction, &c). They do not intend to kill people in the name of anything. Not even profit: they intend to provide a device for people’s pleasure, at a profit.

The medical researcher intends to kill people in the name of something. This is no different than, say, linking together four rats to use the spare processing power to predict the weather—no different in the sense that what’s being studied are the mechanisms and operations of flesh and how to engineer them for specific instrumental goals. We already routinely allow the killing of many different experimental animals (I used to run an alt account called C57Black6: despite all his rage, he was still just a mouse in a wheel).

Maybe what bothers people about the medical researcher is twofold: 1) reduction of humans to their flesh mechanisms (people tell me most folks don’t like the use of a perfectly useful neuter pronoun in gender-ambiguous or genderqueer situations, because it’s dehumanizing, and we cannot dehuman humans); 2) the intention is specifically to kill someone human.

We know a lot of people are not comfortable with deanimalizing animals if it’s something seemingly frivolous—the usual complaint about “testing cosmetics” on mammals. They are getting to be uncomfortable with deanimalizing animals when it comes to “industrial farming.” But I like the point raised earlier by Adam Etzion. Maybe it’s not an east-west thing, since killing animals and immediately serving them up is something rural folk do when hunting, farming, living. I take care of two goats. I cannot imagine killing and eating those two goats. I know some farmers. The people who raise livestock for slaughter come to know and understand the subtle personalities of the living beings they will consume. They don’t just know how the meat gets to the plate, but how it ate the things that helped it become tastier meat, too. They saw what it ate. Heard how it called. Or maybe not. Some farmers have lots of cattle or coops. Hard to know them all.

Perhaps if we did allow more people to slaughter their own animals for food, the calculus involved here becomes different. Looking into the eyes of a living being who responds to pain inwardly and outwardly as you kill it is not a common experience for people. But over time, people learn not just the moral necessities involved with killing animals, but how to do it well. How to make the choice as difficult as you want it to be, which might mean not that difficult at all.

Giving death is hard, and we create lots of situations to explore why. But taking a life for a reason fades away into routine the more people become accustomed to giving death. Flesh has a number of uses, and we’re finding more uses for it all the time, like organic computing. The more need we will have for the flesh of other living things, things with deep subjectivity and inward expression and personality, the more we will grow comfortable giving death to reap the benefits of the flesh.

In this sense, Phillip Morris does not intend to kill, but to turn the flesh of people against themselves, in order to keep them purchasing pleasure devices. We’re largely comfortable with that, especially now that we have fashionable and modular e-cigs while the other parts of the world are using up the cigarettes. There was some legislation and some lawsuits, but the argument is right: there was never as much of a moral outcry to stop PM as there is to stop the intentional and goal-oriented killing of humans. We’re comfortable enough with selling death, and maybe in time with giving it away for free, for the right cause.

Since we’re all giving different scenarios, how about this one?

Suppose analysis of social patterns reaches a point where we can predict with high confidence when someone becomes suicidal. We engineer through the usual behavioral promptings a situation where these people are at the right places where they can give up their lives for a Noble Cause, such as stopping an assassin’s bullet at the right time or saving five people through organ retrieval or forcing a train to stop or whathaveyou—but without them knowing they’ve been placed at the scene. Call it: the Clarence Leap. Is this unethical, particularly if in their last act they do manage to save a few people, a few cars of cargo, a few rogue nations, a few multinational corporations, or a few kittens?

I know the right response is supposed to be that we’re supposed to use the predictive power of social forecasting to help people. But, what a way to go out, and to become the hero of many while doing so. Isn’t that what a lot of people want?

And, on a point of Mr. Non-Entity’s re: Solomon:

Isn’t it interesting that the reasoning leading us to think Solomon was wise—the one who saves the kid loves the kid more—is not what leads us to think the Father is love—he loves everyone else so much he kills his Kid, his only begotten Kid?

I think the problem that many people have is that victims are being actively selected as opposed to just generally being affected, and further that they don’t trust how the experimental ‘victims’ or organ donors or whatever would be chosen. No one is going to trust ‘logic’ because they know they wouldn’t be logical about it and would exclude anyone they liked, and they also know that ‘logic’ can be easily biased by the starting principles. The same goes for algorithms or similar machine-driven methods. Someone, somewhere programmed in the initial values and chose the data set it’s being given. Pretty much everyone has personal experience with how easily that can be twisted, especially among historically disadvantaged groups. A Republican-programmed machine is obviously going to spit out “woman=baby factory” and logically declare that ethnic minorities make better test subjects because on average they make less money and are therefore less valuable to society. Other people will have different biases but they will be there. It’s something borne out by history and by current events.

This sort of thing gets emotionally ingrained early. Everyone’s got a story about a boss maliciously screwing with evaluation criteria or a gym teacher singling them out or something like that. Only a very select group is going to be viewing it from a supposedly clinical perspective – rich old white guys who are never, ever going to be experimented on and who are the most likely to benefit from any expensive new treatment or organ donation. People might be more willing to be ethically flexible if it weren’t for a deep-set suspicion about motive and fairness that throws the entire premise into doubt.

(I also think it’s instructive that people keep wanting to construct more elaborate backstories for the train dilemma. The entire scenario is so simple and seemingly straightforward that we smell something fishy about it, thanks to a lifetime of our innate sense of fairness being battered about by experience with jerks.)

Reading that I’m just getting pissed off with what small minded thugs can get away with without any planning nor real effort as long as they have a uniform to hide in. And it is almost the no effort part that irks me the most.

Haha, if that is true it is almost too good…

You are welcome. In the harsh light of day it seems I botched my I, Robot reference, or at least referenced the plot from the movie and not the book so hopefully the Impossibility Theorem reference makes up for that.

… instructive that people keep wanting to construct more elaborate backstories for the train dilemma. The entire scenario is so simple and seemingly straightforward that we smell something fishy about it…

I caught the same scent. There’s a braiding of two (at least) distinguishable discourses from the OP onward: one about the merits of more or less consistent, more or less quantitative utilitarianism…

And one about the meta-merits of lifeboat/trolley ethics: do high-contrast, high-relief test cases reveal more than they obscure?

On the latter, I lean more to “no” than our gracious host does. In natural science, we all get a chuckle from “Assume a homogeneous spherical frictionless elephant…” But on the way from that to the engineering and operation of any real pachyderm processing facility, at least there are broadly shared understandings about error bars and tolerances, how much precision and certainty is given up, how much “messier” it all gets, at each stage.

I rarely see a counterpart to that when people argue from lifeboat/trolley ethics examples to “…and therefore in the political/social world we should agree on behavior X”… or “policy Y,” or “institutional incentives Z1 through Z7.” I much more often see a single leap and flourish of “Q.E.D.”

(All of which may simply be a windy restatement of your “battered by experience with jerks.”)

5 people need organs, one man has the organs that can save them… but our entire society needs to be able to trust that they aren’t going to be randomly condemned to death for the benefit of others. We sacrifice those five lives for the greater good, that greater good being our faith that we are safe from being randomly cast in front of a trolley.

I thought I remembered something relevant to the “doctors asked about trolley dilemmas” question appearing on BoingBoing some months ago…. and I couldn’t find it. But while searching, I did find this:

‘Utilitarian’ judgments in sacrificial moral dilemmas do not reflect impartial concern for the greater good: http://www.sciencedirect.com/science/article/pii/S0010027714002054

All greater goods are created equally, but some are more greater than others.

Two things:

First, a reaction to the post – I note that the real human population has a range of personality types, some (a few) being always pro-social, most being sometimes pro-social & sometimes not, some being consistently anti-social.

One thing I notice is that your scenarios involved *lying* to the medical volunteers about the risks and *pushing* the person onto the tracks. I understand that the use of deception & force keeps the moral decision in the hands of the person being posed the conundrum, but it really poses TWO ethical problems: should you save the larger population by allowing the sacrifices of the few, AND, are you justified in ENFORCING that sacrifice on someone else’s part?

ASK the fat person if they’ll jump. Some will say yes, some no. ASK for volunteers for the medical trial with the understanding that it may be fatal. Some folks will take the risk, others won’t.

The actual ethical answer may not be so much a first-order answer, involving exactly who you get to sacrifice, as a second-order answer, involving respect for the actual spread of behaviors we have evolved over time…

Second, some recent bits from Science Daily which strike me as things Peter Watts might like to play with:

1) Basic computing elements created in human gut bacteria

http://www.sciencedaily.com/releases/2015/07/150709132444.htm

2) Consciousness has less control than believed

http://www.sciencedaily.com/releases/2015/06/150623141911.htm

and

3) Carbon nano particles you can make at home, which can elude the human immune system and deliver drugs

http://www.sciencedaily.com/releases/2015/06/150618122233.htm

Peter D,

None of this would matter to utilitarian philosophy though. The entire point is that only consequences have ethical significance; intent, character, and everything else are, in and of themselves, irrelevant. So as long as the person acted in a way which maximised utility, no secondary fact would be of concern.

EthicsGradient: I think the point is that the exact same people who CLAIM to be maximizing utility are the same sort of people who would throw maximum utility out the window if they could get a benefit, and therefore can’t be trusted to make the judgement about what maximum utility actually is: maybe the doctor who says he’s saving five lives by taking the organ from one patient is actually more interested in earning five times the fee, and the surgeries and death of the one weren’t really all that necessary, the patients could have survived long enough to get legitimate transplants. Maybe the guy pushing the man onto the trolley tracks just wants to kill his business partner and there were other easier options to stop the trolley. Maybe the real cold equation is the bonus the pilot makes for not over-spending on fuel, but there actually is enough to carry an unforeseen stowaway down (or maybe he doesn’t feel like paying the fee for damaging company property if he cuts out a heavy chair and tosses it instead of the girl).

Sure, there will be times when their selfishness actually is in line with the greater good, but, on the whole for human society the maximum utility might (not necessarily will, but might) be achieved by shunning anybody who proposes these particularly cold branches of utilitarianism, because it lets us notice and be more wary of the sociopaths that would exploit that desire to help the most people.

Peter Watts,