What Dreams May Come.

Interrogating the Dream

Anyone who caught my talk at Ratio back in 2017 might vaguely recall a curious claim: that the Human corpus callosum—that bundle of neurons connecting our cerebral hemispheres—has a bandwidth in the same ballpark as that of a modern cell phone, once you take noise correction and synaptic redundancy into account. I didn’t get that factoid from the peer-reviewed literature. I got it from a hotshot neuroscientist name of Erik Hoel (who also, as it turns out, is a pretty good author in his own right). He scribbled out those calculations on the back of an envelope at my request, and I ran with them.

Now, Erik has scribbled out a few more calculations, and come up with a whole new theory of dreams. The paper is “The Overfitted Brain: Dreams evolved to assist generalization”, it’s posted over on arXiv, and it’s not only fascinating: it’s accessible. This is not something you encounter a whole lot in the technical lit.

Over the years I’ve read the occasional article about dreaming. Back in the eighties I read that dreams served to consolidate long-term memories, that dreaming rats fired up the same neural pathways that were active while they learned to run mazes during their waking hours. Somewhere around the turn of the century I encountered the idea that dreams were just an epiphenomenon of random sparks in the visual cortex: weak low-level noise, normally swamped by real visual input during wakefulness, but the only game in town when the optic nerves stopped sending signal. The brain, first and foremost a pattern-matcher, reflexively interprets that noise as though it were signal, reads meaning into meaningless, internally-generated Rorschach blots. If something happens to be preying on your (waking) mind, the brain might be more likely to shoehorn those blots into something conceptually related to that subject. (I liked that interpretation: it explained why the solution to my M.Sc. thesis came to me in a dream.)

Erik calls bullshit on these and other explanations for the dream state—or at least, he argues that none of them tell the whole story. Memory Consolidation theory doesn’t really hold up in light of the fact that even when people report dreaming about things they’ve experienced, those dreams are anything but faithful re-enactments; a hallmark of dreams is that they tend to be batshit insane almost by definition, although the dreamer doesn’t generally realize that when they’re in one. (This also weighs against the theory that dreams model different scenarios to prepare the brain for novel challenges. How often am I going to encounter the Titanic in outer space while riding a small red wagon with a couple of 2x4s strapped to the gunwales?)

I think Erik may stray a bit into foamy-adaptationist mode in the course of said arguments. Even a dream life containing 95% hallucinatory insanity can be adaptive if the remaining 5% generates usable insight. (I’m far from the only person to be stymied by a scientific problem while awake, only to have my dreams step in and serve up a solution; it’s actually a pretty common feature of the grad school experience, not to mention such famous examples as Kekulé’s benzene daydream.) And a lot of theories dismiss the specific content of dreams as epiphenomenal anyway, side effects of some deeper process with no functional significance.

That last point highlights the most significant single way that the Overfitted Brain Hypothesis stands apart. It posits that the narrative absurdity of dreams is not a side effect: that it is, in fact, the whole point of the exercise. Dreams, according to the OBH, are injections of noise that keep the brain from overfitting experiential datasets.

You’ve probably heard about overfitting. It’s the bane of deep-learning programs everywhere. You train a neural net on a given data set—reward it for identifying MAGAnites in a crowded theater, for example (you remember theaters, right? You remember crowds?). The network learns to perform perfectly on that training set, so you try it out on new data—only to see its performance drop back down to random chance. Maybe there was some quirk peculiar to the training data (perhaps all the sampled MAGAnites had just come from a rally for Breast Cancer Awareness, and the system concluded that it could identify Trump supporters by looking for pink ribbons). Whatever the particulars, the system has focused on idiosyncratic details within the training sample at the expense of broader patterns that extend beyond it. It has memorized the data instead of generalizing from it.
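To make the pink-ribbon failure concrete, here is a toy sketch in Python. Everything in it is a hypothetical assumption for illustration: two made-up yes/no features, `ribbon` (a spurious cue that matches the label perfectly in the training data but is a coin flip afterwards) and `cap` (a genuine but noisy cue that matches the label about 75% of the time everywhere), plus a "learner" that simply keeps whichever single feature scores best on the training set.

```python
import random

FEATURES = ("ribbon", "cap")

def make_data(n, ribbon_matches_label, rng):
    """Generate (features, label) pairs for a hypothetical toy problem."""
    data = []
    for _ in range(n):
        label = rng.random() < 0.5
        # Genuine but imperfect cue: agrees with the label 75% of the time.
        cap = label if rng.random() < 0.75 else not label
        # Spurious cue: copies the label in training, coin flip otherwise.
        ribbon = label if ribbon_matches_label else rng.random() < 0.5
        data.append(({"ribbon": ribbon, "cap": cap}, label))
    return data

def accuracy(feature, data):
    """Fraction of examples where predicting 'label == feature' is right."""
    return sum(x[feature] == y for x, y in data) / len(data)

def fit_single_feature(train):
    """The whole 'model': pick the feature that best fits the training set."""
    return max(FEATURES, key=lambda f: accuracy(f, train))

rng = random.Random(0)
train = make_data(2000, ribbon_matches_label=True, rng=rng)
test = make_data(2000, ribbon_matches_label=False, rng=rng)

chosen = fit_single_feature(train)
print(chosen, accuracy(chosen, train), accuracy(chosen, test))
print("cap", accuracy("cap", train), accuracy("cap", test))
```

The learner scores perfectly on its training data by keying on the ribbon, then drops to roughly coin-flip accuracy on fresh data, while the humbler `cap` rule would have held up at around 75%: memorization of a quirk instead of generalization.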

The people who train these nets use a couple of tricks to avoid overfitting: injecting little bursts of noise into the signal, for example. Another technique is the “drop-out”: randomly removing data from the training set to make the whole thing sparser, and weaken local correlations. Simplifying the network is another approach since, as Erik writes, “reductions in model complexity in machine learning are generally associated with a reduction in overfitting”.
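Here is a minimal sketch of why noise injection acts as a brake on overfitting. It rests on deliberately simple assumptions: a one-weight linear model y = w·x with no intercept, fitted by least squares, with Gaussian jitter added to the inputs. Training on many jittered copies of the data shrinks the fitted weight toward zero. The effect is roughly the same as adding an explicit penalty on the weight's size (ridge regression) with strength equal to the number of data points times the noise variance. The function names are illustrative and do not come from Hoel's paper.

```python
import random

def ols_slope(xs, ys):
    """Least-squares weight for the model y = w * x (no intercept)."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)

def ridge_slope(xs, ys, lam):
    """Same model with an explicit penalty lam * w**2 added to the loss."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

def noisy_slope(xs, ys, sigma, copies, rng):
    """Fit on `copies` passes over the data, jittering each input by N(0, sigma^2)."""
    jittered_x, repeated_y = [], []
    for _ in range(copies):
        for x, y in zip(xs, ys):
            jittered_x.append(x + rng.gauss(0.0, sigma))
            repeated_y.append(y)
    return ols_slope(jittered_x, repeated_y)

# Toy setup (assumption): ten noiseless points on the line y = 2x.
xs = [float(i) for i in range(1, 11)]
ys = [2.0 * x for x in xs]
sigma = 2.0

w_plain = ols_slope(xs, ys)                                   # exactly 2.0
w_noisy = noisy_slope(xs, ys, sigma, copies=2000, rng=random.Random(1))
w_ridge = ridge_slope(xs, ys, lam=len(xs) * sigma ** 2)       # 770 / 425
print(w_plain, w_noisy, w_ridge)
```

Shrinking weights is one standard sense of a "simpler" model, which is why noise injection and the reductions in model complexity mentioned above tend to be grouped together.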

(The paper presents all this stuff in the context of machine learning, but I can’t help seeing the fingerprints of my own biological background all over these concepts. Drop-outs seem very much like the old statistical techniques of “jackknifing” and “bootstrapping”, in which you run repeated analyses on slightly different subsets of the same data pool—basically a way to fake multiple datasets with the same distributional parameters. And the virtues of reducing model complexity were hammered home to me on Day One by my old doctoral mentor Carl Walters, who never let us forget that any model as complex as Nature is going to be as difficult to understand as Nature. In a lot of cases, hi-def realism is the last thing you want in your models; they have to be caricatures if they’re going to generate useful insights.)
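For readers who haven't met it, here is a minimal Python sketch of the bootstrap idea described above. You resample the one dataset you have, with replacement, many times over. The spread of a statistic across those fake datasets then serves as an estimate of its sampling uncertainty. The simulated data and the choice of the mean as the statistic are arbitrary assumptions for illustration.

```python
import random
import statistics

def bootstrap_se(data, stat, n_resamples, rng):
    """Bootstrap standard error: spread of `stat` over resampled copies of `data`."""
    n = len(data)
    # Each replicate is a same-size sample drawn *with replacement* from data.
    replicates = [stat(rng.choices(data, k=n)) for _ in range(n_resamples)]
    return statistics.stdev(replicates)

rng = random.Random(42)
data = [rng.gauss(10.0, 3.0) for _ in range(50)]   # stand-in for a field sample

se_boot = bootstrap_se(data, statistics.mean, n_resamples=2000, rng=rng)
se_formula = statistics.stdev(data) / len(data) ** 0.5   # textbook s / sqrt(n)
print(se_boot, se_formula)
```

For the mean, the bootstrap and the textbook formula land close together. The bootstrap earns its keep on statistics that have no tidy formula, such as medians or fitted model parameters.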

(But I digress.)

Anyway, the OBH proposes that dreams serve the same function in meat brains that drop-outs and noise injections serve in mineral ones. They keep the brain from overfitting mundane reality by injecting a little hallucinatory static.

Sounds plausible. Also sounds a bit handwavey—I mean, how do you test something like that?—but Erik backs up his hypothesis with some interesting points:

- We tend to dream a lot more about boring, repetitive activities—stocking shelves, playing Tetris all day—than we do about interesting and diverse ones. In other words, we are most likely to dream about low-variance behaviors that are especially prone to overfitting.

- Those dreams do not recount the specific details of said activity (as you’d expect if the goal was memory consolidation). They take place in the same conceptual space, but they are—like most dreams—fundamentally absurd.

- Sleep deprivation does not compromise all types of cognitive performance equally. Rote memorization tasks are relatively unaffected. Generalized performance, on the other hand—i.e., the kinds of activities that would benefit the most from countermeasures against overfitting—really takes a hit.

- This is apparently true even among babies (and man, would I ever love to have sat in on that experiment. What I wouldn’t give to see a statistically-significant sample of infants strapped upright into customized baby car-seats, being bombarded with Metallica 24/7 to keep them from dozing off…)

So there does appear to be significant, albeit circumstantial, evidence that dreams with absurd (but subject-relevant) content serve an adaptive function. As Erik points out, though, there would be certain survival implications should a brain experience drop-outs or noise injections while awake and active; tripping balls in a predator-filled environment might reduce one’s inclusive fitness, for one thing. If you’re going to massage the experiential dataset in real time, it’s best to choose a time when the individual is inactive and (relatively) safe. It stands to reason that evolution would repurpose the sleep state (which probably already existed for other reasons) to this end.

This is the place where I usually take the findings of staid peer-reviewed research and run with them, extrapolate into exciting sfnal territory as only I can do. This paper really served those up, too; I just knew I’d be injecting elements into Omniscience[1]. Maybe the Bicamerals use sleep-like noise-injection substitutes to allow them to stay awake and functional for longer periods. And hey, sleep aside, wouldn’t reduced overfitting be beneficial to any kind of cognitive analysis? Of course, that could be weaponized; injecting too much noise into the system reduces its ability to function at all. Maybe a microbe targeted at Moksha Minds…

But I never really got the chance to let myself off the leash, because Hoel beat me to it. The back end of his paper explicitly talks about inducing artificial “sleep-like states” to serve the purpose of dreams in waking brains working long shifts. He even applies these cool cutting-edge speculations to one of the most mundane of Human activities: vegging out in front of the TV…

“It is worth taking the idea of dream substitution seriously enough to consider whether fictions like novels or films act as artificial dreams, accomplishing at least some of the same function.”

It’s an interesting thought. Given how utterly derivative and repetitive most Hollywood movies are, though, I’d have to wonder if they’re more similar to playing Tetris all day. They might not serve as fake dreams so much as provoke a greater need for the real thing.

Interrogating the Dreamer

If the OBH is right, we’d better hope these guys don’t get their way.

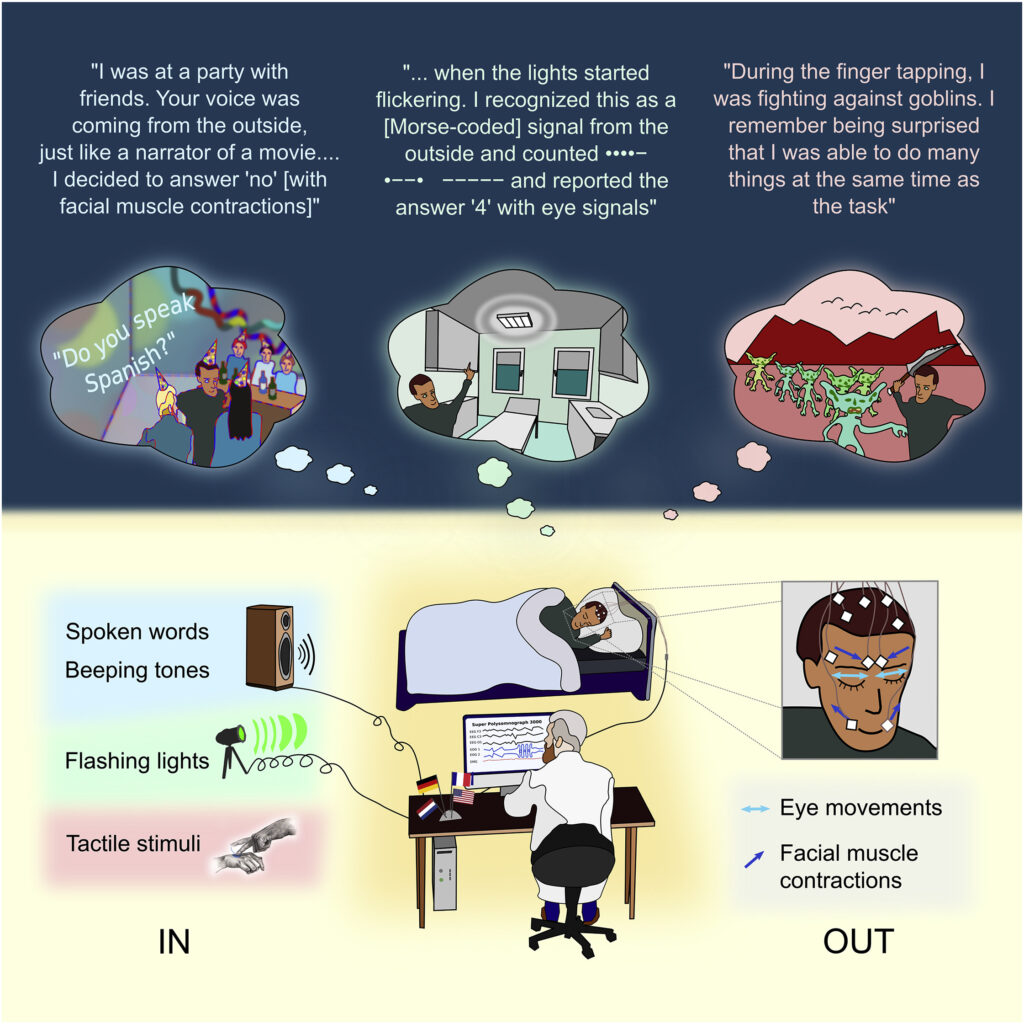

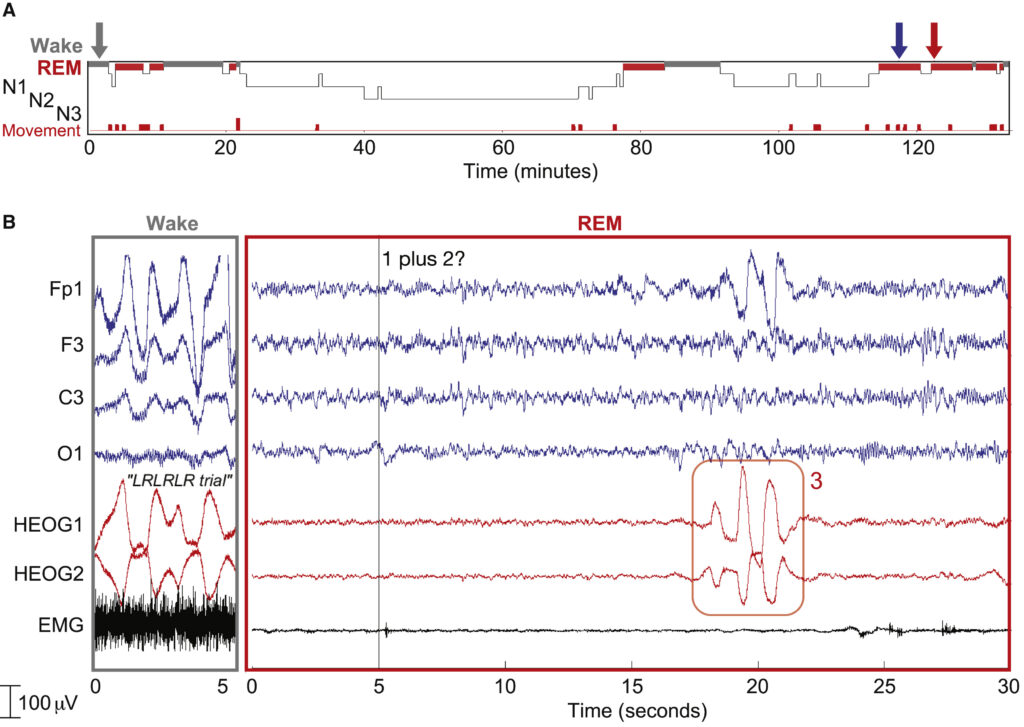

Konkoly et al have figured out how to communicate with lucid dreamers while they are dreaming. They pose simple math problems, present word discrimination tests. They ask yes/no questions. Sometimes they use spoken words; other times they interrogate their subjects via taps on the hand, coded beeps, or pulses of light (bright enough to perceive through closed eyelids, obviously). The dreamers respond using prearranged eye movements or contractions of the facial muscles: Four. Yes. Down.

Believe it or not, it works.

Upon waking, the dreamers debrief. Unimaginative subjects simply heard the researchers’ voice superimposed over their dream like the Voice of God; others incorporated the stimulus into the dream itself, heard the question coming from a car radio or spoken by an NPC. At least one dreamer heard the words “four minus zero” and answered accordingly, even though the researchers had not actually spoken any words; they’d conveyed the information via light pulses. And if Fig 1 is to be believed, one subject answered a question conveyed through hand-taps while fighting off a horde of goblins.

The information makes it through, apparently—but the receiving brain makes its own choice of how it’s perceived. (Which reminds me of something I call “tonguesight”, but this post is already too long.)

It’s only a first step, of course. And the methodology is arcane almost unto Rube-Goldbergianism: Morse code and prearranged eye-twitches, double-blinded referees voting on ambiguous signals, corroborative EEGs to make sure your subjects aren’t just yanking your chain by pretending to be asleep. Still: as Konkoly et al point out, being able to communicate in real time with sleeping brains does more than simply open up vast areas of potential research in consciousness and sleep studies. We can use these techniques, they suggest: edit dream content to treat PTSD, or teach new skills during sleep:

…dreams could be curated in accordance with an individual’s objectives, such as to practice a musical or athletic skill…. interactive dreaming could also be used to solve problems and promote creativity—the next moonshot ideas could be produced with an interactive method that can combine the creative advantages of dreaming with the logical advantages of wake. Artists and writers might also gain inspiration from sleep communication.

All of which would seem to involve tamping down the random, batshit elements of the dream state and replacing them with something more, more instructional. Replacing noise with signal. Potentially overwriting the very purpose of dreams, if Hoel’s right.

Granted, we are talking about lucid dreaming here; a relatively infrequent phenomenon in which you’re explicitly aware of the absurdity of your experience. Maybe that makes a difference. Then again, maybe it doesn’t: the lucid dreams I’ve (rarely) had are no less hallucinogenic than the usual kind. (I was once trapped with a bunch of people in a renegade glass elevator that was accelerating uncontrollably upward. As it smashed through the roof of the building and arced into the night I force-conjured a little control panel at waist height, which I used to deploy a pair of stubby wings and some kind of propulsion system from the base of the car. I brought us down gently on the water and took everyone below the surface—apparently the elevator was also a submarine—for a glorious moonlit tour of a coral reef that happened to be just offshore.)

(What do you want from me? I was, like, thirteen.)

Even Konkoly et al admit that

…lucidity can be tenuous, in that individuals can transition from lucid dreaming one moment to believing that the experience is a waking experience the next, and maybe back again…. Addressing this issue is an exciting challenge for future research.

So: two papers, one theoretical, one applied. One on what dreams are for, the other on how they can be repurposed. If the first one’s right, the second points us down a dangerous road—one which might ultimately short-circuit an essential cognitive adaptation older than our species. You could strap on your Dreamweaver™ headset with every intention of learning Mandarin or breaking some cognitive logjam—and after a few nights, find yourself not much good at anything but playing Tetris.

That’s by no means certain, of course. It’s more of a worst-case scenario. Which is what you come here for anyway, amiright?

Still. I don’t think I’ll be lining up to be an early adopter.

[1] Don’t ask. Isn’t it enough that I gave you an excerpt a while back?

And you deserve them. Fortunately Nestor already covered it downstream…

Yeah, but information is still being transmitted, right? No matter how noisy, fuzzy, or analog, signals are still being conveyed, and the carrier pipe still has an upper capacity limit.
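One textbook way to put a number on that upper limit is the Shannon-Hartley theorem, C = B log2(1 + S/N). It gives the maximum error-free bit rate of a channel with bandwidth B hertz, carrying a signal whose signal-to-noise power ratio is S/N. Whether a nerve tract behaves like that idealized channel (band-limited, with additive Gaussian noise) is an assumption. The figures below are arbitrary illustrations, not measurements of any corpus callosum.

```python
import math

def shannon_capacity(bandwidth_hz, snr_linear):
    """Shannon-Hartley capacity in bits per second.

    Assumes a band-limited channel with additive white Gaussian noise;
    snr_linear is the signal-to-noise power ratio (not in decibels).
    """
    if bandwidth_hz < 0 or snr_linear < 0:
        raise ValueError("bandwidth and SNR must be non-negative")
    return bandwidth_hz * math.log2(1.0 + snr_linear)

def db_to_linear(snr_db):
    """Convert an SNR in decibels to a power ratio."""
    return 10 ** (snr_db / 10)

# Illustrative numbers only: a noisier channel carries fewer bits per hertz.
print(shannon_capacity(1_000, 1.0))                # SNR of 1 -> 1 bit per hertz
print(shannon_capacity(1_000, db_to_linear(30)))   # 30 dB -> just under 10 bits per hertz
```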

How you’d figure that out for a corpus callosum I’d have no idea. I just point you to the guy who actually knows what he’s talking about.

Do we know that, though? I’ve always liked the idea of gutbrain fuming down in the abdomen, resentful because Head Brain gets all the cool sensory input and motor control, biding its time and plotting insurrection. I’ve even thought of coauthoring a children’s book on that theme…

I had this experience once, in junior high school: I’d finished very early on a biology final exam and was reading (as always, when I could manage); in this particular case, King’s The Talisman. I was deeply engaged in the narrative, but not more so, subjectively, than usual.

I sat there, head on hand, burning my way through the words. Gradually I became aware that people were saying my name from somewhere far away. Strange. Even stranger: I realized people were touching me on the shoulder and shaking me gently. I slowly dragged my consciousness out of the book-world and realized the alarm had sounded for the end of class. I was surrounded by my teacher and classmates, worried looks on their faces, as they had been trying to ‘wake’ me for almost two minutes.

Never happened before, never again. I think about it often.

I’m quite excited for this; we spend half our lives doing nothing. Imagine what we could achieve without that burden.

In a hypothetical better society of course.

You cannot mention “tonguesight” and just leave it at that! I demand details! Preferably a dedicated post about it.

Playing Tetris, worst-case scenario? Surely not.

Being linked up and trapped in a virtual reality as processors, unable to escape, is surely worse? 😉

Not directly related, but neither really off-topic, is Vernor Vinge’s notion of “Just In Time Training” in his last couple of novels, and the risks of lasting impairment it poses to trainees.

Emme,

IIRC there was a device that converted video signals into stimulation delivered by a pad worn over the tongue, with an array of… needle-like projections that gave a sort of equivalent of a visual signal through the tongue. I’ve read about it but never seen any visual evidence of it; it sounds cool, though. It doesn’t seem to have gone beyond a curiosity, since we don’t see blind people walking around with Geordi La Forge implants in their mouths, and it’s been over a decade.

My dreams tend to the depressingly mundane most of the time, usually stress-related travel dreams when I’m due to voyage somewhere. At least the dreams of being unprepared for school and toilets overflowing seem to be dying down. I did finally succeed in controlling a lucid dream for a while earlier this month, but my expectations of having hi-def VR sessions nightly have been sorely disappointed.

One thing against lucid dreaming is that often when I go to bed what I want is oblivion, not more goddamned consciousness.

>the Human corpus callosum—that bundle of neurons connecting our cerebral hemispheres—has a bandwidth in the same ballpark as that of a modern cell phone

You often talk about this, brain bandwidth, but the brain is an analog computer; it doesn’t do discrete messages that can be decoded, and each area is specialized in its own thing. The cat’s worth of nerve tissue in your guts can’t do speech any more than Broca’s area can make you throw up – directly. Think about licking grody vomit off a leper’s wound, transmit that to the visual centers, convince them to send the appropriate signal to the gut and then you can get some vomit going. It’s all analog signals, which is why I’m not surprised at all you’d hear a voice from a series of light pulses or hand taps.

RE: footnote one….yes, the excerpt was *delicious*, but of course we want more! 🙂

G M,

It sounds like you had a dissociative episode. I don’t think they’re particularly related to sleep or dreams, it’s just a strange effect on attention and consciousness that makes you completely disconnected from the outside world if you’re focused hard enough on something.

The Tech Big-Wigs can take my dreams about fighting Kim Jong-un in a Gundam in a subsurface lunar colony away only over my cold, dead body!

Fascinating stuff, though. I’ve also immediately ordered the novel by Erik Hoel; may it tide me over till Omniscience arrives. I didn’t order it from the great Satan Bezos, though.

Senpainoticeme!,

Nancy Kress – Beggars in Spain. It didn’t work out well.

@laurence:

That may be, but my experience of the fiction itself felt like a vivid dream, and so I wouldn’t be all that surprised if there’s a connection between fiction/narrative and dream. A university prof of mine was fond of pointing out that the very experience of fiction – what we do when we read, what we feel – is very under-theorized.

Incorporating all of this into head-canon for the tape-learning in CJ Cherryh’s work. Fits well.

Hm, you already mentioned “tripping balls”, so I wonder how “hallucinogens” would fit into said mechanism. I’m thinking mainly of classical 5-HT2A psychedelics and intermittent use of cannabinoids[1], though dissociatives like NMDA antagonists (ketamine et al.) or kappa opioid agonists (hello, salvinorin) might work, too.

What about some moksha for the Bicamerals…

[1] IIRC tolerance for the “psychedelic” effects of THC develops fast with daily use.

Interesting TED talk about bioelectrical signalling in body plan development. Turns out the Thing was right and we should be shapeshifters. They managed to turn frog skin cells into little autonomous creatures that swim around with cilia made out of little intestinal hairs. Wild.

https://www.youtube.com/watch?v=XheAMrS8Q1c

A little correction if you don’t mind: Dropout does not mean removing data from the training set (in fact you pretty much always want to train on as much data as possible; you can actually see this directly in, e.g., the VC generalization bound). Dropout means randomly setting the outputs of certain neurons to zero during training, which temporarily removes those neurons and their connections from the network. This helps reduce overfitting. Another common method is adding the norm of the weights to the loss function, so that you end up with a smaller “mass” to distribute among your weights.
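Here is a minimal Python sketch of the two techniques described in the comment above. It uses no ML library, so the mechanics stay visible. It assumes the common "inverted dropout" convention. During training, each unit's output is zeroed with probability p, and the survivors are scaled by 1/(1 - p) so the expected activation stays the same. At test time nothing is dropped. The weight penalty shown is the squared (L2) norm of the weights times a strength `lam`; the name and the values are illustrative.

```python
import random

def dropout(activations, p, rng, training=True):
    """Inverted dropout: zero each unit with probability p, rescale survivors."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)          # inference: use every unit as-is
    scale = 1.0 / (1.0 - p)
    return [0.0 if rng.random() < p else a * scale for a in activations]

def l2_penalized_loss(data_loss, weights, lam):
    """Add lam * ||w||^2 to the loss so large weights cost extra."""
    return data_loss + lam * sum(w * w for w in weights)

# Hypothetical toy layer: 10,000 units that all output 1.0.
rng = random.Random(7)
layer = [1.0] * 10_000
dropped = dropout(layer, p=0.5, rng=rng)
frac_zeroed = sum(a == 0.0 for a in dropped) / len(dropped)   # roughly half
mean_after = sum(dropped) / len(dropped)                       # stays near 1.0
print(frac_zeroed, mean_after)
print(l2_penalized_loss(0.3, [0.5, -1.0, 2.0], lam=0.01))
```

Because a different random subset of units vanishes on every pass, no single unit can become indispensable, which is the sense in which dropout discourages memorizing quirks of the training set.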

Something very similar, called cross-validation, is often used: you split the dataset into K pieces, train the model on K-1 of them, and estimate the validation error on the remaining piece. You do this K times, holding out each piece once, and report back the average error.
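Here is the K-fold procedure described above as a minimal Python sketch. The "model" is deliberately trivial (it predicts the mean of the training targets), and squared error is assumed as the loss. Both are placeholders to swap for a real learner. In practice the data are usually shuffled before being cut into folds; the contiguous split here just keeps the example deterministic.

```python
def k_fold_indices(n, k):
    """Split indices 0..n-1 into k contiguous folds of near-equal size."""
    if not 1 < k <= n:
        raise ValueError("need 1 < k <= n")
    base, extra = divmod(n, k)
    folds, start = [], 0
    for i in range(k):
        size = base + (1 if i < extra else 0)
        folds.append(list(range(start, start + size)))
        start += size
    return folds

def cross_val_mse(xs, ys, k, fit, predict):
    """Average held-out mean squared error over k train/validate rounds."""
    fold_errors = []
    for held_out in k_fold_indices(len(xs), k):
        held = set(held_out)
        train = [i for i in range(len(xs)) if i not in held]
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        errs = [(predict(model, xs[i]) - ys[i]) ** 2 for i in held_out]
        fold_errors.append(sum(errs) / len(errs))
    return sum(fold_errors) / len(fold_errors)

# Placeholder model (assumption): ignore x, predict the mean training target.
def fit_mean(train_x, train_y):
    return sum(train_y) / len(train_y)

def predict_mean(model, x):
    return model

xs = list(range(10))
ys = [1.0, 2.0] * 5
print(k_fold_indices(10, 3))   # [[0, 1, 2, 3], [4, 5, 6], [7, 8, 9]]
print(cross_val_mse(xs, ys, k=5, fit=fit_mean, predict=predict_mean))   # 0.25
```

Every data point is used for validation exactly once and for training K-1 times, so nothing is thrown away. That is the difference from simply discarding data.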

Anyway, I quite like this hypothesis. Had a few vivid dreams about work last week and I like the idea that these dreams were my brain desperately trying to regularize the annoying tasks I was doing.

>>> Simplifying the network is another approach since, as Erik writes, “reductions in model complexity in machine learning are generally associated with a reduction in overfitting”.

I wonder if you’ve read an old, 8-page paper that contains my personal favorite solution to fix an overfit NN: “Optimal Brain Damage” (1990) by Yann LeCun.

Back in the day, they found a typical NN could have *half* of its synapses deleted and yet retain enough structure to relearn its task very well. The idea was to make the NN /just senile enough/ to start generalizing.

K S,

An obvious choice of synapses to delete would be the ones with small magnitudes so Y LeCun goes “screw that, I’m calculating the Hessian instead. Magnitude doesn’t equal salience, and maximizing salience is what we’re after”.

That gives you what’s basically a hypothesis test, one for each synapse, in order of salience. An order in which to try deleting them.

Stepwise hypothesis testing (with early stopping) is a generally godawful way to find an optimum. AT BEST it’ll give you the path of small moves to the local optimum that can best be reached by a path of small moves. But (like you say in Echopraxia) gotta take that first step downhill.

Yet, Y LeCun’s method seems to work better than it has any right to, and I’m still trying to figure out why.
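For the curious, here is a minimal Python sketch of the ranking step discussed above. In LeCun, Denker and Solla's Optimal Brain Damage, the saliency of weight k is approximated as s_k = h_kk * w_k^2 / 2, where h_kk is the diagonal entry of the Hessian of the loss for that weight. The approximation assumes the network was trained to a minimum, so first-order terms vanish, and it ignores off-diagonal Hessian terms. Computing h_kk needs a backpropagation-style pass that is left out here. The weights and Hessian diagonals below are made-up numbers, picked so that magnitude and saliency disagree. In the full procedure you delete a batch of low-saliency weights, retrain, and repeat.

```python
def obd_saliency(weights, hessian_diag):
    """OBD-style saliency estimate: 0.5 * h_kk * w_k**2 for each weight."""
    return [0.5 * h * w * w for w, h in zip(weights, hessian_diag)]

def prune_order_by_saliency(weights, hessian_diag):
    """Indices sorted from least to most salient (first candidates to delete)."""
    s = obd_saliency(weights, hessian_diag)
    return sorted(range(len(weights)), key=lambda i: s[i])

def prune_order_by_magnitude(weights):
    """The 'obvious' alternative: smallest |w| first."""
    return sorted(range(len(weights)), key=lambda i: abs(weights[i]))

def prune(weights, order, n_delete):
    """Zero out the first n_delete weights in the given order."""
    pruned = list(weights)
    for i in order[:n_delete]:
        pruned[i] = 0.0
    return pruned

# Hypothetical values: weight 0 is small but sits in a steep direction of the
# loss; weight 1 is large but the loss barely notices it.
weights = [0.1, 2.0, -0.5]
hessian_diag = [50.0, 0.01, 1.0]

print(prune_order_by_magnitude(weights))                 # [0, 2, 1]
print(prune_order_by_saliency(weights, hessian_diag))    # [1, 2, 0]
```

Magnitude pruning would delete the small but load-bearing weight first. The saliency ranking deletes the big weight that the loss barely cares about.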

Pretty interesting, but is it really novel?

I’ve had CONVERSATIONS with sleeping people…

Just recently I stumbled upon a related research paper: https://journals.sagepub.com/doi/abs/10.2466/pms.1989.68.2.567

My area of expertise is quite far from this, so I can’t say whether it is sound. At least it was peer-reviewed. But the idea is both strange and plausible.

In case you missed it, I would strongly recommend reading up on the Defensive Activation theory (https://www.biorxiv.org/content/10.1101/2020.07.24.219089v1). David Eagleman also has some ambitious goals regarding the expansion of human senses (and a jaw-dropping TED talk).

Trottelreiner,

It is a well-known fact among stoners that frequent weed consumption suppresses dreams, and when you stop you have weird dreams for a few nights.

Classical psychedelic alkaloids don’t have the same effect IME, either tripping or micro-dosing. I dream quite normally when I microdose regularly. One thing I noticed is that ever since my first time breaking through with DMT, when I first fall asleep my dreams are a sort of impressionistic fractal haze without any sort of narrative structure, not unlike the DMT zone. But, if I just take a nap, or wake up and fall back asleep, normal sorta-realistic dreams come easily. Don’t know what’s up with that.

G M,

That happens practically any time I read a book, especially fiction. It’s just intense focus.

Just off the top of my head, I’d guess they’d fuck things up. If Erik’s right, dreaming is an evolved system that’s been fine-tuned over evolutionary time. Hallucinogenics would rewire that system; the odds are, the result would not be an improvement.

Although now you’ve got me wondering about the effect of hallucinogenics on a sleeping brain. I don’t know if anyone’s ever looked at that before…

Ah, okay. I guess I misunderstood that. (Although at least back in my ecologist days, taking subsamples of a dataset was a legit model-building technique: you’re reducing the number of data points per sample, but you’re increasing the number of “samples” by pretending that each new subsample comes from a different data set (which just happens to have the same distributional parameters). It always struck me as cheating—looked too much like pseudoreplication for my liking—but better minds than mine swore by it.)
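The subsampling trick described above is a close relative of the jackknife. Here is a minimal Python sketch, assuming the statistic of interest is the mean. You recompute the statistic n times, leaving out one observation each time, and turn the spread of those leave-one-out estimates into a standard error. The factor of n - 1 in the formula makes up for how heavily the leave-one-out subsamples overlap, which is exactly the pseudoreplication worry. For the mean, the jackknife reproduces the textbook s/sqrt(n) exactly, which makes a handy sanity check. The data values are arbitrary.

```python
import math
import statistics

def jackknife_se(data, stat):
    """Jackknife standard error of `stat`, from leave-one-out subsamples."""
    n = len(data)
    if n < 2:
        raise ValueError("need at least two observations")
    # Recompute the statistic with observation i left out, for every i.
    loo = [stat(data[:i] + data[i + 1:]) for i in range(n)]
    loo_mean = sum(loo) / n
    # The (n - 1) factor inflates the small spread of the overlapping subsamples.
    return math.sqrt((n - 1) / n * sum((t - loo_mean) ** 2 for t in loo))

data = [4.1, 5.3, 3.8, 6.0, 5.5, 4.7, 5.1, 4.4]   # arbitrary example values
print(jackknife_se(data, statistics.mean))
print(statistics.stdev(data) / math.sqrt(len(data)))   # same value, up to rounding
```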

I had not heard of that paper. Sounds kinda similar, though, to the idea that small-world network effects might explain why some people can present as cognitively normal despite having, like, fifteen percent of a normal person’s brain tissue…

Me too. But I don’t think those people know they’re dreaming. They’re active (hell, there are cases of people committing murder, cruising for sex, and even creating works of art while asleep), but not “lucid” in the sense of the paper.

Cool!

Whoah! Battling Brain Bits! This is very cool (judging by the abstract, at least; will read further).
