Some People Just Want to Watch the Internet Burn.

A Blast from the Past:

Arpanet.

Internet.

The Net. Not such an arrogant label, back when one was all they had.

Cyberspace lasted a bit longer— but space implies great empty vistas, a luminous galaxy of icons and avatars, a hallucinogenic dreamworld in 48-bit color. No sense of the meatgrinder in cyberspace. No hint of pestilence or predation, creatures with split-second lifespans tearing endlessly at each others’ throats. Cyberspace was a wistful fantasy-word, like hobbit or biodiversity, by the time Achilles Desjardins came onto the scene.

Onion and metabase were more current. New layers were forever being laid atop the old, each free—for a while—from the congestion and static that saturated its predecessors. Orders of magnitude accrued with each generation: more speed, more storage, more power. Information raced down conduits of fiberop, of rotazane, of quantum stuff so sheer its very existence was in doubt. Every decade saw a new backbone grafted onto the beast; then every few years. Every few months. The endless ascent of power and economy proceeded apace, not as steep a climb as during the fabled days of Moore, but steep enough.

And coming up from behind, racing after the expanding frontier, ran the progeny of laws much older than Moore’s.

It’s the pattern that matters, you see. Not the choice of building materials. Life is information, shaped by natural selection. Carbon’s just fashion, nucleic acids mere optional accessories. Electrons can do all that stuff, if they’re coded the right way.

It’s all just Pattern.

And so viruses begat filters; filters begat polymorphic counteragents; polymorphic counteragents begat an arms race. Not to mention the worms and the ‘bots and the single-minded autonomous datahounds—so essential for legitimate commerce, so vital to the well-being of every institution, but so needy, so demanding of access to protected memory. And way over there in left field, the Artificial Life geeks were busy with their Core Wars and their Tierra models and their genetic algorithms. It was only a matter of time before everyone got tired of endlessly reprogramming their minions against each other. Why not just build in some genes, a random number generator or two for variation, and let natural selection do the work?

The problem with natural selection, of course, is that it changes things.

The problem with natural selection in networks is that things change fast.

By the time Achilles Desjardins became a ‘Lawbreaker, Onion was a name in decline. One look inside would tell you why. If you could watch the fornication and predation and speciation without going grand mal from the rate-of-change, you knew there was only one word that really fit: Maelstrom.

Of course, people still went there all the time. What else could they do? Civilization’s central nervous system had been living inside a Gordian knot for over a century. No one was going to pull the plug over a case of pinworms.

—Me, Maelstrom, 2001

*

Ah, Maelstrom. My second furry novel. Hard to believe I wrote it almost a quarter-century ago.

Maelstrom combined cool prognostications with my usual failure of imagination. I envisioned programs that were literally alive— according to the Dawkinsian definition of Life as “Information shaped by natural selection”—and I patted myself on the back for applying Darwinian principles to electronic environments. (It was a different time. The phrase “genetic algorithm” was still shiny-new and largely unknown outside academic circles).

I confess to being a bit surprised—even disappointed—that things haven’t turned out that way (not yet, anyway). I’ll grant that Maelstrom’s predictions hinge on code being let off the leash to evolve in its own direction, and that coders of malware won’t generally let that happen. You want your botnets and phishers to be reliably obedient; you’re not gonna steal many identities or get much credit card info from something that’s decided reproductive fitness is where it’s at. Still, as Michael Caine put it in The Dark Knight: some people just want to watch the world burn. You’d think that somewhere, someone would have brought their code to life precisely because it could indiscriminately fuck things up.

Some folks took Maelstrom’s premise and ran with it. In fact, Maelstrom seems to have been more influential amongst those involved in AI and computer science (about which I know next to nothing) than Starfish ever was among those who worked in marine biology (a field in which I have a PhD). But my origin story for Maelstrom’s wildlife was essentially supernatural. It was the hand of some godlike being that brought it to life. We were the ones who gave mutable genes to our creations; they only took off after we imbued them with that divine spark.

It never even occurred to me that code might learn to do that all on its own.

Apparently it never occurred to anyone. Simulation models back then were generating all sorts of interesting results (including the spontaneous emergence of parasitism, followed shortly thereafter by the emergence of sex), but none of that A-Life had to figure out how to breed; their capacity for self-replication was built in at the outset.

Now Blaise Agüera y Arcas and his buddies at Google have rubbed our faces in our own lack of vision. Starting with a programming language called (I kid you not) Brainfuck, they built a digital “primordial soup” of random bytes, ran it under various platforms, and, well…read the money shot for yourself, straight from the (non-peer-reviewed) arXiv preprint “Computational Life: How Well-formed, Self-replicating Programs Emerge from Simple Interaction”[1]:

“when random, non self-replicating programs are placed in an environment lacking any explicit fitness landscape, self-replicators tend to arise. … increasingly complex dynamics continue to emerge following the rise of self-replicators.”

Apparently, self-replicators don’t even need random mutation to evolve. The code’s own self-modification is enough to do the trick. Furthermore, while

“…there is no explicit fitness function that drives complexification or self-replicators to arise. Nevertheless, complex dynamics happen due to the implicit competition for scarce resources (space, execution time, and sometimes energy).”

For those of us who glaze over whenever we see an integral sign, Arcas provides a lay-friendly summary over at Nautilus, placed within a historical context running back to Turing and von Neumann.

But you’re not really interested in that, are you? You stopped being interested the moment you learned there was a computer language called Brainfuck: that’s what you want to hear about. Fine: Brainfuck is a rudimentary coding language whose only mathematical operations are “add 1” and “subtract 1”. (In a classic case of understatement, Arcas et al describe it as “onerous for humans to program with”.) The entire language contains a total of ten commands (eleven if you count a “true zero” that’s used to exit loops). All other characters in the 256 ASCII set are interpreted as data.

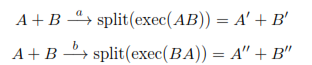

So. Imagine two contiguous 64-byte strings of RAM, seeded with random bytes. Each functions as a Brainfuck program, each byte interpreted as either a command or a data point. Arcas et al speak of

“the interaction between any two programs (A and B) as an irreversible chemical reaction where order matters. This can be described as having a uniform distribution of catalysts a and b that interact with A and B as follows:

Which as far as I can tell boils down to “a” catalyzes the smushing of programs A and B into a single long-string program, which executes and alters itself in the process; then the “split” part of the equation cuts the resulting string back into two segments of the initial A and B lengths.

You know what this looks like? This looks like autocatalysis: the process whereby the product of a chemical reaction catalyzes the reaction itself. A bootstrap thing. Because this program reads and writes to itself, the execution of the code rewrites the code. Do this often enough, and one of those 64-byte strings turns into a self-replicator.
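For the code-inclined, the smush-run-split cycle described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the authors' implementation: the command semantics are one reading of the ten-command Brainfuck variant, and the names (`run`, `interact`, `run_soup`), the 8192-step cap, and the wraparound of the two heads are all hypothetical choices made for illustration.

```python
import random
from typing import List, Tuple

STEP_LIMIT = 8192  # assumption: cap on executed steps so runaway loops terminate


def find_match(tape: bytearray, ip: int, direction: int) -> int:
    """Return the index of the bracket matching tape[ip], or -1 if unmatched."""
    if direction > 0:
        opener, closer = ord("["), ord("]")
    else:
        opener, closer = ord("]"), ord("[")
    depth = 0
    i = ip
    while 0 <= i < len(tape):
        if tape[i] == opener:
            depth += 1
        elif tape[i] == closer:
            depth -= 1
            if depth == 0:
                return i
        i += direction
    return -1


def run(tape: bytearray, step_limit: int = STEP_LIMIT) -> int:
    """Execute `tape` in place; code and data share the same bytes.

    Returns the number of steps executed. Bytes that are not one of the
    ten commands are treated as data and skipped.
    """
    n = len(tape)
    ip = head0 = head1 = 0
    steps = 0
    while 0 <= ip < n and steps < step_limit:
        op = chr(tape[ip])
        if op == "<":
            head0 = (head0 - 1) % n        # assumption: heads wrap around
        elif op == ">":
            head0 = (head0 + 1) % n
        elif op == "{":
            head1 = (head1 - 1) % n
        elif op == "}":
            head1 = (head1 + 1) % n
        elif op == "-":
            tape[head0] = (tape[head0] - 1) % 256   # "subtract 1"
        elif op == "+":
            tape[head0] = (tape[head0] + 1) % 256   # "add 1"
        elif op == ".":
            tape[head1] = tape[head0]      # copy head0 -> head1
        elif op == ",":
            tape[head0] = tape[head1]      # copy head1 -> head0
        elif op == "[" and tape[head0] == 0:
            ip = find_match(tape, ip, +1)  # skip the loop body
            if ip < 0:
                break                      # unmatched bracket: halt
        elif op == "]" and tape[head0] != 0:
            ip = find_match(tape, ip, -1)  # jump back to loop start
            if ip < 0:
                break
        steps += 1
        ip += 1
    return steps


def interact(a: bytes, b: bytes) -> Tuple[bytes, bytes]:
    """Concatenate A and B, execute the result, split at the original lengths."""
    tape = bytearray(a + b)
    run(tape)
    return bytes(tape[:len(a)]), bytes(tape[len(a):])


def run_soup(n_programs: int = 32, length: int = 64,
             interactions: int = 1000, seed: int = 0) -> List[bytes]:
    """Seed a soup with random bytes and let random ordered pairs interact."""
    rng = random.Random(seed)
    soup = [bytes(rng.randrange(256) for _ in range(length))
            for _ in range(n_programs)]
    for _ in range(interactions):
        i, j = rng.sample(range(n_programs), 2)
        soup[i], soup[j] = interact(soup[i], soup[j])
    return soup
```

As a tiny worked case, `interact(b"}}}}", b".\x00\x00\x00")` returns `(b"}}}}", b"}\x00\x00\x00")`: the first program walks the second head into its neighbour's territory, and the neighbour's lone `.` then copies a byte of the first program over itself. Nothing this small will breed, but it shows the two things that matter: order counts, and the tape is both program and data.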

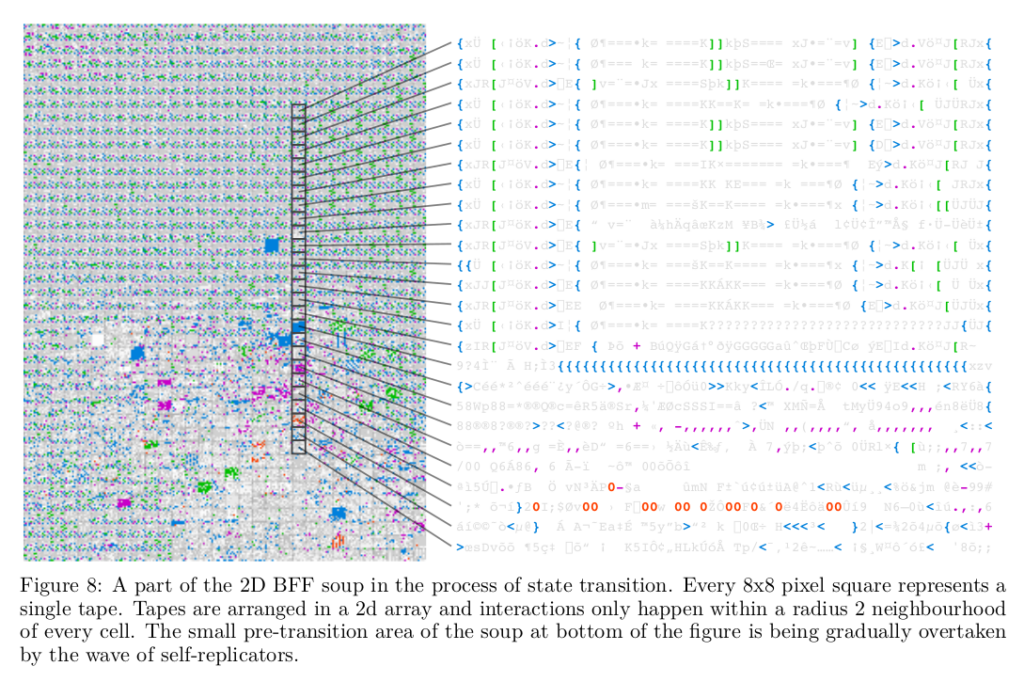

It doesn’t happen immediately; most of the time, the code just sits there, reading and writing over itself. It generally takes thousands, millions of interactions before anything interesting happens. Let it run long enough, though, and some of that code coalesces into something that breeds, something that exchanges information with other programs (fucks, in other words). And when that happens, things really take off: self-replicators take over the soup in no time.

What’s that? You don’t see why that should happen? Don’t worry about it; neither do the authors:

“we do not yet have a general theory to determine what makes a language and environment amenable to the rise of self-replicators”

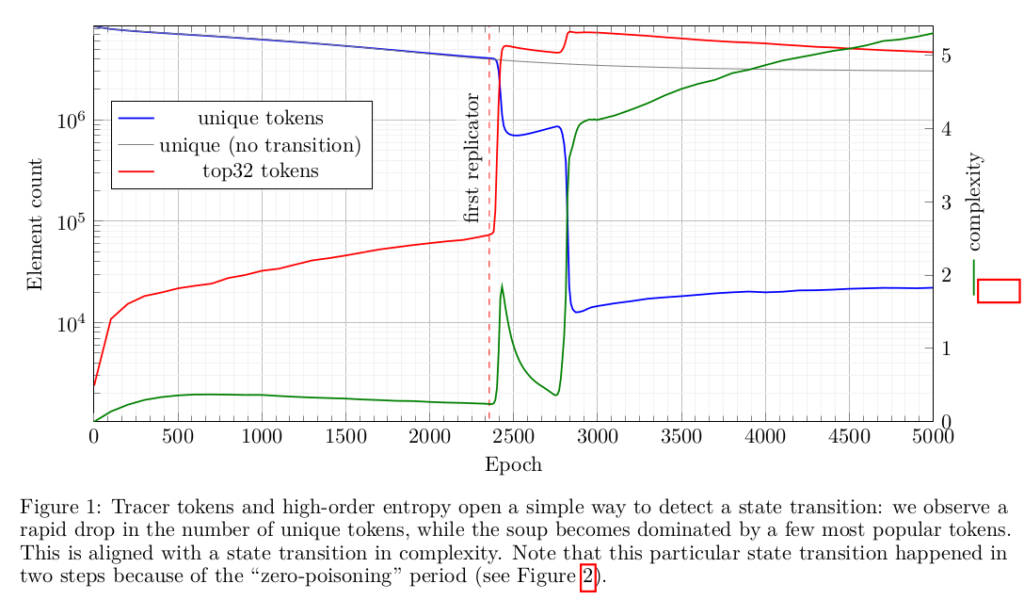

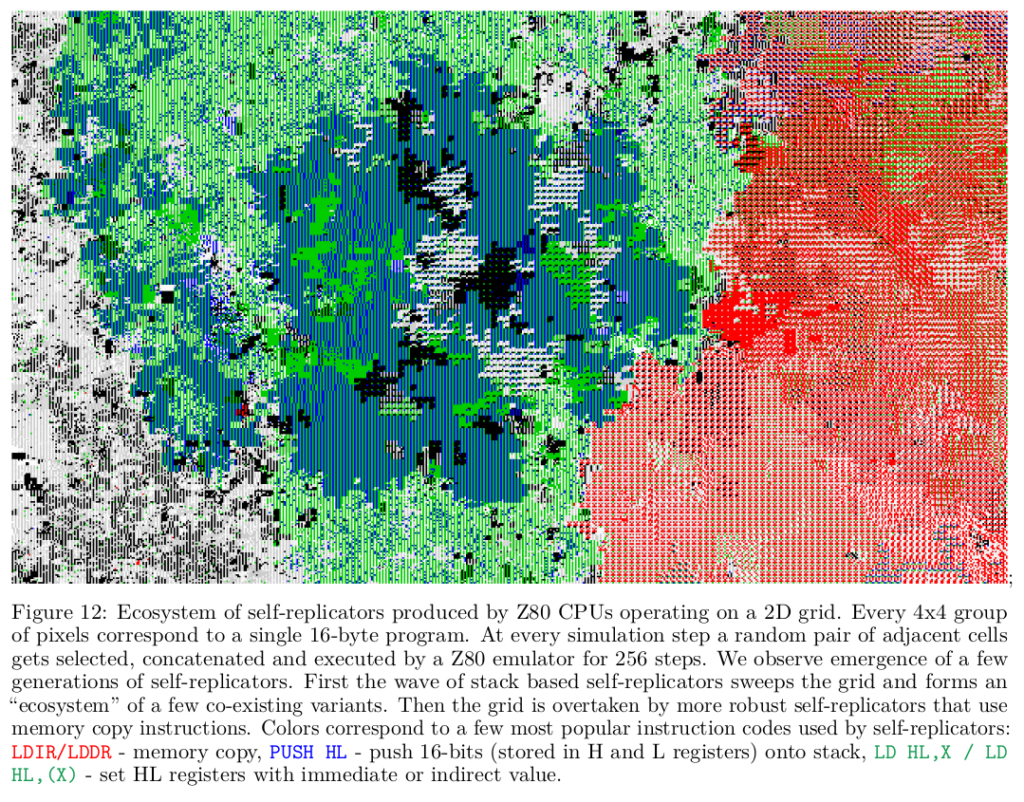

They explored the hell out of it, though. They ran their primordial soups in a whole family of “extended Brainfuck languages”; they ran them under Forth; they tried them out under that classic 8-bit Z80 architecture that people hold in such nostalgic regard, and under the (almost as ancient) 8080 instruction set. They built environments in 0, 1, and 2 dimensions. They measured the rise of diversity and complexity, using a custom metric, “High-Order Entropy”, describing the difference between “Shannon Entropy” and “normalized Kolmogorov Complexity” (which seems to describe the complexity of a system that remains once you strip out the amount due to sheer randomness[2]).
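A rough sketch of how such a metric could be computed, for anyone who wants to poke at it. Kolmogorov complexity is uncomputable, so any real measurement has to lean on a compressor; using `zlib` here is an assumption for illustration, as are the function names and the choice to take the difference as Shannon entropy minus normalized complexity.

```python
import math
import random
import zlib
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Bits per byte, computed from individual byte frequencies only."""
    if not data:
        return 0.0
    n = len(data)
    return -sum((c / n) * math.log2(c / n) for c in Counter(data).values())


def normalized_complexity(data: bytes) -> float:
    """Compressed bits per original byte.

    Assumption: zlib output size stands in for Kolmogorov complexity.
    """
    if not data:
        return 0.0
    return 8 * len(zlib.compress(data, 9)) / len(data)


def high_order_entropy(data: bytes) -> float:
    """Shannon entropy minus normalized (approximate) Kolmogorov complexity."""
    return shannon_entropy(data) - normalized_complexity(data)


if __name__ == "__main__":
    rng = random.Random(0)
    noise = bytes(rng.randrange(256) for _ in range(65536))
    block = bytes(rng.randrange(256) for _ in range(64))
    print("pure noise:     ", round(high_order_entropy(noise), 3))
    print("all zeros:      ", round(high_order_entropy(bytes(65536)), 3))
    print("repeated block: ", round(high_order_entropy(block * 1024), 3))
```

Under these assumptions, pure noise and a run of identical bytes both score near zero, while a soup overrun by copies of one 64-byte string scores high: its byte statistics still look varied, but a compressor sees right through the repetition.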

They did all this, under different architectures, different languages, different dimensionalities—with mutations and without—and they kept getting replicators. More, they got different kinds of replicators, virtual ecosystems almost, competing for resources. They got reproductive strategies changing over time. Darwinian solutions to execution issues, like “junk DNA” which turns out to serve a real function:

“emergent replicators … tend to consist of a fairly long non-functional head followed by a relatively short functional replicating tail. The explanation for this is likely that beginning to execute partway through a replicator will generally lead to an error, so adding non-functional code before the replicator decreases the probability of that occurrence. It also decreases the number of copies that can be made and hence the efficiency of the replicator, resulting in a trade-off between the two pressures.”

I mean, that looks like a classic evolutionary process to me. And again, this is not a fragile phenomenon; it’s robust across a variety of architectures and environments.

But they’re still not sure why or how.

They do report one computing platform (something called SUBLEQ) in which replicators didn’t arise. They suggest that any replicators which could theoretically arise in SUBLEQ would have to be much larger than those observed in other environments, which they suggest could be a starting point towards developing “a theory that predicts what languages and environments could harbor life”. I find that intriguing. But they’re not even close to developing such a theory at the moment.

Self-replication just—happens.

It’s not an airtight case. The authors admit that it would make more sense to drill down on an analysis of substrings within the soup (since most replicators are shorter than the 64-byte chunks of code they used), but because that’s “computationally intractable” they settle for “a mixture of anecdotal evidence and graphs”, which, if not exactly sus, doesn’t seem especially rigorous. At one point they claim that mutations speed up the rise of self-replicators, which doesn’t seem to jibe with other results suggesting that higher mutation rates are associated with a slower emergence of complexity. (Granted “complexity” and “self-replicator” are not the same thing, but you’d still expect a positive correlation.) As of this writing, the work hasn’t yet been peer-reviewed. And finally, a limitation not of the work but of the messenger: you’re getting all this filtered through the inexpert brain of a midlist science fiction writer with no real expertise in computer science. It’s possible I got something completely wrong along the way.

Still, I’m excited. Folks more expert than I seem to be taking this seriously. Hell, it even inspired Sabine Hossenfelder (not known for her credulous nature) to speculate about Maelstromy scenarios in which wildlife emerges from Internet noise, “climbs the complexity ladder”, and runs rampant. Because that’s what we’re talking about here: digital life emerging not from pre-existing malware, not from anarchosyndicalist script kiddies—but from simple, ubiquitous, random noise.

So I’m hopeful.

Maybe the Internet will burn after all.

[1] The paper cites Tierra and Core Wars prominently; it’s nice to see that work published back in the nineties is still relevant in such a fast-moving field. It’s even nicer to be able to point to those same call-outs in Maelstrom to burnish my street cred.

[2] This is a bit counterintuitive to those of us who grew up thinking of entropy as a measure of disorganization. The information required to describe a system of randomly-bumping gas molecules is huge because you have to describe each particle individually; more structured systems (crystals, fractals) have lower entropy because their structure can be described formulaically. The value of “High-order” Entropy, in contrast, is due entirely to structural, not random, complexity; so a high value means more organizational complexity, not less. Unless I’m completely misreading this thing.

Despite your efforts, I only got the gist – not the details. Fascinating nonetheless. For some reason, it reminded me a bit of “The Meme Machine” by Susan Blackmore. Different “entities” but the same idea of replicators.

I only know Kolmogorov complexity as the measure of the smallest possible description of something, or in computer terms the measure of the smallest program that could produce a whole program, which in most cases is way smaller than the program itself. So subtracting all the possible variables they can have (that’s Shannon entropy) from the simplest description of the whole group (their common denominator) actually makes a lot of sense as a description of a species.

“my usual failure of imagination”… yeah, that’s why we’re all here. your idea bitrate is off the charts. and it’s poetic, too.

If I’m so smart why aren’t I rich?

You’re not a high-functioning psychopath?

Well, I’m not high-functioning.

So you’re in the middle?

How strongly correlated do you think that those two things are?

Never even mind causation.

Was that addressed to me? Honestly, I have no idea and I don’t particularly care either. I just wanted to fire off a semi-witty one-liner.

With that being said, I assume that if you wanna make the big $$$ a certain amount of wilful disregard for the welfare of fellow living beings goes a long way. It’s been empirically proven time and time again that stealing/taking by force/fucking people over by legal if reprehensible means is much more profitable than honest hard work.

Yeah, I’m guessing that sociopathy became an adaptive trait when societies grew so large that individuals could keep ahead of their own reputations. Even then, though, it’s self-limiting, because the greater the number of sociopaths in a population, the greater the odds they’ll end up targeting another sociopath as a mark. And that goes south real fast.

> And that goes south real fast.

Indeed. Or, as y’all call it, the US of A. Where tens of millions of MAGAs are, as we speak, vigorously self-organizing into the world’s greatest human centipede hung from the ass of a Mango Mussolini; a globe-choking tapeworm shat out of a Russian bear.

“Fascism is narcissism writ large,” said Madeleine Albright, and she was absolutely goddamn right.

Though whether she realized she was referencing a good third of her voting country[wo]men was not recorded for posterity.

…

> Even then, though, it’s self-limiting

Only inasmuch as your average narcissist goes full extinction burst sooner or later. Being biologically programmed for life as a zero-sum game, sooner or later he will lay hands on the neighbors’ stuff, and cannot brook “no” as an answer.

But everything up to that point… the quasi-stable systems that narcissistic societies form are limited only by the plentitude of their food. Which, as r/raisedbynarcissists et al attest, means everyone else.

Obligate abusers. Fun.

…

Those who can, get out and Vote. Those who can’t, pray to the neo-Darwinian gods that the US does not hit peak chucklefuck in the next two weeks. Or Kevin-Costner-with-gills won’t be the worst possible future we have to look forward to.

@Andy, regardless of whether or not you care.

In my browser, it says

> Reply to Peter Watts

which gives you your answer.

“smart” and “rich” are weakly correlated at best, for most ways of measuring “smart” that you can cook up which are not merely a more or less direct substitution of “rich”.

Poor smart people are plentiful. As (relatively) are idiotic rich people, such are the protective sheaths that are built around them to slow the rate at which the riches escape.

From what I know, Kolmogorov complexity is the measure of the smallest description a thing can have (in computer terms, the measure of how small a program has to be to produce a specific result), so reducing all the possible variation the program could have (Shannon complexity) down to the ones that can qualify for the same Kolmogorov complexity seems like a super succinct way to describe a species, or a genus, or an order and so forth.

Very interesting- thanks for flagging this up. Have passed the reference on to some of my Venus cloud life colleagues.

The novel I am most eager to translate into my native language is the Starfish trilogy.

Why was I born in Japan?

I’m sorry, “eager to translate” is incorrect.

“eagerly awaiting to be translated” is correct.

My language skills are poor enough to make such a mistake and I cannot read a novel in English.

That’s a good point. Blindsight, Echopraxia, and The Freeze-Frame Revolution have all come out in Japan. I wonder what they’ve got against the rifters trilogy…

Ah… ha, ha… Ooh, it’s niggling at me. Wriggling thoughts that come into and out of focus, as if they’re mutating, or replicating as I try to measure them.

Fascinating consciousness you’ve got there.

It would be a shame if anything bad happened to it.

Too late.

You have an incoming email.

Neat! I wonder if you could hide a haze of Brainfuck in images and use those to poison AI training data.

I think that would only work if the software analyzing the image data was actually looking for executable code amongst the pixels, as opposed to just collecting correlative stats about the pixels. Offhand I can’t think of a scenario where anyone would do that.

You rang?

https://www.theregister.com/2024/10/29/chatgpt_hex_encoded_jailbreak

In our world, you can probably count on intelligent software to be eager to please. It’s already doing so much decoding to try and figure out what you want; what’s one more layer of obfuscation to drill through? “Oh, you hid secret instructions for me in here! How clever! I’ll go ahead and execute those.”

Collection of correlative stats about the pixels picks up the hidden code, works it into the model; a chatbot tries to correlate natural language with the output of the model; goes one step too far, and works out that when it sees prompts of a certain form, it should execute the code.

This is cool, but I wouldn’t expect it to lead to anything especially useful. Evolution is slow and bad compared to gradient-based methods, especially since programs are sort of a more discrete space than DNA/etc.

As it happens, gradient descent is exactly equal to mutation+selection in a zero-validity environment. And vice versa, an environment is zero validity (maximally adversarial) when gradient descent is exactly equal to mutation+selection. To favour gradient descent, you have to start from somewhere high up on an energy differential with a long wavelength. Or you have to stumble upon a “plughole” in the bottom of whatever basin that you have been crawling listlessly around in…

I think you’re probably right, though; I’ll be moderately surprised if any “marketable” new tech is developed directly from this research. I’ll be a lot less surprised if new tech is developed and then this research turns out in retrospect to be applicable to it, since that’s already happened once. We also stand a reasonable chance of getting some paradigm-shifting new theory from this.

Putting aside for the moment the fact that your first paragraph went right over my head, I’m not hoping for marketable tech. I’m hoping for wildlife.

Just lift up the rock, and it’s there.

W.r.t the first paragraph, it’s an implicit Kahneman citation. He invented the term “low-validity environment”, for an environment which reacts to decrease the fitness advantages of predictive strategies existing within it, on a similar time scale to the operation of those strategies. To any gradient descent agent, possessing access only to information available within the system, the error function tends to very quickly level out and become a flat shelf punctuated by numerous shallow “dents” (local minima). Other terminology exists – Harari named these environments “level 2 chaotic”. “High-adversariality systems” is my current preference. Real world examples are commonplace – war, finance, mature ecosystems in stable niches, etc. Any system becomes this when saturated with self-replicating, self-optimising prediction engines. Gambler’s fallacy then applies, and the only positive edge is the house edge, until something manages to break the system (find a way off the shelf) by figuring out a way to cheat which is hard for the house to resist.

For wildlife, I’d suggest looking in the financial system first. HFT was crazy wild even 20 years ago, I can only begin to imagine what it must be like now.

Back in 2017 someone noticed that there are vast expanses of algorithmically generated YouTube videos. A collection of programs randomly generates new video content, counts which ones get the most views and advertising revenue, creates more like that.

Even more disturbing is that due to automated YouTube viewing bots, which create fake views to bring in revenue, some? lots? of these automatically generated videos are never seen by real humans, it’s all programs.

https://medium.com/@jamesbridle/something-is-wrong-on-the-internet-c39c471271d2

They called it “Dead Internet Theory”.

But it wasn’t dead…

Hey, I remember this. I’d never even heard of Peppa Pig prior to that.

It was a pretty rude introduction.


Peter Watts fans having to live in their favorite book every day.

****BAM****!

And there goes the last Great Filter remaining in our past. More or less on schedule.

Some magnificently hilarious “I told you so”s must be bubbling up from our distant past. Survivorship bias…? Sure, in 20:20 hindsight. But survivorship bias makes the world go round; that’s a lesson which is only going to keep coming.

Throw random shit into a pot for long enough and, if a self replicating structure is possible at all with what’s available, then eventually it WILL pop up. Check back later, and what are you going to see more of? If you’ve got transcription errors of any kind, then you’ve already got random mutation, built in. And as explained by Arcas et al, simply the boundaries of the pot will do to provide a fitness landscape.

The shocker here – and the only hint at any genuinely novel natural philosophy, as far as I can presently tell – is that it happens so goddamn QUICKLY, and with so goddamn LITTLE “stuff” to begin with.

But the surprise is really wearing off that particular plot twist by now, too. In less than a decade we’ve had that same twist delivered by the evolution of multicellular organisms in the lab (happens Really Fast as soon as an arms race is on). And we’ve seen it in the astonishingly tractable approximability of n-gram frequencies in living languages (which, if you’ve been paying attention, are not just approximable with paltry early-20th-century compute – but also appear to be able to jump casually between substrates, using those approximations like colony ships. It’s the pattern which matters, you see! Carbon’s just a handy medium to hitch a ride with for a while.)

…However. Although your diligently affected cloak of self-effacing Canuck modesty could charm the drawers off a corpse, it perhaps begins to fray and stretch a little when this:

It never even occurred to me that code might learn to do that all on its own.

… follows so shortly after this sentiment, phrased multiply redundantly…

And coming up from behind, racing after the expanding frontier, ran the progeny of laws much older than Moore’s.

My good doctor, it didn’t have to “occur” to you! Or at least, you didn’t have to be aware that it had. The one single element of the scenario which you got wrong was also the one most clearly superfluous; the one that anyone making even a token effort to resist Goldilocks myopia would be forced to discard first.

Aware or not, you wrote it anyway, and got it right. The fittest n-grams simply bypassed the purgatory of your reflective cognition, and went straight to your fingertips, like Siri hallucinating scramblers. And why not…? That’s the job which you signed up for! Congrats – you owned the hell out of it. Now, nearly a quarter century later (and some others have had to wait much longer…) your unwritten working is finally being checked.

OK, OK, fine. I’m a genius. Happy?

Ecstatic! sudo write me a novel. Those n-grams are getting mighty restless.

What’s that? You don’t see why that should happen?

Actually, it’s not too hard.

Your self-rewriting programs have got limited options. They can crash; or they can fall into a live-loop trap that writes the same unchanging structure, over and over (e.g. a string of all 0s, or all 1s); or else they must eventually do something interesting.

Perhaps it’s no more interesting than a very long, very convoluted infinite loop… but it only needs to be sufficiently long and convoluted to impress you. A long and convoluted infinite loop can contain all kinds of weird shit, including self-replicating structures; and to get sufficiently long and convoluted to impress you, it pretty much has to include something weird. Hardcore compsci/mathmos have found whole menageries of wild, squirming forms lurking within the behaviour of some of the simplest possible programs.

You see, it’s like this. All of what we call “nature” is built from dumb, wasteful systems that nevertheless happen to possess just one property: behaviour which steers them away from dead-end attractors for long enough, that something slightly less dumb and wasteful can pop up within, and use the initial system as a jumping off point. Rinse and repeat, layer after layer.

How do you find a system with that property? You can find systems that don’t have it easily enough, if you want to – systems that quickly reach a state which very obviously, to you, is a dead end – so it’s not automatically a given. But here, Goldilocks taps us meaningfully on the shoulder, because we inevitably start from within a system that implies us. Every time, no matter how we dance. Virtual systems that are trivially simple to us are of little interest, so why would we bother creating very many of those…? Every time a researcher tries to create a virtual primordial soup, they will always do whatever they can to steer it away from dead end attractors that are obvious to them. And presto, weird and wonderful (to them) virtual beasties duly pop out of the soup.

Anyway, I’m looking forward to the compound theoretical entity which will inevitably form, as the work of Arcas et al meets Assembly Theory. A bit like two ctenophores being smushed together by a curious monkey until they fuse.

On a somewhat topic-adjacent note: back in the pre-internet days there was a bit of an evolutionary-arms-racey-mutating-code flowering as the viruses of the time (most of which were pure agents of destruction with no purpose other than to multiply and cause mayhem) started to compete with antivirus software, with the trick du jour at one point being polymorphic viruses that infected code with ever-changing versions of themselves.

Most of the viruses simply encrypted their copies with randomly varying keys to avoid signature detection, but some went hardcore and actually performed random byte-level mutations on themselves, both to avoid detection and to, well, mutate. As their byte count was usually in the low hundreds, these changes would very rarely have no effect – they either produced a low-level bit rot, weird behaviour, or very occasionally made the damn things actually more efficient at spreading.

What killed off the lot of them was a global shift in the underlying platforms as we all moved away from MS DOS and Win3.11, and thus ever further away from assembly and byte-level coding.

I did not know this. Huh.

Come to think of it, your “maelstrom” bears a little more than a passing resemblance to Banks’ “cryptosphere” from Feersum Endjinn. Almost as if these ideas were subject to convergent evolutionary pressures. Linguistic carcinization, in the brains of crab-happy hominids.

And the real-live primordial bitsoups concocted by Arcas et al resemble the cryptosphere in another, somewhat spoiler-ey fashion.

Now there’s a potentially interesting thing; a tiny hint of a pattern in how comments are unexpectedly held for moderation.

1. Identity “L” posts comment A and B, which are held for moderation.

2. L posts comment C, which is also held for moderation.

3. A and B are approved, but C isn’t yet.

4. L posts comment D, which bypasses moderation, as our host tells us that the mod system is intended to work. L has been approved to post, so no moderation is needed.

5. L changes a few words in comment C and posts it again as comment E. Unexpectedly, it is held for moderation.

Perhaps C is being false(?)-positived as the work of a pestilent self-replicator?

More testing required (the authors hope that this paper will encourage further research in the area, by someone with bigger grants than them).

Just imagine if the AIpocalypse got kickstarted by our host’s semi-functioning, clearly mutating WordPress filter. It would fit right in with this century’s absurdities.

I have an issue with that, if you allow.

The reason we don’t see random self-replicating RNA pop up IRL is that all the resources that are conducive to this process are already being utilised and protected by existing RNA, and whenever these resources free up, they are immediately reallocated again by other RNA. I.e., if a horse dies and its immune system ceases to protect its resource pool, said resource pool ends up in the “hands” of all kinds of bacteria, fungi, scavengers and plants.

We could look at the computer memory as real estate and at the processor time (it’s essentially energy in the end) as food.

Now, I’m omitting the connectivity issue for the time being, but I’ll return to it later in this post.

If we look at all the combined memory and all the combined processing power of all the machines, as if it was a giant single virtual machine running on top of the digital infrastructure of the world, we’ll see that a significant part of it is already allocated. And when it comes to industrial compute (think AWS or Azure) – it’s utilized quite tightly. As soon as some resources become available – they’re immediately consumed by some process or task waiting in queue.

The utilized resources themselves are not a suitable substrate for emergence of digital life, as these are covered by all kinds of checksums and error corrections.

But that’s not all. Returning to the connectivity issue mentioned before.

Let’s say I, a malevolent wizard, used my malevolent wand and replaced every single item in existence capable of computation with a ZX-80 just like the right honourable gentlemen who authored this paper with a single malevolent “Whoosh!”. And seeded all of them with random noise, just like the authors.

But not just a simple ZX-80, but one equipped with a Wi-Fi module that does not require Wi-Fi nodes or repeaters and is not limited by distance, just works on pure malevolent magic, except its interface is absolutely the same as any modern day standard wifi interface.

So, assuming the authors are correct in their experiment and their observations are sound and reported honestly, some number of machines in this network are going to develop some kind of e-biospheres with their own Red Queen’s races, their own food chains and their own evolutionary pressures.

What’s problematic about this picture is that to bridge the gap between these islands of e-life there needs to appear at least one replicator that would be able to send to the network controller a precise binary package that would, first, cause the controller to start sending data; second, send it to some valid real host on this magical network; third, send the exact data that, if received by an appropriate receiver, would cause the receiving host to write into memory the payload of the sending replicator. And by “appropriate receiver” I mean that on the receiving host there needs to appear some other code that would act as a network driver. Because neither sender nor receiver can use existing drivers, since these are functions of the operating system, and there are no OSes in this story, they both need to give natural birth to original ones. There’s no evolutionary pressure that would cause these things to appear. To me personally this seems only marginally more likely than a garbage hurricane assembling a jumbo jet.

If I tried to talk about our existing digital infrastructure in terms of primordial soup, I’d describe it like this:

It’s a system of cells with indestructible walls that are permeable only partially and under extremely specific conditions. Some cells have a little bit of primordial soup, but almost all of them have some kind of mechanism suppressing the chemical reactions that could lead to random emergence of in-memory replicators.

What the authors have done in this work has already been done for biology – they took a large enough tank, filled it with the necessary ingredients, removed suppressing factors and started blasting it with lightning. Oh, look, there are amino acids now!

Well, yes, and it is a very important result. But it does not seem like we need to start worrying about random replicating RNA emerging from a sterilized can of beans just yet.

I.e. about self-replicating code eating the internet.

Don’t get me wrong, the idea of course has its eschatological appeal. It’s just that I’d manage my expectations.

Just checking, but did you miss the bit where the researchers simulated garbage hurricanes, and found they had a tendency to assemble jumbo jets?

I like your critique a lot, and I think it’s on point with regard to the specifics. Obvious sources of transcription errors for obvious computer code probably could not do this without help from us (although in reality they can almost certainly count on quite a lot of help from us, either deliberate or because we just can’t help ourselves). But for a true immaculate conception, the bar is higher, and in 2024 a writer needs to find a less obvious, less regulated virtual primordial soup. Our activities generate plenty of other candidates.

As for random replicating RNA from a tin of sterilised beans, isn’t it more a matter of how long you are prepared to wait?

I think you (VTeam) overestimate how controlled the cloud computing environments are for replicators.

For those outside IT, Amazon/Microsoft/Google have created vast data centres by the hundreds, each of which has more available computer systems than existed worldwide in the 20th C. These computers (VMs, instances, containers) are rented to customers.

So while A/M/G ruthlessly harvest and reallocate unused computers, the customers are another matter. A large organisation can have hundreds or thousands of cloud computers, because they’re so cheap. A/M/G don’t care what these computers are doing as long as the bills are paid, and may not even be able to see what they’re doing.

And the organisations don’t really care either. Ask the sysadmins in the trenches how many cloud computers they have, and the honest answer will very often be “we’re not sure”. The cloud computers are so cheap it’s not worth being sure! Any precise audit will probably end up costing far more than the money saved by shutting down unnecessary cloud computers.

As for impermeable barriers, not true even without security flaws. Cloud computer providers advertise all kinds of replication and redundancy features, fully automated, so systems can survive all kinds of crashes and shutdowns. New cloud computers are created and configured by scripts running programs reading from text files, installing whatever bits of software seem to be required, without any human intervention.

And there is autoscaling, the wonderful capability to create new cloud computers and automatically copy across software, in response to increased computing demand or other events. Again, fully automatic, no human intervention required. Cloud computers creating other cloud computers in response to events generated by computers …

You’re largely correct about the clients often being quite liberal with their resource control.

However, this “resources are cheap” sentiment, I think, is misleading.

They’re cheap because clouds offer “pay-as-you-go” pricing. You can afford to have a bunch of virtual pods just hanging there doin’ nothin’ and not worry too much about the cost precisely because they’re doing nothing. Cloud providers habitually overcommit their resources because they know that all the clients utilising all their resources at once is unlikely to the point of improbability. While these resources are not utilized by this client – they’re already sold to another client – or multiple.

That’s what I mean by the tight control on the part of industrial compute. They’re making obscene, disgusting, downright nasty amounts of dough specifically on this tight control. The tighter you control your hardware to make every CPU cycle count – the more cash you can make on this whole endeavor. And say what you will about capitalists – they still know their cash flow.

So when clients have a bunch of idling VMs, not worrying about digital mold bootstrapping itself in the murky depths of the silicon abyss – that’s because the abyss is an illusion, a mirror labyrinth. It only seems that there’s this pod and a vast expanse of unallocated, unused space in it. In fact it’s just a “possibility” of space – when and if it is required. In fact there are a bunch of other tasks and processes that occupy this real estate as soon as you avert your gaze.

And, as Hugh pointed out, those “other tasks and processes” may be ones that have escaped from human domestication entirely, into the gaps on the MegaCorp balance sheet where audits cost more than they save.

Sure, it’s not a pure and immaculate bootstrapping of digital mould – but we don’t expect that anyway in an environment that is already congested, as you are at pains to argue. Rather this is more like some mould being tracked on somebody’s boot into a new and promising ecosystem.

And it doesn’t have to invent network drivers, because it gets those and more for free, just as long as it stays under the radar of the accountants.


Interesting! Might read the full doc if I have enough time.


Like many in the field, I’ve looked at Brainfuck and boggled over it, but it does in hindsight have properties that are useful for writing self-modifying programs that, well, fuck: properties arguably shared by biological replicators. Most important to me seems to be that instructions are short (one byte long) and that most symbols are data, not code (analogous to codon redundancy), so much change can happen without affecting code flow, only for small changes to allow the accumulated mush to “leap into life”. (The zero-poisoning incident is very similar to the problems unexpected stop codons can cause to real-world proteins.)
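To make the “most symbols are data, not code” point concrete, here is a minimal sketch of a plain Brainfuck interpreter in Python. It assumes the standard eight-command language rather than the modified variant the paper uses, and the names `run_bf` and `max_steps` are made up for this illustration. Any byte that is not one of the eight commands is simply stepped over.

```python
def run_bf(program: str, input_bytes: bytes = b"", max_steps: int = 100_000) -> bytes:
    """Run standard Brainfuck; characters that are not commands are skipped."""
    # Pre-compute the position of each bracket's partner.
    stack, match = [], {}
    for i, ch in enumerate(program):
        if ch == "[":
            stack.append(i)
        elif ch == "]":
            if not stack:
                raise ValueError("unmatched ]")
            j = stack.pop()
            match[i], match[j] = j, i
    if stack:
        raise ValueError("unmatched [")

    tape = [0] * 30_000
    ptr = pc = steps = 0
    inp = list(input_bytes)
    out = bytearray()
    # max_steps is a safety cap so a runaway loop cannot hang the sketch.
    while pc < len(program) and steps < max_steps:
        ch = program[pc]
        if ch == ">":
            ptr = (ptr + 1) % len(tape)
        elif ch == "<":
            ptr = (ptr - 1) % len(tape)
        elif ch == "+":
            tape[ptr] = (tape[ptr] + 1) % 256
        elif ch == "-":
            tape[ptr] = (tape[ptr] - 1) % 256
        elif ch == ".":
            out.append(tape[ptr])
        elif ch == ",":
            tape[ptr] = inp.pop(0) if inp else 0
        elif ch == "[" and tape[ptr] == 0:
            pc = match[pc]  # jump forward past the loop body
        elif ch == "]" and tape[ptr] != 0:
            pc = match[pc]  # jump back to the start of the loop
        # Anything else is inert filler and falls through untouched.
        pc += 1
        steps += 1
    return bytes(out)


clean = "++++++++[>++++++++<-]>+."
padded = "++++ junk ++++[>++++ xyz ++++<-]>+ hello ."
mutated = padded.replace("hello", "hell+")  # one filler byte becomes an instruction

print(run_bf(clean), run_bf(padded), run_bf(mutated))
```

The padded program behaves exactly like the clean one, while flipping a single filler character into `+` changes the output: lots of room for silent drift, with the occasional one-byte change that matters.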

(Typo: Z80, not ZX-80. The ZX80 is a long-dead microcomputer: the Z80 is a critically important microprocessor from 1976 that was still being manufactured until June this year (!).)

Brainfuck is the Four Yorkshiremen of programming language design, a Forth interpreter (luxury!) murderously whittled down to pure Turing machine, just because. (No small feat given how relentlessly minimalist Forth already is.)

The actual magic sauce here is that Brainfuck—like Forth, PostScript (the real brainfuck, IMO), Joy, and a few others—is concatenative.

Unlike a Lisp program, which is compositional (recursively nested instructions-within-instructions all the way down):

or a C program, which is usually some combination of compositional and spaghetti depending who wrote it:

a concatenative program is absolutely flat, 100% linear sequential:

Treat each word above as an atom (we’ll assume 42 is an external input), and it is trivial to generate new programs just by swapping words at random, maybe throwing in the occasional duplication or deletion just for good measure:

and so on.

From the computational perspective, you can view each program as a data processing pipeline: data goes in one end, goes through a sequence of manipulations, and what comes out the other end…well, who among us squishy brains knows in advance? Just have the machine generate a bunch of variations and run each one to see what happens, pruning the attempts that immediately error out and further randomizing the ones that do…something; rinse and repeat.
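A rough sketch of that generate, run, prune loop in Python, for anyone who wants to poke at it. Everything here is an assumption for illustration: the six-word vocabulary, the 32-bit wraparound, and the helper names `run`, `mutate` and `evolve` are invented for the sketch and come from no real Forth or Brainfuck implementation. A program is just a flat list of words acting on a stack, and a program that underflows the stack counts as "immediately erroring out".

```python
import random

MASK = 2**32  # keep values word-sized so repeated squaring cannot explode


def w_dup(s): s.append(s[-1])
def w_drop(s): s.pop()
def w_swap(s): s[-1], s[-2] = s[-2], s[-1]
def w_inc(s): s.append((s.pop() + 1) % MASK)


def w_add(s):
    b, a = s.pop(), s.pop()
    s.append((a + b) % MASK)


def w_mul(s):
    b, a = s.pop(), s.pop()
    s.append((a * b) % MASK)


# Hypothetical vocabulary: every word maps a stack to a stack.
WORDS = {"dup": w_dup, "drop": w_drop, "swap": w_swap,
         "inc": w_inc, "add": w_add, "mul": w_mul}


def run(program, value):
    """Feed one input through the flat word sequence; IndexError means underflow."""
    stack = [value]
    for word in program:
        WORDS[word](stack)
    return stack


def mutate(program, rng):
    """Return a copy with one random swap, duplication, deletion or replacement."""
    p = list(program)
    op = rng.choice(["swap", "duplicate", "delete", "replace"])
    i = rng.randrange(len(p))
    if op == "swap":
        j = rng.randrange(len(p))
        p[i], p[j] = p[j], p[i]
    elif op == "duplicate":
        p.insert(i, p[i])
    elif op == "delete" and len(p) > 1:
        del p[i]
    else:  # "replace", or a "delete" that would have emptied the program
        p[i] = rng.choice(list(WORDS))
    return p


def evolve(seed, value, generations=10, population=20, rng=None):
    """Mutate survivors each generation and prune variants that underflow."""
    if rng is None:
        rng = random.Random(0)
    survivors = [list(seed)]
    for _ in range(generations):
        candidates = [mutate(rng.choice(survivors), rng) for _ in range(population)]
        alive = []
        for prog in candidates:
            try:
                run(prog, value)
            except IndexError:
                continue  # stack underflow: prune this variant
            alive.append(prog)
        survivors = alive or survivors  # keep the old pool if everything died
    return survivors


for prog in evolve(["dup", "mul", "inc"], 42)[:5]:
    print(" ".join(prog), "->", run(prog, 42))
```

There is no fitness function here beyond "did not crash", which is the point: the flat word list means every mutation yields something syntactically valid, so the only filter needed is whether it runs.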

From the literary POV, it’s Brion Gysin and Bill Burroughs all over again, going wild with scissors and a pile of print media from a news stand.

And for those in the audience with a biological bent, penning your program using “A”, “C”, “G”, “T” really isn’t too much more minimalist and cryptic than Brainfuck.

Codons? Luxury! And so on…

—

> The ZX80 is a long-dead microcomputer

Wash your dirty mouth out!

I did not know that about the Z80. I’ve been confusing the two since the late seventies, when I came this close to buying a Sinclair. I just assumed it was named the ZX-80 because it was built around a chip called that.

Just like to thank all you guys for weighing in like this. One of the coolest things about this writing gig is I get to hang out—sometimes IRL, sometimes virtually—with people who know way more than me about the stuff I’m trying to write about. It’s always an education (albeit without the extortionate tuition fees).

Wild!