Iterating Towards Bethlehem

This hairy little spider right here. A pinpoint brain with fewer than a million neurons, somehow capable of mammalian-level problem-solving. And just maybe, a whole new approach to cognition.

This is an old story, and a popsci one, although I've only discovered it now (with thanks to Sheila Miguez) in a 2006 issue of New Scientist. I haven't been able to find any subsequent reports of this work in the primary lit. So take it with a grain of salt; as far as I know, the peer-reviewers haven't got their talons into it yet. But holy shit, if this pans out…

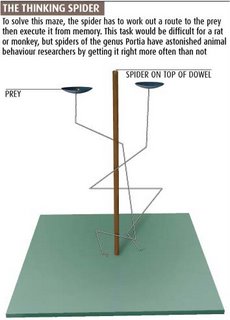

Here's the thumbnail sketch: we have here a spider who eats other spiders, who changes her foraging strategy on the fly, who resorts to trial-and-error techniques to lure prey into range. She will brave a full frontal assault against prey carrying an egg sac, but sneak up on an unencumbered target of the same species. Many insects and arachnids are known for fairly complex behaviors (bumblebees are the proletarian's archetype; Sphex wasps are the cool grad-school example), but those behaviors are hardwired and inflexible. Portia here is not so rote: Portia improvises.

But it's not just this flexible behavioral repertoire that's so amazing. It's not the fact that somehow this dumb little spider, with its tiny, crude optics, has visual acuity to rival a cat's (even though a cat's got orders of magnitude more neurons in one retina than our spider has in her whole damn head). It's not even the fact that this little beast can solve a maze: recognizing prey, then working out an approach path along which that prey is not visible (i.e., she can't just keep her eyes on the ball; she has to develop and remember a search image), then following her best-laid plans from memory, including recognizing when she's made a wrong turn and retracing her steps, all the while out of sight of her target. No, the really amazing thing is how she does all this with a measly 600,000 neurons: how she pulls off cognitive feats that would challenge a mammal with seventy million or more.

She does it like a Turing Machine, one laborious step at a time. She does it like a Sinclair ZX-80: running one part of the system then another, because she doesn't have the circuitry to run both at once. She does it all sequentially, by timesharing.

She'll sit there for two fucking hours, just watching. It takes that long to process the image, you see: whereas a cat or a mouse would assimilate the whole hi-res vista in an instant, Portia's poor underpowered graphics driver can only hold a fraction of the scene at any given time. So she scans, back and forth, back and forth, like some kind of hairy multilimbed Cylon centurion, scanning each little segment of the game board in turn. Then, when she synthesizes the relevant aspects of each (God knows how many variables she's juggling, how many pencil sketches get scribbled onto the scratch pad because the jpeg won't fit), she figures out a plan, and puts it into motion: climbing down the branch, falling out of sight of the target, ignoring other branches that would only seem to provide a more direct route to payoff, homing in on that one critical fork in the road that leads back up to satiation. Portia won't be deterred by the fact that she only has a few percent of a real brain: she emulates the brain she needs, a few percent at a time.

I wonder what the limits are to Portia's painstaking intellect. Suppose we protected her from predators¹, and hooked her up to a teensy spider-sized glucose drip so she wouldn't starve. It takes her a couple of hours to capture a snapshot; how long will it take the fuzzy-legged little beauty to compose a sonnet?

Are we looking at a whole new kind of piecemeal, modular intellect here? And why the hell didn't I think of it first?

Update 9/1/08: Tarsitano & Jackson published these results in Animal Behaviour. Thanks to Kniffler for the heads-up.

1 And isn't that a whole other interesting problem, how this little beast can sit contemplating her pedipalps for hours on end in a world filled with spider-eating predators? Do certain antipredator reflexes stay active no matter what, or does she just count on immobility and local cover to hide her ass while she's preoccupied with long-term planning? I'd love to see a cost-benefit analysis of this tradeoff.

Portia photo: by Akio Tanikawa, scammed from Wikipedia under a CC licence.

Maze illo: scammed from New Scientist, under a nine-tenths-of-the-law licence.
