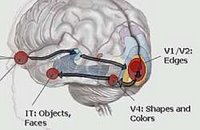

This is an ancient review article (about ten years old, judging by the references), but it contains an intriguing insight from split-brain research that I hadn't encountered before: the right hemisphere remembers stuff with a minimum of elaboration, pretty much as it happens. The left hemisphere makes shit up. Mr. Right just parses things relatively agenda-free, while the left hemisphere tries to force things into context.

The left hemisphere, according to Gazzaniga, looks for patterns. Ol' Lefty's on a quest for meaning.

I learned back in undergrad days that our brains see patterns even where none exist; we're pattern-matching machines, is what we are. But I hadn't realized that such functions were lateralized. This hemispheric specialization strikes me as a little reminiscent of "gene duplication": that process by which genetic replication goes occasionally off the rails and serves up two (or more) copies of a gene where only one had existed before. Which is very useful, because evolution can now play around with one of those copies to its heart's content, and as long as the other retains its original function you don't have to worry about screwing up a vital piece of a working system. (This is something the creationists hope you never learn, since it single-handedly blows their whole the-mousetrap-can't-work-unless-all-the-parts-evolve-simultaneously argument right out of the water.) Analogously, I see one hemisphere experimenting with different functions (imagination, the search for meaning) while the other retains the basic just-the-facts-ma'am approach that traditionally served the organism so well.

Anyway, for whatever reason, we've got a pragmatist hemisphere, and a philosopher hemisphere. Lefty, who imposes patterns even on noise, unsurprisingly turns out to be the source of most false memories. But pattern-matching, the integration of scattered data into cohesive working models of The Way Things Are: that's almost another word for science, isn't it? And a search for deeper meanings, for the reasons behind the way things are? Well, that's not exactly formal religion (it doesn't involve parasitic social constructs designed to exploit believers), but it is, perhaps, the religious impulse that formal religion evolved to exploit. Which is getting uncomfortably close to saying that neurologically, the scientific and religious impulses are different facets of the same thing.

Yes, all those mush-mouthed self-proclaimed would-be reconcilers have been saying that shit for decades. I still bet you never thought you'd read it here.

But bear with. A compulsion to find meaning and order. When there is a pattern to be found, and enough usable data to parse it, the adaptive significance is obvious: you end up using the stars to predict when the Nile is going to flood its banks. If there is no data, or no pattern, you find it anyway, only it's bogus: thunder comes from Zeus, and Noah surfed a tidal bore that carved out the Grand Canyon in an afternoon. Lefty talks in metaphors sometimes, so even when it gets something right it's not the best at communicating those insights; but that's okay, because Mr. Right is just across the hall, unsullied, unspecialized, picking up the slack.

Only what if, now, we're acquiring data that Mr. Right can't handle? The human brain is not designed to parse the spaces between galaxies or between quarks. The scales we evolved to handle extend up or down a few orders of magnitude, losing relevance at each iteration. Are things below the Planck length really, empirically more absurd than those at everyday classical scales, or is it just that brains shaped to function at one scale aren't very good at parsing the other?

Maybe this is where Lefty really comes into his own. Like the thermoregulating feather that got press-ganged, fully-formed, into flight duty, perhaps the bogus-pattern-matching, compulsive purpose-seeking, religious wetware of the brain is most suited for finding patterns it once had to invent, back before there were enough data available to justify such cosmological pretzel logic. Perhaps the next stage is to rewire Mr. Right in Lefty's image, turn the whole brain into a lateral-parsing parallel-processor. Perhaps the next stage of scientific enquiry can only be conveyed by speaking in tongues, practiced by colonies of monks whose metaphors must be parsed by the nonconscious modules of Siri Keeton and his synthesist siblinghood. Maybe the future is a fusion of the religious and the empirical.

Of course, the obvious rejoinder is: if all this late-breaking twenty-first-century data is enough to let the religious impulse do something useful for a change, why is it that religious fundamentalists are still such colossal boneheads? Why, if delusion has segued into profound insight, do half the Murricans out there still believe that the universe is six thousand years old? Why do two thirds of them believe in angels?

And the obvious answer is that, appearances notwithstanding, these people are not living in the twenty-first century at all, but the fourteenth. They walk among us locked into a cultural diving bell reeled out along the centuries, hermetically sealed, impervious to any facts or insights more recent than the spheroid Earth (or even older, in the case of at least one ignorant cow on The View). I can only wonder what would happen if somehow that brittle armor were to shatter, if all this real data were to wash over them and somehow penetrate the circuitry that informs their spastic gyrations and religious gibbering. Would they serve up a Theory of Everything? Would the rest of us recognize it if they did?

Probably no, and probably not. It's just idle speculation, smoke blown out my mind's ass. Still. Might be a story in it somewhere: the day when religion subsumed science, and It Was Good.

At least no one could accuse me of getting into a rut.

Labels: just putting it out there..., neuro, science